Balancing Accuracy and Speed in Fertility AI: A Research and Clinical Implementation Framework

This article provides a comprehensive analysis for researchers and drug development professionals on the critical trade-offs between predictive accuracy and computational speed in artificial intelligence (AI) models for reproductive medicine. It explores the foundational principles of AI model architecture in fertility applications, examines specific high-performance methodologies, addresses key optimization challenges like interpretability and data limitations, and establishes robust validation and comparative frameworks. By synthesizing current research and clinical survey data, this review aims to guide the development of next-generation fertility AI tools that are both clinically actionable and scientifically rigorous, ultimately accelerating their translation from research to clinical practice.


The Core Trade-Off: Understanding Accuracy-Speed Dynamics in Fertility AI

Troubleshooting Guides

Guide 1: Addressing Low Model Accuracy in Clinical Validation

Problem: Your AI model for embryo selection shows high performance on internal validation data but demonstrates significantly lower accuracy (e.g., below 60%) when applied to new clinical datasets or external patient populations.

Diagnosis Steps:

- Check for Data Drift: Compare the statistical properties (e.g., image resolution, lighting conditions, patient demographics) of your new data against the training data. A significant mismatch is a primary cause of performance degradation.

- Validate Ground Truth Consistency: Ensure the clinical outcomes used as labels in your new dataset (e.g., clinical pregnancy confirmation) are defined and determined consistently with your model's training protocol.

- Perform Error Analysis: Categorize the types of embryos or cases where the model is failing. Determine if errors are random or systematic (e.g., the model consistently misclassifies a specific morphological feature).
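The error-analysis step can be sketched in plain Python. The record fields (`site`, `label`, `pred`) and the sample values below are hypothetical. The idea is to group misclassifications by a candidate factor and check whether errors concentrate in one group (suggesting a systematic cause) or spread evenly (closer to random).

```python
from collections import defaultdict

def error_rate_by_group(records, group_key):
    """Return {group: (errors, total, error_rate)} for a list of dict records."""
    counts = defaultdict(lambda: [0, 0])  # group -> [errors, total]
    for rec in records:
        bucket = counts[rec[group_key]]
        bucket[1] += 1
        if rec["pred"] != rec["label"]:
            bucket[0] += 1
    return {g: (e, n, e / n) for g, (e, n) in counts.items()}

# Hypothetical external-validation records (illustrative values only).
records = [
    {"site": "A", "label": 1, "pred": 1},
    {"site": "A", "label": 0, "pred": 0},
    {"site": "A", "label": 1, "pred": 1},
    {"site": "A", "label": 0, "pred": 1},
    {"site": "B", "label": 1, "pred": 0},
    {"site": "B", "label": 0, "pred": 1},
    {"site": "B", "label": 1, "pred": 0},
    {"site": "B", "label": 0, "pred": 0},
]

rates = error_rate_by_group(records, "site")
for site, (errors, total, rate) in sorted(rates.items()):
    print(f"site {site}: {errors}/{total} errors ({rate:.0%})")
```

The same function can be reused with any other grouping key (for example a morphology grade or imaging device), provided that field is recorded for each case.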

Solutions:

- Implement Data Augmentation: If data drift is detected, augment your training dataset to better represent the variations in the new clinical environment. This may include simulating different image qualities or patient demographics.

- Initiate Model Retraining: Fine-tune your model on a small, carefully curated dataset from the new clinical site. With a low learning rate or frozen early layers, this helps the model adapt to local variations while limiting the loss of previously learned knowledge.

- Review Labeling Protocols: Work with clinical embryologists to re-validate the ground truth labels for a subset of problematic cases, ensuring they align with the original training standards.

Guide 2: Managing Unacceptable Computational Speed During Inference

Problem: The AI model's inference speed is too slow for practical clinical use, causing delays in the embryo transfer workflow or requiring prohibitively expensive computational hardware.

Diagnosis Steps:

- Profile Model Architecture: Use profiling tools to identify the specific layers or operations in your deep learning model that are the primary bottlenecks (e.g., specific convolutional layers).

- Assess Hardware Compatibility: Determine if the current deployment environment (e.g., CPU vs. GPU, memory bandwidth) is suitable for the model's architecture.

- Evaluate Model Complexity: Check the model's size (number of parameters) and computational complexity (FLOPs - Floating Point Operations). Excessively large models are often slow.

Solutions:

- Apply Model Optimization Techniques:

- Pruning: Remove redundant neurons or weights from the network that contribute little to the final decision [1] [2].

- Quantization: Convert the model's weights from 32-bit floating-point numbers to lower-precision formats (e.g., 16-bit floating point or 8-bit integers). This reduces model size (roughly fourfold for 8-bit weights) and typically increases inference speed [1] [2].

- Invest in Optimized Hardware: Deploy the model on hardware optimized for AI inference, such as GPUs with TensorRT or specialized edge AI processors, which can significantly reduce latency [2].
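As a rough illustration of what quantization does to weights, the sketch below applies symmetric per-tensor 8-bit quantization to a handful of made-up values and measures the round-trip error. It is a toy in plain Python, not the procedure used by any particular toolkit; production frameworks add calibration and per-channel scales.

```python
def quantize_int8(weights):
    """Symmetric per-tensor quantization of floats to the range [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale

def dequantize(quantized, scale):
    """Map integer codes back to approximate float weights."""
    return [v * scale for v in quantized]

weights = [0.82, -0.41, 0.05, -1.27, 0.33]  # illustrative values only
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print("int8 codes:", q)
print("scale:", scale, "max round-trip error:", max_error)
```

The round-trip error is bounded by half the scale, which is why weights with a few large outliers tend to quantize less gracefully: the outliers inflate the scale for every other weight.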

Guide 3: Resolving the Trade-off Between High Sensitivity and Specificity

Problem: Tuning your model to achieve higher sensitivity (detecting more viable embryos) results in an unacceptable drop in specificity (increased false positives of viability), or vice versa.

Diagnosis Steps:

- Analyze the ROC Curve: Plot the Receiver Operating Characteristic (ROC) curve to visualize the trade-off at different classification thresholds. The Area Under the Curve (AUC) provides a single measure of overall performance [3].

- Review Clinical Priorities: Consult with clinical partners to determine the acceptable balance for your specific use case. Is it more critical to avoid discarding a viable embryo (high sensitivity) or to maximize the chance of success for each transfer (high specificity)?

- Inspect Class Imbalance: Check if your training data has a significant imbalance between "viable" and "non-viable" embryo classes, which can bias the model.

Solutions:

- Adjust the Decision Threshold: Move the classification threshold away from the default value of 0.5. Lowering the threshold increases sensitivity, while raising it increases specificity.

- Use a Weighted Loss Function: During training, assign a higher cost to misclassifying the minority class. This encourages the model to pay more attention to those cases.

- Explore Advanced Architectures: Investigate models or loss functions specifically designed for imbalanced data or that directly optimize for the clinical metric of interest.
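The effect of moving the decision threshold can be checked directly on held-out scores. The following sketch uses made-up scores and labels (1 = viable, 0 = non-viable) and assumes a prediction is positive when the score is at or above the threshold.

```python
def sensitivity_specificity(scores, labels, threshold):
    """Compute (sensitivity, specificity) at a given decision threshold."""
    tp = sum(1 for s, y in zip(scores, labels) if y == 1 and s >= threshold)
    fn = sum(1 for s, y in zip(scores, labels) if y == 1 and s < threshold)
    tn = sum(1 for s, y in zip(scores, labels) if y == 0 and s < threshold)
    fp = sum(1 for s, y in zip(scores, labels) if y == 0 and s >= threshold)
    return tp / (tp + fn), tn / (tn + fp)

# Illustrative model scores and ground-truth labels.
scores = [0.92, 0.81, 0.67, 0.55, 0.48, 0.40, 0.33, 0.21]
labels = [1, 1, 0, 1, 0, 1, 0, 0]

results = {t: sensitivity_specificity(scores, labels, t) for t in (0.3, 0.5, 0.7)}
for t, (sens, spec) in results.items():
    print(f"threshold={t:.1f}  sensitivity={sens:.2f}  specificity={spec:.2f}")
```

On this toy data, lowering the threshold to 0.3 captures every viable case at the cost of specificity, while raising it to 0.7 does the opposite, which matches the direction described above.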

Frequently Asked Questions (FAQs)

FAQ 1: What are the typical performance benchmarks for AI in embryo selection? Performance can vary, but recent meta-analyses provide aggregate benchmarks. One systematic review reported that AI-based embryo selection methods achieved a pooled sensitivity of 0.69 and specificity of 0.62 in predicting implantation success, with an Area Under the Curve (AUC) of 0.7 [3]. Specific commercial systems, like Life Whisperer, have demonstrated an accuracy of 64.3% for predicting clinical pregnancy [3].

FAQ 2: My model has high accuracy but clinicians don't trust it. How can I improve interpretability? High accuracy alone is often insufficient for clinical adoption. To build trust, you should:

- Provide Explainable AI (XAI) Outputs: Use techniques like Grad-CAM or attention maps to generate visual explanations that highlight which image features (e.g., specific cell structures) the model used to make its decision.

- Conduct Rigorous Clinical Validation: Perform prospective studies that demonstrate the model's performance improves upon standard morphological assessment by embryologists.

- Integrate into Workflow Seamlessly: Ensure the AI tool fits into the existing clinical workflow without disrupting efficiency, presenting clear and actionable information to the embryologist [4].

FAQ 3: What are the key regulatory considerations when validating a clinical AI model? Regulatory bodies require robust evidence of both analytical and clinical validity.

- Analytical Validation: You must prove the model is accurate, reliable, and reproducible. This involves extensive benchmarking on diverse datasets to establish performance metrics like sensitivity, specificity, and precision [5].

- Clinical Validation: You must demonstrate that the model's predictions lead to clinically beneficial outcomes, such as improved pregnancy or live birth rates, through well-designed studies [4].

- Transparency and Monitoring: Be prepared to address potential ethical concerns, provide transparency into the AI's limitations, and implement plans for post-market surveillance to monitor performance over time [4].

FAQ 4: How can I reduce the computational cost of training without sacrificing performance? Several optimization techniques can achieve this balance:

- Hyperparameter Tuning: Use automated tools like Amazon SageMaker Automatic Model Tuning or Optuna to find the most efficient model configuration [1].

- Knowledge Distillation: Train a large, accurate "teacher" model, then use it to train a smaller, faster "student" model that retains most of the performance [1].

- Efficient Model Architectures: Start with inherently efficient architectures (e.g., MobileNet, EfficientNet) that are designed for performance and speed.

- Cloud-Based Optimized Hardware: Utilize cloud services that offer purpose-built accelerators (e.g., AWS Trainium for training, AWS Inferentia for inference) designed for cost-efficient model workloads [1].
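To make the knowledge-distillation idea more concrete, here is a minimal sketch of one common formulation of the student's loss: a weighted sum of the usual cross-entropy on the true label and a temperature-softened KL divergence to the teacher's output. The logits, temperature, and `alpha` weighting are illustrative assumptions, and real training would compute this inside a deep learning framework rather than in plain Python.

```python
import math

def softmax(logits, temperature=1.0):
    """Numerically stable softmax with optional temperature scaling."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def distillation_loss(student_logits, teacher_logits, true_class,
                      temperature=2.0, alpha=0.5):
    """alpha * hard-label cross-entropy + (1 - alpha) * T^2 * KL(teacher || student)."""
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    kl = sum(pt * math.log(pt / ps) for pt, ps in zip(p_teacher, p_student))
    hard = -math.log(softmax(student_logits)[true_class])
    return alpha * hard + (1 - alpha) * (temperature ** 2) * kl

teacher = [2.0, 0.5]  # illustrative two-class logits
agreeing = distillation_loss([2.0, 0.5], teacher, true_class=0)
disagreeing = distillation_loss([0.5, 2.0], teacher, true_class=0)
print(f"student agrees with teacher: {agreeing:.4f}")
print(f"student disagrees:           {disagreeing:.4f}")
```

A student whose outputs match the teacher's incurs only the hard-label term, so the loss rewards both correct predictions and imitation of the teacher's confidence pattern.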

Table 1: Diagnostic Performance Metrics of AI in Clinical Applications

| Clinical Application | Sensitivity | Specificity | Accuracy | AUC | Source / Model |
|---|---|---|---|---|---|
| Embryo Selection (IVF) | 0.69 (Pooled) | 0.62 (Pooled) | N/A | 0.70 (Pooled) | Diagnostic Meta-Analysis [3] |
| Embryo Selection (IVF) | N/A | N/A | 64.3% | N/A | Life Whisperer AI Model [3] |
| Embryo Selection (IVF) | N/A | N/A | 65.2% | 0.70 | FiTTE System [3] |
| E-FAST Exam (Trauma) | 81.25% (Hemoperitoneum) | 100% (Hemoperitoneum) | 96.2% (Hemoperitoneum) | 0.91 (Hemoperitoneum) | Buyurgan et al. [6] |
| Nanopore Sequencing (Meningitis) | 50.0% | 55.6% | 47.1% | N/A | Clinical Pathogen Detection [7] |

Table 2: Impact of Model Optimization Techniques on Performance and Efficiency

| Optimization Technique | Primary Effect | Typical Performance Trade-off | Best-Suited Deployment Environment |
|---|---|---|---|
| Pruning | Reduces model size and inference latency. | Potential for minimal accuracy loss (<1%), which can often be recovered with retraining. | Edge devices, mobile applications. |
| Quantization | Speeds up inference and reduces memory usage. | Slight, often negligible, accuracy drop for significant speed gains. | Mobile, IoT, and cloud CPUs. |
| Knowledge Distillation | Creates a smaller, faster model from a larger one. | Student model accuracy should be very close to the teacher model. | When a large, accurate model exists but is too slow for production. |
| Hyperparameter Tuning | Improves model accuracy and efficiency by finding optimal settings. | Generally improves performance without trade-offs, but is computationally expensive. | Used during model development before final deployment. |

Experimental Protocols

Protocol 1: Clinical Validation of an AI Model for Embryo Selection

Objective: To prospectively validate the diagnostic accuracy of an AI model for predicting clinical pregnancy from blastocyst images.

Materials: Time-lapse microscopy images of day-5 blastocysts, associated de-identified patient data, and confirmed clinical pregnancy outcomes.

Methodology:

- Data Curation: Collect a cohort of blastocyst images with linked clinical outcomes. Divide the dataset into a training set (e.g., 70%), a validation set (e.g., 15%), and a held-out test set (e.g., 15%).

- Model Training: Train a convolutional neural network (CNN) on the training set, using the validation set for hyperparameter tuning and to prevent overfitting.

- Performance Assessment: Apply the trained model to the held-out test set. Calculate sensitivity, specificity, accuracy, and AUC by comparing model predictions against the confirmed clinical pregnancy outcomes [3].

- Benchmarking: Compare the AI model's performance against the success rates of traditional embryo selection by trained embryologists to establish clinical utility.
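The 70/15/15 split in the data curation step might be implemented along the following lines. Grouping by patient is an added assumption, not something the protocol states; it is a common precaution so that sibling embryos from one patient do not land on both sides of a split. The `patient_id` and `image` fields are hypothetical.

```python
import random

def split_by_patient(records, fractions=(0.70, 0.15, 0.15), seed=0):
    """Split records into train/val/test so that no patient spans two splits."""
    patients = sorted({r["patient_id"] for r in records})
    random.Random(seed).shuffle(patients)
    n_train = round(len(patients) * fractions[0])
    n_val = round(len(patients) * fractions[1])
    groups = {
        "train": set(patients[:n_train]),
        "val": set(patients[n_train:n_train + n_val]),
        "test": set(patients[n_train + n_val:]),  # remainder goes to test
    }
    return {name: [r for r in records if r["patient_id"] in ids]
            for name, ids in groups.items()}

# Hypothetical cohort: 20 patients with 3 blastocyst images each.
records = [{"patient_id": f"P{i // 3:02d}", "image": f"img_{i}.png"}
           for i in range(60)]
splits = split_by_patient(records)
for name, rows in splits.items():
    print(name, len(rows), "images")
```

Fixing the seed keeps the held-out test set stable across experiments, which matters when several model versions are compared against the same benchmark.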

Protocol 2: Benchmarking Variant Calling Pipelines in Genomic Analysis

Objective: To evaluate the analytical performance (sensitivity, specificity, precision) of a germline variant calling pipeline for a clinical diagnostic assay.

Materials: Whole exome or genome sequencing data from reference samples with known truth sets (e.g., from the Genome in a Bottle consortium).

Methodology:

- Data Processing: Run the sequencing data through the variant calling pipeline (e.g., based on GATK HaplotypeCaller or SpeedSeq) to generate a VCF file of variant calls [5].

- Variant Comparison: Use a standardized benchmarking workflow (e.g., incorporating hap.py or vcfeval) to compare the pipeline's variant calls against the known truth set [5].

- Metric Calculation: The workflow calculates the number of true positives (TP), false positives (FP), true negatives (TN), and false negatives (FN). From these, it derives sensitivity (TP/(TP+FN)), specificity (TN/(TN+FP)), and precision (TP/(TP+FP)) across the genome and within specific regions of interest [5].

- Reporting: Generate a report detailing the pipeline's performance for different variant types (SNPs, InDels) and sizes, fulfilling regulatory requirements for assay validation [5].
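The metric definitions in the calculation step translate directly into code. The counts below are invented for illustration and are not taken from any benchmark; tools such as hap.py report these figures themselves, so this sketch is only meant to make the formulas concrete.

```python
def diagnostic_metrics(tp, fp, tn, fn):
    """Derive sensitivity, specificity, and precision from confusion counts."""
    return {
        "sensitivity": tp / (tp + fn),  # TP / (TP + FN)
        "specificity": tn / (tn + fp),  # TN / (TN + FP)
        "precision": tp / (tp + fp),    # TP / (TP + FP)
    }

# Illustrative counts only.
metrics = diagnostic_metrics(tp=950, fp=10, tn=9000, fn=50)
for name, value in metrics.items():
    print(f"{name}: {value:.4f}")
```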

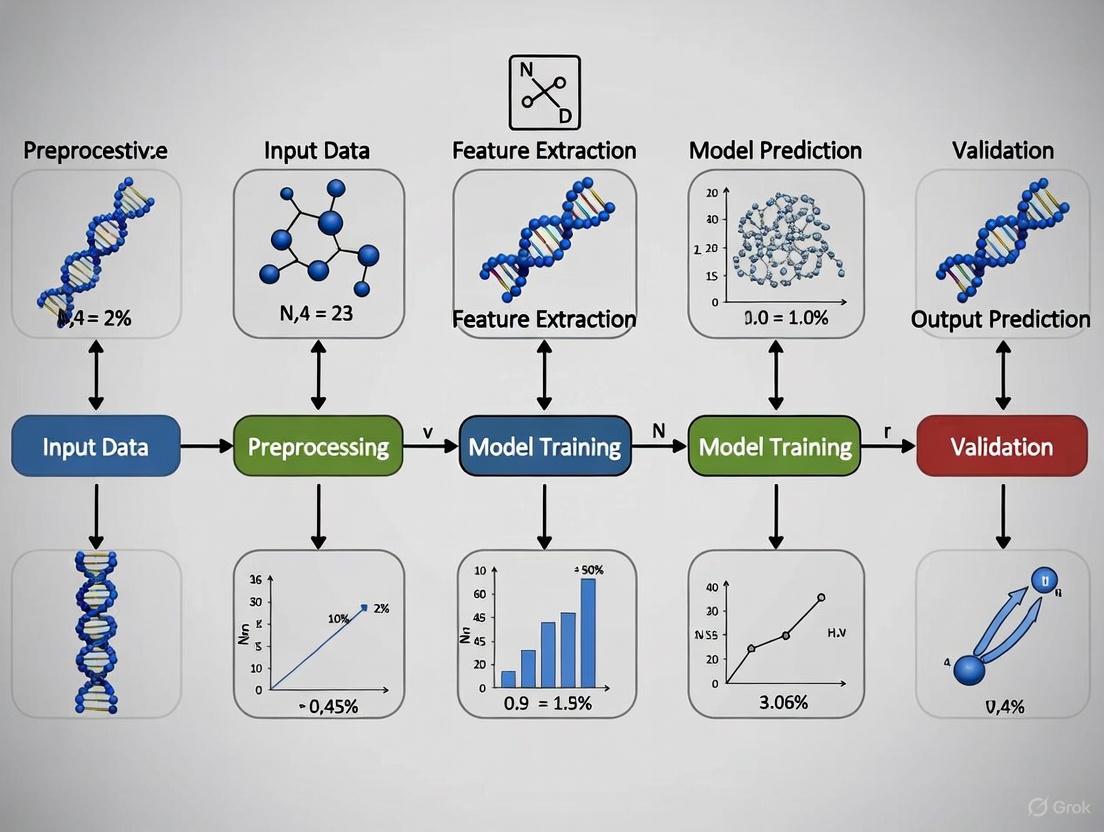

Workflow and Pathway Diagrams

Clinical AI Validation Workflow

Model Optimization Techniques Pipeline

The Scientist's Toolkit

Table 3: Essential Research Reagents and Tools for Clinical AI Research

| Tool / Reagent | Function / Purpose | Example Use Case |
|---|---|---|
| Time-lapse Microscopy Systems | Captures continuous images of embryo development for creating morphokinetic datasets. | Generating the primary image data used to train and validate embryo selection AI models. |
| Convolutional Neural Network (CNN) | A class of deep learning models designed for processing pixel data and automatically learning relevant image features. | The core architecture for analyzing embryo images and predicting viability. |
| Benchmarking Workflows (e.g., hap.py, vcfeval) | Standardized software tools for comparing variant calls against a known truth set to calculate performance metrics. | Essential for validating the analytical performance of genomic pipelines in a clinical lab [5]. |
| Model Optimization Tools (e.g., TensorRT, ONNX Runtime) | Software development kits (SDKs) and libraries designed to optimize trained models for faster inference and deployment on specific hardware. | Used to prune and quantize a large, accurate model for deployment in a real-time clinical setting [1] [2]. |
| Reference Truth Sets (e.g., GIAB) | Genomic datasets from reference samples where the true variants have been extensively validated by consortiums like Genome in a Bottle (GIAB). | Serves as the ground truth for benchmarking and validating the accuracy of clinical genomic pipelines [5]. |


The integration of artificial intelligence (AI) into in-vitro fertilization (IVF) represents a paradigm shift in reproductive medicine, offering the potential to enhance precision, standardize procedures, and improve clinical outcomes [8] [9]. This technical resource examines the global adoption and performance of AI in IVF, with a specific focus on the critical balance between model accuracy and operational speed. It provides troubleshooting guidance and foundational knowledge for researchers and clinicians navigating this evolving field.

Global AI Adoption & Performance: A Quantitative Snapshot

The tables below summarize key quantitative data on the adoption, performance, and perceived benefits of AI in IVF, based on recent global surveys and meta-analyses.

Table 1: Trends in Global AI Adoption among IVF Professionals [8]

| Metric | 2022 Survey (n=383) | 2025 Survey (n=171) |
|---|---|---|
| Overall AI Usage | 24.8% | 53.22% (Regular & Occasional) |
| Regular AI Use | Not Specified | 21.64% |
| Primary Application | Embryo Selection (86.3% of AI users) | Embryo Selection (32.75% of respondents) |
| Familiarity with AI | Indirect evidence of lower familiarity | 60.82% (at least moderate familiarity) |

Table 2: Diagnostic Performance of AI in Embryo Selection [3]. Data from a systematic review and meta-analysis.

| Performance Metric | Pooled Result |
|---|---|
| Sensitivity | 0.69 |
| Specificity | 0.62 |
| Positive Likelihood Ratio | 1.84 |
| Negative Likelihood Ratio | 0.5 |
| Area Under the Curve (AUC) | 0.7 |
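
The likelihood ratios in this table relate to sensitivity and specificity through the standard definitions LR+ = sensitivity / (1 - specificity) and LR- = (1 - sensitivity) / specificity. The sketch below recomputes them from the pooled values as a sanity check; the function name is illustrative. The recomputed LR+ comes out near 1.82 rather than the reported 1.84, which is plausible because meta-analyses typically pool each statistic separately rather than deriving one from another.

```python
def likelihood_ratios(sensitivity, specificity):
    """Return (LR+, LR-) from sensitivity and specificity."""
    lr_pos = sensitivity / (1 - specificity)
    lr_neg = (1 - sensitivity) / specificity
    return lr_pos, lr_neg

lr_pos, lr_neg = likelihood_ratios(0.69, 0.62)
print(f"LR+ = {lr_pos:.2f}, LR- = {lr_neg:.2f}")
```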

Table 3: Key Barriers to AI Adoption in IVF [8]

| Barrier | Percentage of 2025 Respondents (n=171) |
|---|---|
| Cost | 38.01% |
| Lack of Training | 33.92% |
| Ethical Concerns / Over-reliance on Technology | 59.06% |

Experimental Protocols & Methodologies

Protocol 1: Validating an AI Model for Embryo Implantation Prediction

This protocol is based on multi-center studies validating AI tools for embryo selection [10].

- 1. Objective: To validate the diagnostic accuracy of an AI model in predicting embryo implantation potential and to compare its performance against experienced embryologists.

- 2. Data Sourcing:

- Input Data: Collect time-lapse images or videos of blastocyst-stage embryos from multiple international IVF centers.

- Dataset Size: Use a large, diverse dataset (e.g., 2,075 embryo pairs from six centers) to ensure generalizability.

- Outcome Data: Pair each embryo's images or videos with its known clinical outcome: implantation success or failure.

- 3. Experimental Setup:

- Test Design: Employ a randomized, multicenter study design.

- Control Group: Embryos are selected for transfer by experienced embryologists using standard morphological grading (e.g., the Gardner scale).

- Test Group: Embryos are selected based on the AI model's recommendations.

- 4. Performance Analysis:

- Primary Endpoint: Compare clinical pregnancy rates between the AI-selected and embryologist-selected groups.

- Model Benchmarking: Compare the AI's performance against individual embryologists and an expert consensus.

- Statistical Measures: Calculate accuracy, sensitivity, specificity, and AUC to quantify predictive performance.

- 5. Troubleshooting:

- Challenge: AI model performance degrades with images from a new clinic due to different microscopes or settings.

- Solution: Implement a calibration step using a small set of standardized images from the new clinic to fine-tune the model and minimize center-specific bias.

Protocol 2: Optimizing Ovarian Stimulation Trigger Timing with Machine Learning

This protocol details the methodology for using AI to improve the timing of the ovulation trigger [11].

- 1. Objective: To determine if a machine-learning model can optimize the day of ovulation trigger to improve mature oocyte yield.

- 2. Model Development:

- Training Data: Train a predictive algorithm on a large dataset of completed ovarian stimulation cycles (e.g., >53,000 cycles from 11 centers).

- Input Features: Use clinical data from the day of potential triggering, including hormone levels (e.g., estradiol) and ultrasound follicle measurements.

- Output: The model predicts the expected yield of total oocytes and mature (MII) oocytes for three potential trigger days: the current day, the next day, and the day after.

- 3. Validation & Analysis:

- Performance Metrics: Validate model performance using metrics like R² (e.g., 0.81 for total oocytes) [11].

- Outcome Comparison: Compare cycle outcomes between cycles where the physician followed the AI recommendation versus those where they triggered earlier.

- Statistical Testing: Use statistical tests (e.g., t-tests) to determine if differences in oocyte and embryo yields are significant (p < 0.001).

- 4. Troubleshooting:

- Challenge: Physicians frequently trigger earlier than the AI model recommends, potentially due to clinical intuition or risk of ovarian hyperstimulation syndrome (OHSS).

- Solution: The AI tool should function as a decision-support system, presenting predictions for multiple days to inform—not replace—clinical judgment. Integrate OHSS risk scores into the algorithm.

The Scientist's Toolkit: Research Reagent Solutions

Table 4: Essential Materials for AI-Assisted IVF Research

| Item | Function in Research |
|---|---|
| Time-Lapse Incubation System (TLS) | Provides continuous, non-invasive imaging of embryo development, generating the morphokinetic data essential for training and deploying AI models. |
| Annotated Embryo Image Datasets | Large, diverse, and accurately labeled datasets of embryo images with known implantation outcomes are the fundamental substrate for training robust AI models. |
| AI Software Platform (e.g., EMA, Life Whisperer) | Commercial or proprietary software that contains the algorithms for embryo evaluation, sperm analysis, or follicular tracking. |
| Cloud Computing & Data Storage Infrastructure | Essential for handling the computational load of deep learning and for secure, centralized storage of large-scale, multi-center data. |
| Federated Learning Frameworks | Enables training AI models across multiple institutions without sharing sensitive patient data, addressing a major barrier in medical AI development [12]. |


Experimental Workflow Visualization

The following diagram illustrates a standard workflow for developing and validating an AI model for embryo selection.

AI Model Development Workflow for Embryo Selection

Frequently Asked Questions (FAQs) for Researchers

Q1: Our AI model for embryo selection shows high accuracy on internal validation but performs poorly on external data. What are the primary causes and solutions?

A: This is a common challenge related to model generalizability.

- Cause: Data Bias. The training data may lack diversity in patient demographics, laboratory protocols, or equipment (e.g., microscope types) [12] [4].

- Solution: Employ Federated Learning, which allows model training across multiple institutions without centralizing data, thus exposing the model to more varied data sources [12]. Ensure training datasets are large and represent a broad patient population.

- Cause: Overfitting. The model has learned noise and specific patterns from the training set that do not generalize.

- Solution: Implement rigorous regularization techniques during training and use external test sets from completely independent clinics for validation before clinical implementation.

Q2: How can we balance the need for a highly accurate, complex AI model with the speed required for clinical workflow efficiency?

A: The trade-off between accuracy and speed is central to clinical AI.

- Strategy 1: Model Optimization. After training a complex model, techniques like pruning and quantization can reduce its computational load and size, increasing inference speed with minimal accuracy loss.

- Strategy 2: Tiered Analysis. Use a fast, less complex model for initial, high-volume triage (e.g., initial embryo grading). A slower, more accurate model can then be used for final decision-making on a pre-selected subset.

- Strategy 3: Hardware Integration. Deploying models on dedicated, high-performance hardware within the clinic's infrastructure can significantly speed up processing times.
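The tiered-analysis strategy can be sketched as a two-stage cascade. Both scoring functions here are hypothetical stand-ins that simply look up precomputed numbers; in practice they would be a lightweight model and a heavier model. The `keep_top` cutoff is likewise an illustrative choice.

```python
def tiered_ranking(embryos, fast_score, slow_score, keep_top=3):
    """Shortlist with the fast model, then rank the shortlist with the slow model."""
    shortlisted = sorted(embryos, key=fast_score, reverse=True)[:keep_top]
    return sorted(shortlisted, key=slow_score, reverse=True)

# Hypothetical embryos with precomputed scores standing in for model outputs.
embryos = [
    {"id": "E1", "quick": 0.40, "detailed": 0.35},
    {"id": "E2", "quick": 0.90, "detailed": 0.60},
    {"id": "E3", "quick": 0.75, "detailed": 0.80},
    {"id": "E4", "quick": 0.20, "detailed": 0.90},
    {"id": "E5", "quick": 0.65, "detailed": 0.55},
]

slow_calls = []  # records which embryos reach the expensive model

def fast_score(embryo):
    return embryo["quick"]

def slow_score(embryo):
    slow_calls.append(embryo["id"])
    return embryo["detailed"]

ranked = tiered_ranking(embryos, fast_score, slow_score)
print("final ranking:", [e["id"] for e in ranked])
print("slow model evaluated:", len(slow_calls), "of", len(embryos))
```

In this toy data, embryo E4 would have scored highest on the detailed model but never reaches it because the fast model ranks it last. That illustrates the main risk of triage: the first stage needs high sensitivity, so the shortlist size should be validated rather than chosen for speed alone.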

Q3: What are the key ethical considerations and potential biases we must address when developing AI for IVF?

A: Ethical and bias-related issues are critical for responsible AI deployment.

- Algorithmic Bias: AI models can perpetuate and even amplify existing biases in training data. If trained predominantly on data from specific ethnic or age groups, performance may be suboptimal for other groups, exacerbating health disparities [9] [13].

- Mitigation: Intentionally curate diverse training datasets and perform rigorous subgroup analysis to test for performance disparities.

- Transparency & Explainability: Many AI models are "black boxes." Clinicians may be hesitant to trust a recommendation without understanding the reasoning.

- Mitigation: Focus on developing explainable AI (XAI) techniques that highlight the image features or data points influencing the model's decision [12] [4].

- Over-reliance: A significant risk is that embryologists may defer to the AI's judgment, potentially overlooking errors.

- Mitigation: Design AI systems as decision-support tools, not autonomous decision-makers. The final clinical decision must remain with the human expert [8] [14] [4].

FAQs: Core Architectural Concepts

Q1: What is the fundamental difference between Traditional Machine Learning and Deep Learning for fertility research?

A1: The choice between Traditional Machine Learning and Deep Learning involves a direct trade-off between interpretability and automatic feature discovery, which is crucial in a sensitive field like fertility research.

- Traditional Machine Learning (e.g., Logistic Regression, Random Forest, XGBoost) requires researchers to manually define and engineer relevant features (e.g., follicle size, hormone levels) from the raw data. These models are typically more interpretable, computationally less intensive, and can be effective with smaller datasets [15]. For example, a study predicting natural conception used an XGB Classifier, achieving an accuracy of 62.5% [16].

- Deep Learning (e.g., CNNs, RNNs) automates feature extraction by learning hierarchical data representations through multiple network layers. This is powerful for complex, unstructured data like embryo time-lapse videos or ultrasound images, where manual feature engineering is difficult. However, these models are often seen as "black boxes," require large datasets, and are computationally expensive [15] [17].

Q2: My deep learning model for embryo classification performs well on training data but poorly on new clinical images. What is happening?

A2: This is a classic case of overfitting [18]. Your model has likely memorized the noise and specific patterns in your training data rather than learning generalizable features. Key strategies to overcome this are:

- Regularization & Dropout: Apply regularization such as weight decay or dropout; dropout randomly deactivates neurons during training to prevent the model from over-relying on any single node [18] [19].

- Data Augmentation: Artificially expand your training dataset using techniques like rotation, flipping, or adjusting brightness on existing images to make the model more robust [18].

- Early Stopping: Halt the training process when the model's performance on a validation dataset stops improving, preventing it from learning the training data too specifically [18].
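Early stopping is straightforward to express in code. The sketch below assumes a list of per-epoch validation losses is already available and uses a `patience` parameter (an illustrative choice of 3 epochs) to decide when to halt; deep learning frameworks ship their own callbacks for this, so the function is only a demonstration of the logic.

```python
def early_stopping_epoch(val_losses, patience=3, min_delta=0.0):
    """Return (stop_epoch, best_epoch), both 0-indexed.

    Training stops once `patience` epochs pass without the validation
    loss improving on the best value by more than `min_delta`.
    """
    best_loss = float("inf")
    best_epoch = -1
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss - min_delta:
            best_loss, best_epoch = loss, epoch
        elif epoch - best_epoch >= patience:
            return epoch, best_epoch
    return len(val_losses) - 1, best_epoch

# Illustrative validation losses: improvement stalls after the fourth epoch.
val_losses = [0.70, 0.61, 0.55, 0.52, 0.53, 0.54, 0.56, 0.58]
stop_epoch, best_epoch = early_stopping_epoch(val_losses)
print(f"stop at epoch {stop_epoch}, restore weights from epoch {best_epoch}")
```

Restoring the checkpoint from the best epoch, rather than keeping the weights at the stopping epoch, is what actually prevents the later overfit epochs from affecting the deployed model.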

Q3: How can I make my large fertility prediction model fast enough for real-time clinical use without sacrificing accuracy?

A3: Several AI model optimization techniques can significantly improve inference speed:

- Pruning: Identifies and removes unnecessary weights or neurons in the network that contribute little to the final prediction, creating a smaller and faster model [20] [2] [21].

- Quantization: Reduces the numerical precision of the model's parameters (e.g., from 32-bit floating-point to 8-bit integers). This shrinks the model size and speeds up computation, which is ideal for deployment on edge devices [20] [2] [21].

- Knowledge Distillation: Trains a compact "student" model to mimic the performance of a larger, more accurate "teacher" model, preserving much of the accuracy while being far more efficient [21].
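As one concrete instance of pruning, the sketch below applies unstructured magnitude pruning: the weights with the smallest absolute values are set to zero until a target sparsity is reached. The weight values and the 50% sparsity level are illustrative, and this is only one of several pruning criteria in use.

```python
def magnitude_prune(weights, sparsity):
    """Zero out the smallest-magnitude fraction `sparsity` of the weights."""
    n_prune = int(len(weights) * sparsity)
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    to_zero = set(order[:n_prune])
    return [0.0 if i in to_zero else w for i, w in enumerate(weights)]

weights = [0.9, -0.02, 0.4, 0.01, -0.7, 0.05, 0.3, -0.003]  # illustrative
pruned = magnitude_prune(weights, sparsity=0.5)
print(pruned)
```

Zeroed weights only translate into faster inference when the runtime or hardware can exploit sparsity, which is one reason structured pruning (removing whole neurons or channels) is often preferred for deployment.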

Troubleshooting Guides

Problem: Model Performance Degradation Over Time

Symptoms: A model that was once accurate for predicting ovarian response now shows declining performance on new patient data.

Diagnosis: This is likely model drift, where the statistical properties of the real-world data have changed over time compared to the data the model was originally trained on [21].

Resolution Protocol:

- Data Verification: Implement a continuous data monitoring pipeline to compare incoming data distributions with the original training data.

- Retraining Schedule: Establish a regular schedule for retraining the model with newly collected, validated data.

- Transfer Learning: Consider using a pre-trained model and fine-tuning its final layers on the new data, which can be more efficient than training from scratch [20] [21].
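The data verification step could use a drift statistic such as the Population Stability Index (PSI), which is one option among several and is not named in the source. The sketch below bins a single feature using the reference data's range and compares the bin proportions; the feature values are invented, and the often-quoted rule of thumb that a PSI above about 0.25 signals a substantial shift is a convention, not a validated clinical threshold.

```python
import math

def population_stability_index(reference, current, n_bins=5, eps=1e-6):
    """PSI between two samples of one feature, using equal-width reference bins."""
    lo, hi = min(reference), max(reference)
    width = (hi - lo) / n_bins

    def proportions(values):
        counts = [0] * n_bins
        for v in values:
            idx = int((v - lo) / width) if width > 0 else 0
            idx = max(0, min(n_bins - 1, idx))  # clamp out-of-range values
            counts[idx] += 1
        # eps avoids log(0) when a bin is empty
        return [max(c / len(values), eps) for c in counts]

    ref_p, cur_p = proportions(reference), proportions(current)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref_p, cur_p))

# Illustrative feature: patient age at training time vs. a later, older cohort.
reference = [float(age) for age in range(20, 40)]
shifted = [age + 6 for age in reference]

psi_same = population_stability_index(reference, reference)
psi_shifted = population_stability_index(reference, shifted)
print(f"PSI (same data): {psi_same:.3f}")
print(f"PSI (shifted cohort): {psi_shifted:.3f}")
```

Running this per feature on each new batch of data gives a simple trigger for the retraining schedule described above.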

Problem: The "Black Box" Problem in Clinical Deployment

Symptoms: Clinicians are hesitant to trust an AI model's recommendation for embryo selection because the reasoning behind the decision is not transparent [17] [22].

Diagnosis: Lack of model interpretability, a common challenge with complex deep learning models.

Resolution Protocol:

- Model Selection: Prioritize "explainable AI" (XAI) methods or inherently more interpretable models where possible. For instance, research into follicle size optimization has used explainable AI to identify the specific follicle sizes most likely to yield mature oocytes, making the model's reasoning clear to clinicians [22].

- Utilize Interpretation Tools: Employ techniques like SHAP (SHapley Additive exPlanations) or LIME (Local Interpretable Model-agnostic Explanations) to generate post-hoc explanations for individual predictions.

- Clinical Validation & Collaboration: Conduct robust prospective validation studies to build trust and foster collaboration between AI engineers and clinicians to integrate AI as a decision-support tool, not a replacement [22].

Table 1: Performance Comparison of Machine Learning Models in a Fertility Study [16]

| Model Name | Accuracy | Sensitivity | Specificity | ROC-AUC |
|---|---|---|---|---|
| XGB Classifier | 62.5% | Not Reported | Not Reported | 0.580 |
| Logistic Regression | Not Reported | Not Reported | Not Reported | Not Reported |
| Random Forest | Not Reported | Not Reported | Not Reported | Not Reported |

Study context: This study used 63 sociodemographic and sexual health variables from 197 couples to predict natural conception. The limited performance highlights the complexity of fertility prediction.

Table 2: Comparison of AI Optimization Techniques [20] [2] [21]

| Technique | Primary Benefit | Potential Drawback | Best Suited For |
|---|---|---|---|
| Pruning | Reduces model size and inference time. | May require fine-tuning to recover accuracy. | Deployment on mobile or edge devices. |
| Quantization | Decreases memory usage and power consumption. | Can lead to a slight loss in precision. | Real-time inference on hardware with limited resources. |
| Hyperparameter Tuning | Maximizes model accuracy and training efficiency. | Computationally intensive and time-consuming. | The initial model development phase to find the optimal configuration. |
| Knowledge Distillation | Creates a compact model that retains much of a larger model's knowledge. | Requires a high-quality, large teacher model. | Distributing models to clinical settings with lower computational power. |

Experimental Protocols

Protocol: Developing a Machine Learning Model for Natural Conception Prediction

Objective: To predict the likelihood of natural conception among couples using sociodemographic and sexual health data via machine learning [16].

Methodology:

- Data Collection:

- Cohorts: Recruit two distinct groups: fertile couples (achieved conception within one year) and infertile couples (unable to conceive after 12 months).

- Variables: Collect 63 parameters from both partners, including age, BMI, menstrual cycle characteristics, medical history, lifestyle factors (caffeine, smoking), and varicocele presence [16].

- Data Preprocessing:

- Apply inclusion/exclusion criteria to ensure clean cohort definitions.

- Use Permutation Feature Importance to select the 25 most predictive variables from the initial 63 [16].

- Model Training & Evaluation:

- Models: Train multiple models, such as XGB Classifier, Random Forest, and Logistic Regression.

- Training Scheme: Split data into 80% for training and 20% for testing.

- Metrics: Evaluate performance using accuracy, sensitivity, specificity, and ROC-AUC, with cross-validation to assess robustness [16].
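The evaluation metrics named in this protocol can be sketched in plain Python as follows. The labels and scores are made-up values for illustration, and the helper names `binary_metrics` and `roc_auc` are assumptions; in practice a library implementation would normally be used.

```python
from typing import Dict, List


def binary_metrics(y_true: List[int], y_pred: List[int]) -> Dict[str, float]:
    """Accuracy, sensitivity and specificity from binary labels (1 = conceived)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    return {
        "accuracy": (tp + tn) / len(pairs),
        "sensitivity": tp / (tp + fn) if (tp + fn) else float("nan"),
        "specificity": tn / (tn + fp) if (tn + fp) else float("nan"),
    }


def roc_auc(y_true: List[int], scores: List[float]) -> float:
    """Probability that a random positive outscores a random negative (ties count half)."""
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


# Hypothetical test-set labels and model scores.
y_true = [1, 1, 1, 0, 0, 0, 1, 0]
scores = [0.9, 0.8, 0.4, 0.3, 0.6, 0.2, 0.7, 0.5]
y_pred = [1 if s >= 0.5 else 0 for s in scores]

metrics = binary_metrics(y_true, y_pred)
auc = roc_auc(y_true, scores)
```

Reporting ROC-AUC alongside accuracy matters here because accuracy depends on the chosen threshold, while ROC-AUC summarizes ranking quality across all thresholds.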

Protocol: AI Workflow for Follicle Size Optimization in IVF

Objective: To use explainable AI to identify optimal follicle sizes that maximize mature oocyte yield and live birth rates during ovarian stimulation [22].

Methodology:

- Data Curation: Gather a large dataset (e.g., from over 19,000 patients) containing detailed follicle tracking data from ultrasound scans and corresponding cycle outcomes (oocyte maturity, live birth) [22].

- Model Development & Analysis:

- Employ explainable AI methods to analyze the entire cohort of follicles, moving beyond the simplification of using only lead follicles.

- The model identifies the specific size range of follicles most likely to yield mature oocytes post-trigger.

- Validation: Correlate the proportion of follicles within the AI-identified optimal range with key outcomes, specifically mature oocyte yield and live birth rates, to validate the clinical utility of the findings [22].

Workflow and Pathway Diagrams

The Scientist's Toolkit: Research Reagent Solutions

Table 3: Essential "Reagents" for Fertility AI Research

| Item | Function in the AI "Experiment" |

|---|---|

| Pre-trained Models (e.g., ImageNet, BERT) | Models already trained on massive general datasets. They serve as a starting point for transfer learning, reducing the data and time needed to develop specialized models for tasks like analyzing embryo images or medical literature [15] [21]. |

| Optimization Frameworks (e.g., TensorRT, ONNX Runtime) | Software tools used to "refine" the final model. They implement techniques like pruning and quantization to make models faster and smaller for clinical deployment [20] [2] [21]. |

| Data Augmentation Libraries | Algorithms that artificially expand training datasets by creating slightly modified versions of existing images (e.g., rotations, flips, contrast changes). This helps improve model robustness and combat overfitting [18] [21]. |

| Hyperparameter Tuning Tools (e.g., Optuna) | Automated systems that search for the best combination of model settings (hyperparameters), much like optimizing a chemical reaction's conditions to maximize yield (accuracy) [20] [21]. |

| Explainable AI (XAI) Toolkits | Software packages that help interpret the predictions of complex "black box" models. This is crucial for building clinical trust and understanding the model's reasoning, for example, in embryo selection [22]. |


Troubleshooting Guides

Poor Model Generalizability to New Clinical Sites

Problem: Your model, trained on data from a single fertility center, performs poorly when validated on data from a new clinic, showing significant performance degradation.

Explanation: This is often caused by a distribution shift between your training data and the new site's data. Variations in laboratory protocols, equipment, patient demographics, or embryo grading practices can create this shift, making the model's learned patterns less applicable.

Solution:

- Action 1: Enhance Dataset Representativeness: Proactively collect training data from multiple clinical sites with varying protocols and patient populations. Ensure the data encompasses the diversity you expect in real-world deployment [23].

- Action 2: Implement Rigorous External Validation: Before deployment, always test your model on a completely held-out dataset from a different fertility center. This provides a realistic estimate of real-world performance [23] [12].

- Action 3: Adhere to Regulatory Guidance: Follow emerging regulatory frameworks, such as the FDA's credibility assessment, which emphasizes characterizing training data and ensuring its representativeness for the intended patient population [24] [25].

High Model Instability and Inconsistent Predictions

Problem: When retrained on the same data with different random seeds, your model produces vastly different embryo rankings, undermining clinical reliability.

Explanation: This instability indicates that the model is highly sensitive to small changes in initial training conditions. This is a fundamental issue in some AI architectures for IVF, leading to low agreement between replicate models and a high frequency of critical errors, such as ranking non-viable embryos as top candidates [23].

Solution:

- Action 1: Quantify Instability Metrics: Systematically evaluate model consistency. Train multiple replicate models (e.g., 50x with different seeds) and measure the agreement in their rankings using metrics like Kendall’s W. Also, track the critical error rate—how often poor-quality embryos are top-ranked [23].

- Action 2: Explore Alternative Modeling Approaches: If using Single Instance Learning (SIL) models, investigate whether more stable AI frameworks or architectures are available. The high variability in SIL models may necessitate a different methodological approach [23].

- Action 3: Prioritize Interpretability: Use tools like SHAP (SHapley Additive exPlanations) or gradient-weighted class activation mapping to understand the divergent decision-making strategies of unstable models. This can provide clues for improving model design [23] [26].
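The agreement metric mentioned in Action 1 can be sketched as below. This is a minimal version of Kendall's W that assumes no tied scores (no tie correction is applied); the replicate scores are invented for illustration and the function names are assumptions.

```python
from typing import List


def scores_to_ranks(scores: List[float]) -> List[int]:
    """Rank 1 goes to the highest score. Assumes no ties."""
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    ranks = [0] * len(scores)
    for rank, idx in enumerate(order, start=1):
        ranks[idx] = rank
    return ranks


def kendalls_w(rankings: List[List[int]]) -> float:
    """Kendall's coefficient of concordance for m rankings of the same n items."""
    m, n = len(rankings), len(rankings[0])
    rank_sums = [sum(r[i] for r in rankings) for i in range(n)]
    mean_sum = m * (n + 1) / 2
    s = sum((x - mean_sum) ** 2 for x in rank_sums)
    return 12 * s / (m ** 2 * (n ** 3 - n))


# Hypothetical live-birth probabilities for 4 embryos from 3 replicate models.
replicate_scores = [
    [0.9, 0.5, 0.2, 0.1],
    [0.8, 0.6, 0.3, 0.2],
    [0.2, 0.9, 0.4, 0.7],
]
rankings = [scores_to_ranks(s) for s in replicate_scores]
w = kendalls_w(rankings)
```

A value near 1 means the replicate models rank a patient's embryos almost identically; a value near 0 means the rankings are essentially unrelated.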

Inadequate Transparency and Reporting for Regulatory Scrutiny

Problem: Your model's development and performance details are insufficiently documented, making it difficult to satisfy internal review boards or regulatory body requirements.

Explanation: A lack of methodological transparency is a common challenge with complex AI models. Regulators are increasingly focusing on this issue, requiring detailed disclosures about data provenance, model development, and performance metrics to assess credibility and potential biases [27] [25] [28].

Solution:

- Action 1: Adopt a Comprehensive Documentation Framework: Create a detailed report covering:

- Data Management: Sources, collection methods, cleaning, annotation procedures, and demographic characteristics [25] [28].

- Model Development: Architecture, features, hyperparameters, and training protocols [25].

- Validation Results: Performance metrics (sensitivity, specificity, AUROC) on independent test sets, with subgroup analyses [29] [28].

- Action 2: Use Model Cards: Consider using a "model card," a concise document summarizing the model's intended use, performance, limitations, and training data, as suggested by the FDA for medical devices [25].

- Action 3: Follow Reporting Guidelines: Adhere to established guidelines like TRIPOD+AI for clinical prediction models to ensure all critical aspects of development and validation are reported [29].
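As one possible starting point for Action 2, a model card can be kept as a small structured record and exported to JSON. The field names below are assumptions chosen to mirror the items listed above (intended use, performance, limitations, training data); they are not a regulatory template, and the placeholder strings must be replaced with real, verified content.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import Dict, List


@dataclass
class ModelCard:
    """Hypothetical minimal model card; extend to match your reporting needs."""
    model_name: str
    intended_use: str
    training_data: str
    performance: Dict[str, str] = field(default_factory=dict)
    limitations: List[str] = field(default_factory=list)


card = ModelCard(
    model_name="<model name and version>",
    intended_use="<clinical task, target population, intended users>",
    training_data="<sources, sites, date range, demographics>",
    performance={
        "sensitivity": "<value on independent test set>",
        "specificity": "<value on independent test set>",
        "AUROC": "<value on independent test set>",
    },
    limitations=["<known failure modes and populations not covered>"],
)

# Serialize for inclusion in documentation or a submission package.
card_json = json.dumps(asdict(card), indent=2)
```

Keeping the card in version control next to the model artifacts makes it easier to update whenever the model is retrained.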

Frequently Asked Questions (FAQs)

Q1: What is the minimum dataset size required to train a reliable fertility AI model? There is no universal minimum; the required size depends on model complexity and task difficulty. Performance is linked more closely to data quality and diversity than to sheer volume, so the priority is a representative dataset: a smaller, well-annotated, multi-center dataset is generally more valuable than a large, homogeneous, single-center one [23] [12]. For reference, one study used 10,713 embryos for training and 648 embryos from a different center for external testing [23].

Q2: How can I assess the quality of my training dataset? Evaluate your dataset against these criteria:

- Representativeness: Does it reflect the target patient population? Analyze demographics and clinical characteristics [24].

- Annotation Quality: Are the labels (e.g., live birth outcomes, embryo grades) accurate and consistent? Using a single, expert team for annotations can reduce variability [23].

- Completeness: Is there a high proportion of missing values for key features?

- Balance: For classification tasks, is there a significant class imbalance? Techniques like stratification may be needed.
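Two of these criteria, completeness and balance, are easy to check programmatically. The sketch below uses plain Python and a tiny made-up dataset; the feature names and the helper names `missing_fraction` and `class_balance` are illustrative assumptions.

```python
from collections import Counter
from typing import Dict, Hashable, List, Optional


def missing_fraction(rows: List[Dict[str, Optional[float]]], feature: str) -> float:
    """Share of records where the feature is absent or None."""
    return sum(1 for r in rows if r.get(feature) is None) / len(rows)


def class_balance(labels: List[Hashable]) -> Dict[Hashable, float]:
    """Share of records in each outcome class."""
    counts = Counter(labels)
    return {label: n / len(labels) for label, n in counts.items()}


# Hypothetical records; None marks a missing value.
rows = [
    {"female_age": 34, "amh": 2.1, "live_birth": 1},
    {"female_age": 39, "amh": None, "live_birth": 0},
    {"female_age": None, "amh": None, "live_birth": 0},
    {"female_age": 31, "amh": 3.4, "live_birth": 0},
]

missing = {f: missing_fraction(rows, f) for f in ("female_age", "amh")}
balance = class_balance([r["live_birth"] for r in rows])
```

Features with a high missing fraction may need imputation or exclusion, and a skewed class balance is a signal to stratify the train/test split.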

Q3: What are the most common data-related pitfalls in fertility AI research?

- Single-Center Data: Models trained on data from one clinic often fail to generalize [23] [12].

- Insufficient External Validation: Relying only on internal validation (e.g., a simple train-test split from the same source) overestimates real-world performance [12].

- Poor Transparency: Failing to document data sources, demographics, and model details. Transparency is now a major focus of regulatory bodies [28].

- Ignoring Model Instability: Not testing for consistency across multiple training runs, which is crucial for reliable clinical ranking [23].

Q4: Our model works well in internal tests but fails in clinical deployment. What went wrong? This "deployment gap" typically stems from overfitting to the training environment and a failure to account for real-world variability. Internal tests may not capture the full spectrum of data quality, patient profiles, and operational workflows found in a live clinical setting. The solution is to perform robust external validation on data from completely independent sites before deployment [23] [12].

The following table consolidates key quantitative findings from recent studies on data and model performance in fertility AI.

Table 1: Quantitative Evidence on Data and Model Performance in Fertility AI

| Study Focus | Key Metric | Reported Value / Finding | Implication for Data & Model Performance |

|---|---|---|---|

| AI Model Stability in Embryo Selection [23] | Consistency in embryo ranking (Kendall's W) | ~0.35 (where 0=no agreement, 1=perfect agreement) | Highlights significant instability in model rankings even with identical training data. |

| AI Model Stability in Embryo Selection [23] | Critical Error Rate | ~15% | High rate of non-viable embryos being top-ranked, a major clinical risk. |

| AI Model Stability in Embryo Selection [23] | Performance on External Data | Error variance increased by 46.07%² | Demonstrates high sensitivity to distribution shifts between datasets. |

| Transparency in FDA-Reviewed AI Devices [28] | Average Transparency (ACTR Score) | 3.3 out of 17 points | Indicates a severe lack of transparency in reporting model characteristics and data. |

| Transparency in FDA-Reviewed AI Devices [28] | Devices Reporting Clinical Studies | 53.1% | Nearly half of approved AI devices lack publicly reported clinical studies. |

| Transparency in FDA-Reviewed AI Devices [28] | Devices Reporting Any Performance Metric | 48.4% | Over half of devices do not report basic performance metrics, hindering evaluation. |

| Machine Learning for Blastocyst Yield Prediction [29] | Model Performance (R²) | 0.673 - 0.676 (Machine Learning) vs. 0.587 (Linear Regression) | Machine learning models better capture complex, non-linear relationships in IVF data. |

| Machine Learning for Blastocyst Yield Prediction [29] | Model Accuracy for Multi-class Prediction | 0.675 - 0.71 | Demonstrates the predictive potential of ML with structured, cycle-level data. |

Experimental Protocol: Evaluating AI Model Stability

This protocol is based on a study that systematically investigated the instability of AI models for embryo selection [23].

Objective: To assess the stability and reliability of a Single Instance Learning (SIL) model for embryo rank ordering.

Materials & Methods:

- Datasets: Use at least two independent, retrospective datasets from different fertility centers.

- Primary Dataset: For model training and validation (e.g., 10,713 embryos from 1,258 patients).

- External Test Dataset: For final evaluation only (e.g., 648 embryos from 53 patients).

- Model Training:

- Define a fixed model architecture (e.g., a convolutional neural network).

- Train 50 replicate models using the exact same architecture and training data, but with different random seeds for weight initialization.

- Evaluation:

- Rank Order Consistency: For each patient cohort in the test sets, generate embryo rank orders based on the model's live-birth probability output from all 50 replicates. Calculate Kendall’s W coefficient to measure agreement between the rankings.

- Critical Error Rate: Determine the frequency at which a model ranks a low-quality (e.g., degenerate) embryo as the top candidate when a higher-quality blastocyst is available.

- Interpretability Analysis: Use techniques like gradient-weighted class activation mapping to visualize the image regions influencing each model's decision, helping to identify divergent focus areas.
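The critical error rate in the evaluation step can be computed along the following lines. This is a sketch under one reading of the definition above (the top-ranked embryo is low quality while at least one higher-quality embryo exists in the same cohort); the data layout, the example values, and the function names are assumptions.

```python
from typing import List, Tuple

# (predicted live-birth probability, is_low_quality) for one embryo
Embryo = Tuple[float, bool]


def is_critical_error(cohort: List[Embryo]) -> bool:
    """True if the top-scored embryo is low quality although a better one exists."""
    top_score, top_is_low = max(cohort, key=lambda e: e[0])
    better_available = any(not low for _, low in cohort)
    return top_is_low and better_available


def critical_error_rate(cohorts: List[List[Embryo]]) -> float:
    """Fraction of patient cohorts in which a critical error occurs."""
    return sum(is_critical_error(c) for c in cohorts) / len(cohorts)


# Hypothetical cohorts for three patients.
cohorts = [
    [(0.9, False), (0.2, True)],   # good embryo ranked first: no error
    [(0.4, False), (0.8, True)],   # low-quality embryo ranked first: error
    [(0.7, True), (0.1, True)],    # no better embryo available: not counted as error
]
rate = critical_error_rate(cohorts)
```

Computing this rate separately for each of the 50 replicates shows whether critical errors are rare events or a systematic property of the architecture.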

The workflow for this experiment is summarized in the following diagram:

The Scientist's Toolkit: Research Reagent Solutions

Table 2: Essential Materials and Computational Tools for Fertility AI Research

| Item / Tool Name | Function / Application in Research |

|---|---|

| Time-Lapse Microscopy Systems (e.g., Embryoscope) | Generates high-volume, time-series imaging data of embryo development, which is the primary input for many deep learning models in embryo selection. |

| Convolutional Neural Networks (CNNs) | A class of deep learning models, particularly effective for analyzing visual imagery like embryo pictures. They are commonly used in both research and commercial embryo assessment platforms [23]. |

| SHapley Additive exPlanations (SHAP) | A game theory-based method for interpreting the output of any machine learning model. It is used to explain feature importance, helping researchers understand which factors (e.g., embryo morphology) most influence the model's prediction [26]. |

| XGBoost / LightGBM | Powerful machine learning algorithms based on gradient boosting. They are highly effective for structured data tasks, such as predicting cycle-level outcomes (e.g., blastocyst yield) from clinical and morphological features, and often offer high performance and interpretability [29] [26]. |

| Prophet | A time-series forecasting procedure developed by Facebook, useful for analyzing and projecting long-term fertility trends based on population-level data [26]. |

| Model Cards | A framework for transparent reporting of model characteristics, intended use, and performance metrics. Their use is encouraged by regulatory bodies like the FDA to improve communication between developers and users [25]. |


In the context of assisted reproductive technology (ART), the integration of artificial intelligence (AI) into clinical workflows represents a paradigm shift from retrospective analysis to real-time decision support. For researchers and drug development professionals, a central thesis is emerging: the ultimate clinical value of an AI model is contingent not only on its accuracy but also on its speed of integration into existing clinical workflows. AI tools that generate predictions in real time (<1 second) are essential to avoid disrupting the carefully timed processes of ovarian stimulation and embryo culture [30]. The primary challenge is to balance this requisite for instantaneous processing with the rigorous, evidence-based accuracy demanded of a medical intervention. This technical support document outlines the critical troubleshooting steps, experimental protocols, and key reagents for developing and validating AI solutions that meet these dual demands of speed and accuracy.

Troubleshooting Guides & FAQs

FAQ 1: Our AI model is accurate on retrospective data, but clinicians report it disrupts their workflow. What are the primary integration points we should optimize for speed?

Answer: The most critical speed-sensitive integration points in the ART workflow involve real-time monitoring and triggering decisions. Seamless integration is achieved through API-based EMR integration that avoids multiple logins, manual data entry, or switching between screens [31].

- Troubleshooting Steps:

- Verify EMR API Connectivity: Confirm that your AI tool uses the clinic's EMR API for real-time, bidirectional data exchange. This eliminates manual data transfer, a major source of delay and error [31].

- Benchmark Data Retrieval and Prediction Time: Measure the time from a clinician opening a patient's record in the EMR to the AI insights being displayed. This end-to-end latency should be under one second to be considered real-time [30].

- Profile Model Inference Speed: Isolate the AI model's prediction time. For image-based models (e.g., embryo analysis), optimize the deep learning architecture (e.g., using lighter-weight CNNs) to reduce processing time without sacrificing predictive performance.
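The benchmarking step above can be sketched with the standard library alone. Here `fake_predict` is a hypothetical stand-in for the real end-to-end call (EMR fetch plus model inference), and the one-second budget is the real-time threshold cited in this section.

```python
import statistics
import time
from typing import Callable, Dict


def benchmark(fn: Callable[[], object], n_runs: int = 50) -> Dict[str, float]:
    """Time repeated calls and summarize latency in seconds."""
    latencies = []
    for _ in range(n_runs):
        start = time.perf_counter()
        fn()
        latencies.append(time.perf_counter() - start)
    latencies.sort()
    p95_index = min(len(latencies) - 1, int(0.95 * len(latencies)))
    return {
        "median_s": statistics.median(latencies),
        "p95_s": latencies[p95_index],
        "max_s": latencies[-1],
    }


def fake_predict() -> int:
    """Hypothetical placeholder for data retrieval plus model inference."""
    return sum(i * i for i in range(10_000))


LATENCY_BUDGET_S = 1.0  # real-time threshold used in this document
report = benchmark(fake_predict)
within_budget = report["p95_s"] < LATENCY_BUDGET_S
```

Tracking a high percentile rather than the mean is a deliberate choice: clinicians notice the slow cases, so the budget should hold for nearly all requests, not just on average.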

FAQ 2: How can we validate that our model's speed does not come at the cost of clinical accuracy and patient safety?

Answer: Robust, prospective validation is the cornerstone of ensuring that speed does not compromise safety. A purpose-built AI must be validated against clinically relevant endpoints in a setting that mimics real-world use [22] [32].

- Troubleshooting Steps:

- Conduct a "Human-in-the-Loop" Simulation: Design a study where embryologists or clinicians use your AI tool in a simulated, time-pressured environment. Compare the accuracy and efficiency of decisions made with and without the AI support.

- Implement a "Silent Trial": Run the AI tool in parallel with the standard clinical workflow without showing its results to the clinical team. Record the AI's predictions and compare them to both the clinical decisions and the ultimate patient outcomes (e.g., blastocyst formation, live birth). This validates efficacy without risking patient safety [22].

- Audit for Model Drift: Establish a continuous monitoring system to track the model's performance over time as new patient data is acquired. A drop in accuracy, even if speed remains high, indicates model drift and the need for retraining.
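A continuous drift audit can start from something as simple as a rolling accuracy window. The sketch below is one minimal design, with the class name `DriftMonitor`, the window size, and the tolerance all being assumptions to adapt locally.

```python
from collections import deque


class DriftMonitor:
    """Flags drift when rolling accuracy falls below baseline minus tolerance."""

    def __init__(self, baseline_accuracy: float, window: int = 100,
                 tolerance: float = 0.05) -> None:
        self.baseline = baseline_accuracy
        self.tolerance = tolerance
        self.results = deque(maxlen=window)  # keeps only the latest outcomes

    def update(self, prediction: int, outcome: int) -> bool:
        """Record one confirmed outcome; return True if drift is flagged."""
        self.results.append(prediction == outcome)
        if len(self.results) < self.results.maxlen:
            return False  # not enough data yet for a stable estimate
        accuracy = sum(self.results) / len(self.results)
        return accuracy < self.baseline - self.tolerance


# Hypothetical usage: baseline accuracy taken from the validation study.
monitor = DriftMonitor(baseline_accuracy=0.9, window=10, tolerance=0.1)
for _ in range(10):
    monitor.update(prediction=1, outcome=1)    # model still correct
drift_flagged = False
for _ in range(5):
    drift_flagged = monitor.update(prediction=1, outcome=0)  # model now wrong
```

Because outcomes such as live birth arrive months after the prediction, the monitor should be fed as outcomes are confirmed; earlier endpoints like blastocyst formation give a faster signal.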

FAQ 3: Our model for predicting blastocyst formation is accurate but computationally intensive, causing delays. What architectural strategies can improve inference speed?

Answer: For time-lapse image analysis, the choice of deep learning architecture directly impacts speed. Replacing a single, complex model with a staged or hybrid architecture can significantly reduce processing time [33].

- Troubleshooting Steps:

- Analyze Computational Bottlenecks: Use profiling tools to identify if the lag is due to data preprocessing, feature extraction, or the model's inference.

- Consider a Two-Stage Model: As demonstrated in a study predicting blastocyst formation, a two-stage model that first identifies cellular events and then uses a sequential model like a Gated Recurrent Unit (GRU) for prediction can achieve high accuracy (93%) with efficient processing [33].

- Optimize for Hardware Acceleration: Ensure the model software stack is configured to leverage GPU acceleration, which is critical for processing the high-volume image data from time-lapse systems in real-time.

Experimental Protocols for Validating Speed and Accuracy

To empirically balance speed and accuracy, researchers should adopt the following experimental protocols.

Protocol 1: Real-World Workflow Impact Study

This protocol assesses the integration of an AI clinical decision support system (CDSS) for FSH starting dose selection and trigger timing.

- Objective: To evaluate whether the adjunctive use of AI software changes treatment decisions and patient outcomes without introducing workflow delays [30].

- Methodology:

- Design: Retrospective cohort study with matched historical controls.

- Intervention: Physicians use an AI CDSS (e.g., Stim Assist) integrated into the EMR to guide FSH starting dose and trigger timing. The software provides predictions in real-time (<1 second) [30].

- Control: Historical patients treated by the same physicians without AI.

- Primary Endpoints:

- Speed Metric: Time from EMR access to AI recommendation display.

- Efficacy Metrics: Starting FSH dose (IU), total FSH dose (IU), number of metaphase II (MII) oocytes retrieved.

- Statistical Analysis: T-test to compare means between groups for efficacy metrics. Descriptive statistics for speed metrics.
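For the statistical analysis step, the sketch below computes a two-sample t statistic in plain Python. It uses Welch's variant (unequal variances), which is an assumption on our part since the protocol only specifies a t-test; the oocyte counts are invented, and a statistics package would be needed to convert the result into a p-value.

```python
import math
import statistics
from typing import Sequence, Tuple


def welch_t(a: Sequence[float], b: Sequence[float]) -> Tuple[float, float]:
    """Return Welch's t statistic and its approximate degrees of freedom."""
    na, nb = len(a), len(b)
    va, vb = statistics.variance(a), statistics.variance(b)  # sample variances
    se_sq = va / na + vb / nb
    t = (statistics.mean(a) - statistics.mean(b)) / math.sqrt(se_sq)
    df = se_sq ** 2 / ((va / na) ** 2 / (na - 1) + (vb / nb) ** 2 / (nb - 1))
    return t, df


# Hypothetical MII oocyte counts per patient.
ai_group = [10, 12, 14]
control_group = [8, 10, 12]
t_stat, dof = welch_t(ai_group, control_group)
```

Welch's form avoids assuming equal variance between the AI-assisted and historical groups, which is rarely guaranteed with matched historical controls.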

Protocol 2: Prospective Validation of an AI for Embryo Selection

This protocol validates a deep learning model for predicting blastocyst formation from cleavage-stage embryos using time-lapse images.

- Objective: To predict blastocyst formation at the cleavage stage (Day 3) with high accuracy and speed, enabling earlier embryo transfer [33].

- Methodology:

- Model Architecture: A ResNet-GRU hybrid model.

- Stage 1 (Feature Extraction): A Residual Neural Network (ResNet) processes individual time-lapse frames to extract spatial features.

- Stage 2 (Temporal Analysis): A Gated Recurrent Unit (GRU) analyzes the sequence of extracted features to model embryo development over time [33].

- Data Input: Time-lapse video frames from Day 0 to Day 3 (72 hours post-insemination).

- Outcome: Binary classification (Blastocyst/No Blastocyst).

- Validation: Performance evaluated on a hold-out test set with metrics including accuracy, sensitivity, specificity, and per-image inference time.


Table 1: Performance Metrics of a ResNet-GRU Model for Blastocyst Prediction

| Metric | Value | Interpretation |

|---|---|---|

| Validation Accuracy | 93% | The model correctly classified blastocyst outcome in 93% of cases [33]. |

| Sensitivity | 0.97 | The model correctly identifies 97% of embryos that will form a blastocyst [33]. |

| Specificity | 0.77 | The model correctly identifies 77% of embryos that will not form a blastocyst [33]. |

| Inference Speed | Real-time (<1 sec/video) | The model processes a full time-lapse video sequence fast enough for clinical workflow integration [30]. |

Workflow Visualization: AI Integration in the IVF Pipeline

The following diagram illustrates the key touchpoints for real-time AI decision support within a standard IVF cycle, highlighting where speed of integration is most critical.

AI Integration in the IVF Pipeline

The Scientist's Toolkit: Research Reagent Solutions

For researchers developing and validating fertility AI models, the following table details essential "research reagents" – key data types and software components required to build effective systems.

Table 2: Essential Components for Fertility AI Research & Development

| Component | Function in the Experiment | Example in Context |

|---|---|---|

| Clinical & Demographic Data | Provides baseline patient characteristics for personalizing treatment protocols and understanding population biases. | Age, Body Mass Index (BMI), infertility diagnosis [30] [34]. |

| Endocrine & Biomarker Data | Used as key input features for models predicting ovarian response and optimizing drug dosing. | Anti-Müllerian Hormone (AMH), Antral Follicle Count (AFC), baseline Estradiol (E2) [30] [35]. |

| Ultrasound & Follicle Metrics | Serves as temporal, image-based data for monitoring follicle growth and predicting oocyte maturity. | 2D/3D ultrasound images; follicle diameters and areas grouped by size cohorts (e.g., 14-15mm, 16-17mm) [22] [34]. |

| Time-Lapse Imaging (TLI) Data | Provides continuous, non-invasive visual data of embryo development for morphokinetic analysis and blastocyst prediction. | Video frames from embryo culture incubators, annotated for key cellular events (e.g., cell division) [33]. |

| Electronic Medical Record (EMR) API | The critical conduit for seamless, real-time data exchange between the AI model and the clinical workflow. | An API connection that allows the AI to pull patient data and push predictions directly into the clinician's view without manual steps [31]. |

| Deep Learning Frameworks | Software libraries used to build, train, and validate complex AI models for image and sequence analysis. | TensorFlow or PyTorch used to implement architectures like CNNs for image analysis or GRUs for temporal modeling [33]. |


High-Performance Architectures: Methodologies for Efficient and Accurate Fertility AI

Technical Troubleshooting Guide: Common LightGBM Issues in Fertility Research

This section addresses specific challenges you might encounter when using LightGBM for reproductive medicine research.

FAQ 1: My computer runs out of RAM when training LightGBM on a large dataset of IVF cycles. What can I do?

This is a common issue when working with extensive medical datasets. Several solutions exist [36]:

- Set the histogram_pool_size parameter to control the MB of memory you want LightGBM to use.

- Lower the num_leaves parameter, as this is a primary controller of model complexity.

- Reduce the max_bin parameter to decrease the granularity of feature binning.
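As a sketch, the three memory-related settings above could be collected in a parameter dictionary like the one below. The specific values are illustrative assumptions, not recommendations; they should be tuned against validation performance on your own IVF dataset.

```python
# Hypothetical memory-conscious LightGBM settings (values are illustrative).
memory_saving_params = {
    "objective": "regression",
    "histogram_pool_size": 1024,  # cap the histogram cache, in MB
    "num_leaves": 15,             # below the library default of 31
    "max_bin": 63,                # coarser binning than the default of 255
}

# Simple sanity check that the settings are actually more conservative
# than the library defaults.
is_conservative = (
    memory_saving_params["num_leaves"] < 31
    and memory_saving_params["max_bin"] < 255
    and memory_saving_params["histogram_pool_size"] > 0
)
```

A dictionary like this would typically be passed as the params argument when training. Lowering num_leaves and max_bin can reduce accuracy as well as memory, so it is worth changing one setting at a time.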

FAQ 2: The results from my LightGBM model are not reproducible between runs, even with the same random seed. Why?

This is normal and expected behavior when using the GPU version of LightGBM [36]. For reproducibility, you can:

- Use the gpu_use_dp = true parameter to enable double precision (though this may slow down training).

- Alternatively, use the CPU version of LightGBM for fully reproducible results [36].

FAQ 3: LightGBM crashes randomly with an error about "libiomp5.dylib" and "libomp.dylib". What does this mean?

This error indicates a conflict between multiple OpenMP libraries installed on your system [36]. If you are using Conda as your package manager, a reliable solution is to source all your Python packages from the conda-forge channel, as it contains built-in patches for this conflict. Other workarounds include creating symlinks to a single system-wide OpenMP library or removing MKL optimizations with conda install nomkl [36].

FAQ 4: My LightGBM model training hangs or gets stuck when I use multiprocessing. How can I fix this?

This is a known issue when using OpenMP multithreading and forking in Linux simultaneously [36]. The most straightforward solution is to disable multithreading within LightGBM by setting nthreads=1. A more resource-intensive solution is to use new processes instead of forking, though this requires creating multiple copies of your dataset in memory [36].

FAQ 5: Why is early stopping not enabled by default in LightGBM?

LightGBM requires users to specify a validation set for early stopping because the appropriate strategy for splitting data into training and validation sets depends heavily on the task and domain [36]. This design gives researchers, who understand their data's structure (such as time-series data from sequential IVF cycles), the flexibility to define the most suitable validation approach.
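For sequential IVF cycles, one reasonable validation strategy is a chronological split, so that the validation set contains only cycles later than those used for training. The sketch below shows that split in plain Python; the record layout, the `cycle_date` key, and the function name are assumptions. The resulting validation portion is what would then be supplied to LightGBM as the validation set for early stopping.

```python
from typing import Dict, List, Tuple

Cycle = Dict[str, object]


def chronological_split(cycles: List[Cycle], date_key: str,
                        valid_fraction: float = 0.2) -> Tuple[List[Cycle], List[Cycle]]:
    """Oldest cycles go to training, the most recent ones to validation."""
    ordered = sorted(cycles, key=lambda c: c[date_key])
    n_valid = round(len(ordered) * valid_fraction)
    cut = len(ordered) - n_valid
    return ordered[:cut], ordered[cut:]


# Hypothetical cycle records with ISO-format dates (sortable as strings).
cycles = [
    {"cycle_date": "2023-03-10", "blastocysts": 2},
    {"cycle_date": "2021-07-01", "blastocysts": 0},
    {"cycle_date": "2022-11-15", "blastocysts": 3},
    {"cycle_date": "2024-01-20", "blastocysts": 1},
    {"cycle_date": "2020-05-05", "blastocysts": 4},
]
train_cycles, valid_cycles = chronological_split(cycles, "cycle_date")
```

If the same patient contributes several cycles, splitting by patient as well as by date helps prevent information leaking from training into validation.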

Experimental Protocol: Predicting Blastocyst Yield in IVF Cycles

The following methodology is based on a 2025 study that developed and validated machine learning models to quantitatively predict blastocyst yields [37] [29].

Data Source and Study Population

- Dataset: The study analyzed 9,649 IVF/ICSI cycles [38] [29].

- Outcome Distribution: The dataset included cycles that produced no usable blastocysts (40.7%), 1-2 usable blastocysts (37.7%), and 3 or more usable blastocysts (21.6%) [38] [29].

- Data Splitting: The dataset was randomly split into a training set and a test set for model development and internal validation [29].

Feature Preprocessing and Selection

- Initial Feature Set: The study incorporated potential clinical predictors established in reproductive medicine.

- Feature Selection: A recursive feature elimination (RFE) process was used. The analysis found that model performance remained stable with 8 to 21 features but declined sharply with 6 or fewer features [29].

- Final Feature Set: The optimal LightGBM model utilized 8 key features [37] [29].

Model Training and Validation

- Algorithms Compared: Three machine learning models (Support Vector Machine (SVM), LightGBM, and XGBoost) were trained and compared against a traditional Linear Regression baseline [37].

- Performance Metrics: Models were evaluated using the R-squared (R²) coefficient, Mean Absolute Error (MAE), and Root Mean Square Error (RMSE) for regression tasks. For the multi-classification task (predicting 0, 1-2, or ≥3 blastocysts), accuracy and Kappa coefficients were used [37] [29].

- Validation: Internal validation was performed on the held-out test set [29].
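The performance metrics listed above can be written out in a few lines of plain Python. This is a reference sketch with made-up predictions; the function names are assumptions, and the three-class coding (0 = no blastocysts, 1 = 1-2, 2 = 3 or more) is chosen only for illustration.

```python
import math
from collections import Counter
from typing import Dict, List, Sequence


def regression_metrics(y_true: Sequence[float], y_pred: Sequence[float]) -> Dict[str, float]:
    """R-squared, mean absolute error and root mean square error."""
    n = len(y_true)
    mean_true = sum(y_true) / n
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)
    return {
        "r2": 1 - ss_res / ss_tot,
        "mae": sum(abs(t - p) for t, p in zip(y_true, y_pred)) / n,
        "rmse": math.sqrt(ss_res / n),
    }


def cohen_kappa(y_true: List[int], y_pred: List[int]) -> float:
    """Agreement between predicted and true classes, corrected for chance."""
    n = len(y_true)
    observed = sum(t == p for t, p in zip(y_true, y_pred)) / n
    true_counts, pred_counts = Counter(y_true), Counter(y_pred)
    expected = sum(true_counts[c] * pred_counts[c] for c in true_counts) / n ** 2
    return (observed - expected) / (1 - expected)


# Hypothetical blastocyst counts (regression) and yield classes (classification).
reg = regression_metrics([0, 1, 2, 3, 4], [0, 1, 2, 3, 5])
kappa = cohen_kappa([0, 0, 1, 1, 2, 2], [0, 0, 1, 2, 2, 2])
```

Kappa is reported next to accuracy because the outcome classes are unevenly distributed, so part of the raw agreement would occur by chance.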

The workflow for this experiment is summarized in the diagram below.

Performance Results and Key Features

The following tables summarize the quantitative outcomes of the cited study and the essential "research reagents" – the key input features required for the model.

Table 1: Comparative Model Performance for Blastocyst Yield Prediction (Regression Task) [37] [29]

| Model | Number of Features | R² | Mean Absolute Error (MAE) | Root Mean Square Error (RMSE) |

|---|---|---|---|---|

| LightGBM | 8 | 0.675 | 0.813 | 1.12 |

| XGBoost | 11 | 0.673 | 0.809 | 1.12 |

| SVM | 10 | 0.676 | 0.793 | 1.12 |

| Linear Regression | 8 | 0.587 | 0.943 | 1.26 |

Table 2: LightGBM Performance on Multi-Class Prediction Task [29]

| Cohort | Accuracy | Kappa Coefficient |

|---|---|---|

| Overall Test Set | 0.678 | 0.500 |

| Advanced Maternal Age Subgroup | 0.710 | 0.472 |

| Poor Embryo Morphology Subgroup | 0.690 | 0.412 |

| Low Embryo Count Subgroup | 0.675 | 0.365 |

Table 3: Research Reagent Solutions - Critical Features for Prediction

| Key Feature | Function / Rationale | Relative Importance |

|---|---|---|

| Number of Extended Culture Embryos | The total number of embryos available for blastocyst culture is the fundamental base input. | 61.5% |

| Mean Cell Number on Day 3 | Indicates normal and timely embryo cleavage, a strong marker of developmental potential. | 10.1% |

| Proportion of 8-cell Embryos on Day 3 | The presence of embryos at the ideal cell stage on day 3 is a critical positive predictor. | 10.0% |

| Proportion of Symmetrical Embryos on Day 3 | Reflects embryo quality; symmetrical cleavage is associated with higher viability. | 4.4% |

| Proportion of 4-cell Embryos on Day 2 | Indicates early and timely embryo development. | 7.1% |

| Female Age | A well-established non-lab factor influencing overall oocyte and embryo quality. | 2.4% |

Balancing Accuracy and Speed in Fertility AI Models

The case study demonstrates that LightGBM effectively balances predictive accuracy and computational efficiency, a crucial consideration for clinical AI models.

- Accuracy vs. Interpretability: While all three ML models showed comparable performance, LightGBM was selected as optimal because it achieved this performance with fewer features (8) than SVM (10) and XGBoost (11), reducing overfitting risk and enhancing simplicity for clinical application [37] [29].

- Computational Efficiency: LightGBM is engineered for speed and lower memory usage. It uses a histogram-based algorithm to bucket continuous feature values, which accelerates the training process. Furthermore, it grows trees leaf-wise rather than level-wise, which can lead to higher accuracy with fewer trees, directly contributing to faster training times – a significant advantage when iterating on model development [39].

- Clinical Utility: The model's strong performance in poor-prognosis subgroups (e.g., advanced maternal age, low embryo count) is particularly valuable [29]. These patients face more urgent dilemmas regarding extended culture, and a fast, accurate prediction can directly support critical treatment decisions.
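The histogram idea behind the computational efficiency point can be illustrated with a simplified sketch. It uses equal-width bins for clarity, which is an assumption made for readability; LightGBM's own bin construction is more sophisticated, and the function names here are hypothetical.

```python
from typing import List


def bin_feature(values: List[float], max_bin: int) -> List[int]:
    """Map each continuous value to one of max_bin equal-width buckets."""
    lo, hi = min(values), max(values)
    width = (hi - lo) / max_bin or 1.0  # avoid zero width for constant features
    return [min(max_bin - 1, int((v - lo) / width)) for v in values]


def histogram(bin_ids: List[int], max_bin: int) -> List[int]:
    """Count how many samples fall into each bucket."""
    counts = [0] * max_bin
    for b in bin_ids:
        counts[b] += 1
    return counts


# Hypothetical continuous feature with 11 distinct values.
values = [float(i) for i in range(11)]
bin_ids = bin_feature(values, max_bin=5)
counts = histogram(bin_ids, max_bin=5)

# Candidate split points drop from (distinct values - 1) to (bins - 1).
candidate_splits_raw = len(set(values)) - 1
candidate_splits_binned = 5 - 1
```

Scanning a handful of bin boundaries instead of every distinct value is what makes split finding cheaper, at the cost of slightly coarser thresholds.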

Frequently Asked Questions (FAQs)

Q1: What are the primary benefits of combining neural networks with bio-inspired optimization algorithms? Integrating neural networks (NNs) with bio-inspired optimization algorithms (e.g., Ant Colony Optimization) creates a powerful synergy. The neural network, often a Graph Neural Network (GNN), learns to generate instance-specific heuristic priors from data. The bio-inspired algorithm, such as ACO, then uses these learned heuristics to guide its stochastic search more efficiently through the solution space. This hybrid approach leverages the pattern recognition and generalization capabilities of NNs with the powerful exploration and combinatorial optimization strength of algorithms like ACO, often leading to faster convergence and higher-quality solutions than either method could achieve alone [40].

Q2: My hybrid model is converging to suboptimal solutions. How can I improve its exploration? Premature convergence often indicates an imbalance between exploration and exploitation. You can address this by:

- Adjusting Pheromone Parameters: In ACO-based hybrids, increase the influence of the heuristic information (beta parameter) relative to the pheromone trails (alpha parameter) in the early stages of training to encourage exploration of new paths [40].

- Entropy Regularization: Incorporate entropy regularization into your reinforcement learning training protocol (e.g., when using Proximal Policy Optimization). This technique encourages the policy to be more stochastic during training, preventing it from becoming overconfident in a narrow set of actions and promoting broader exploration [40].

- Hybridization for Balance: Intentionally combine algorithms with complementary strengths. For example, one study integrated the strong exploitation phase of Bacterial Foraging Optimization (BFO) into the Artificial Bee Colony (ABC) algorithm to improve its local search capabilities and accelerate convergence, achieving a more balanced search [41].

Q3: The inference speed of my hybrid model is too slow for practical use. What optimizations can I make? Slow inference is a common challenge. Consider these strategies:

- Implement Focused Search: Instead of rebuilding complete solutions from scratch every iteration, use a method like Focused ACO (FACO). This technique performs targeted modifications around a high-quality reference solution (provided by the neural network), preserving strong substructures and only refining weaker parts of the solution, which drastically reduces computational overhead [40].

- Use Candidate Lists: Restrict the neighborhood search for each node to a "candidate list" of the most promising connections, rather than evaluating all possible connections. This significantly reduces the decision space and speeds up each iteration of the optimization algorithm [40].

- Optimize Feature Set: For the neural network component, ensure you are not using redundant features. Perform feature importance analysis and recursive feature elimination to identify the minimal set of highly predictive features, which can reduce model complexity and inference time without sacrificing accuracy [29].
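The candidate-list strategy above can be sketched as follows. This is a minimal illustration, assuming the learned heuristic is available as a dense square matrix in which a larger value means a more promising connection; `build_candidate_lists` and `allowed_moves` are hypothetical helper names, not part of any published implementation.

```python
def build_candidate_lists(heuristic, k):
    """For each node, keep the k other nodes with the highest heuristic score."""
    n = len(heuristic)
    candidates = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        others.sort(key=lambda j: heuristic[i][j], reverse=True)
        candidates.append(others[:k])
    return candidates


def allowed_moves(current, visited, candidates, n_nodes):
    """Restrict the next move to unvisited candidates of the current node.

    Falls back to all unvisited nodes when every candidate is already used,
    so solution construction can always finish.
    """
    shortlist = [j for j in candidates[current] if j not in visited]
    if shortlist:
        return shortlist
    return [j for j in range(n_nodes) if j not in visited]


# Made-up symmetric heuristic matrix for four nodes.
H = [
    [0.0, 0.9, 0.1, 0.2],
    [0.9, 0.0, 0.3, 0.1],
    [0.1, 0.3, 0.0, 0.8],
    [0.2, 0.1, 0.8, 0.0],
]
cands = build_candidate_lists(H, k=2)
```

Each construction step then evaluates at most k options per node instead of all remaining nodes, which is where the per-iteration saving comes from.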

Q4: How can I effectively map a real-world fertility treatment problem, like embryo selection, onto this hybrid framework? Framing a fertility AI problem requires careful definition of the problem components:

- Problem as a Graph: Define your fertility data as a graph. For example, in time-lapse imaging of embryo development, each time point or morphological feature can be a node, with edges representing temporal or structural relationships.

- Solution as a Path/Ranking: The task of selecting the best embryo can be formulated as finding the optimal "path" or sequence of developmental stages that leads to a positive outcome (e.g., blastocyst formation or fetal heartbeat), or simply as a ranking problem where the hybrid model scores and ranks embryos.

- Neural Network's Role: A GNN or CNN processes the graph or image data to extract meaningful features and generate a heuristic matrix (H_θ). This matrix predicts the "desirability" of certain developmental patterns or morphological features [40].

- Optimizer's Role: The ACO (or other bio-inspired algorithm) uses these learned heuristics to intelligently explore the vast space of possible embryo quality rankings, efficiently identifying the embryos with the highest predicted potential for success, as demonstrated by AI models that correlate embryo images with implantation success [10].

Troubleshooting Guides

Performance Degradation: Low Predictive Accuracy

Description: The hybrid model's predictions are inaccurate and do not generalize well to unseen data, failing to outperform baseline models.

| Possible Cause | Diagnostic Steps | Recommended Solution |

|---|---|---|

| Poor Heuristic Guidance | Check the correlation between the NN's output heuristics and solution quality on a validation set. | Refine the NN's training. Use a more stable RL algorithm like Proximal Policy Optimization (PPO) with a value function to reduce variance and improve the quality of the learned heuristics [40]. |

| Feature Inefficacy | Perform feature importance analysis (e.g., using LightGBM's built-in methods) to identify non-predictive features [29]. | Conduct recursive feature elimination to find the optimal subset of features. Incorporate domain knowledge (e.g., number of extended culture embryos, mean cell number on Day 3 for blastocyst prediction) to select biologically relevant features [29]. |

| Algorithm Imbalance | Analyze the search behavior; is it stuck in local optima (over-exploitation) or wandering randomly (over-exploration)? | Fine-tune the metaheuristic's parameters. For ACO, adjust the α (pheromone weight) and β (heuristic weight) parameters. Consider hybridizing two bio-inspired algorithms to balance exploration and exploitation [41]. |

Training Instability and Non-Convergence

Description: During training, the model's loss or performance metric fluctuates wildly and fails to stabilize or improve over time.

| Possible Cause | Diagnostic Steps | Recommended Solution |

|---|---|---|

| High-Variance Gradients | Monitor the gradient norms and the variance of the reward signals in RL-based training. | Implement Gradient Clipping and Entropy Regularization. Using PPO, which constrains policy updates, is specifically designed to enhance training stability and prevent destructive policy changes [40]. |

| Incompatible Components | Test the neural network and the optimization algorithm independently to see if one is fundamentally failing. | Ensure the NN's output scale is compatible with the optimizer's expected input. Normalize heuristic values and pheromone trails to prevent one from dominating the other prematurely [40]. |

| Data Inconsistency | Verify the consistency of data preprocessing and labeling between training and validation splits. | Standardize data pipelines and augment the training set with techniques like synthetic data generation, which has been used to refine embryo evaluation models and improve robustness [14]. |

Computational Bottlenecks and Scalability Issues

Description: The model takes too long to train or perform inference, especially as problem size (e.g., number of nodes in a network, number of embryo features) increases.

| Possible Cause | Diagnostic Steps | Recommended Solution |

|---|---|---|

| Inefficient Search | Profile the code to identify if the bio-inspired optimizer is the bottleneck. | Integrate Focused ACO (FACO) and candidate lists. FACO refines existing solutions instead of building new ones from scratch, which narrows the search space and improves scalability for large problems [40]. |

| Overly Complex NN | Analyze the NN's architecture; is it deeper than necessary? | Simplify the NN model. Explore more efficient architectures or use model compression techniques like pruning. A study on blastocyst prediction found that LightGBM provided excellent accuracy with fewer features, enhancing simplicity and speed [29]. |

| Inadequate Hardware | Monitor GPU/CPU and memory utilization during training and inference. | Leverage hardware acceleration. Ensure the framework is configured to utilize GPUs for the NN's forward/backward passes and that the optimizer's code is efficiently vectorized. |

Experimental Protocols & Workflows

Protocol: Implementing a Neural-FACO Hybrid for Combinatorial Optimization

This protocol outlines the steps for building a hybrid framework like NeuFACO, which combines a GNN with a Focused Ant Colony Optimization for problems like optimal resource scheduling in IVF labs [40].

1. Problem Formulation:

- Define the problem on a graph G = (V, E), where V represents entities (e.g., cities for TSP, treatment steps, embryo samples) and E represents connections with associated costs or distances.

2. Neural Network Training (Amortized Inference):

- Architecture: Employ a Graph Neural Network (GNN) to process the graph G.

- Outputs: The GNN should output two things: 1) a heuristic matrix H_θ, which provides learned priors over edges, and 2) a value estimate V_θ, which predicts the expected solution quality for the instance.

- Training Method: Train the GNN using Proximal Policy Optimization (PPO), an on-policy reinforcement learning algorithm. The reward is typically the negative of the solution cost (e.g., R = -C(π)). Using PPO with entropy regularization encourages exploration and stabilizes training [40].
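The training objective in step 2 can be sketched in a few lines. This is the standard PPO clipped surrogate with an entropy bonus, written in plain Python for readability; the clip range, the entropy coefficient, and the choice of advantage (for example, the reward R minus the value estimate V_θ) are assumptions, since the protocol does not specify them.

```python
import math


def ppo_clipped_loss(new_logp, old_logp, advantages, entropies,
                     clip_eps=0.2, ent_coef=0.01):
    """PPO clipped surrogate loss with an entropy bonus (to be minimized).

    new_logp / old_logp: log-probabilities of the sampled actions under the
    current and the data-collecting policy. advantages: one estimate per
    sample. entropies: policy entropy at each sampled decision.
    """
    surrogate_terms = []
    for nl, ol, adv in zip(new_logp, old_logp, advantages):
        ratio = math.exp(nl - ol)  # pi_new(a|s) / pi_old(a|s)
        clipped = max(1.0 - clip_eps, min(1.0 + clip_eps, ratio))
        # Taking the minimum removes any incentive to move the ratio
        # outside the clip range, which limits the size of policy updates.
        surrogate_terms.append(min(ratio * adv, clipped * adv))
    surrogate = sum(surrogate_terms) / len(surrogate_terms)
    mean_entropy = sum(entropies) / len(entropies)
    # The entropy bonus rewards a more stochastic policy (more exploration).
    return -(surrogate + ent_coef * mean_entropy)


# Illustrative call with made-up numbers.
loss = ppo_clipped_loss(
    new_logp=[math.log(2.0)], old_logp=[0.0],
    advantages=[1.0], entropies=[0.0],
)
```

In a real training loop the same quantity would be computed on tensors with automatic differentiation; the scalar version here only shows how clipping and the entropy term enter the loss.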

3. Focused ACO for Solution Refinement:

- Initialization: Initialize pheromone trails, often using the neural heuristic H_θ as a prior.

- Solution Construction: Let ants build solutions probabilistically using a rule that combines pheromone (τ) and neural heuristic (H_θ): p_ij ∝ (τ_ij^α) * (H_θ(i,j)^β).

- Focused Search: Instead of rebuilding full tours, implement FACO. Select a high-quality reference solution (e.g., the best solution from the initial phase or the NN's greedy solution). FACO then iteratively identifies and refines the weakest segments of this reference solution through local search operators like 2-opt or node relocation, dramatically improving efficiency [40].

- Pheromone Update: Update pheromone trails based on the quality of the new solutions found, reinforcing paths in the promising regions identified by the focused search.
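The construction rule and pheromone update in step 3 can be sketched as below. The transition rule follows the proportionality stated above; the evaporation-plus-deposit update is the classic Ant System form and is an assumption here, as are the function names and default parameter values. All pheromone and heuristic entries are assumed to be strictly positive.

```python
import random


def transition_probs(current, unvisited, tau, heur, alpha=1.0, beta=2.0):
    """p_ij proportional to tau_ij^alpha * H(i,j)^beta over unvisited nodes j."""
    weights = [(tau[current][j] ** alpha) * (heur[current][j] ** beta)
               for j in unvisited]
    total = sum(weights)  # positive because tau and heur are positive
    return [w / total for w in weights]


def construct_tour(n, tau, heur, rng, alpha=1.0, beta=2.0, start=0):
    """Let one ant build a full tour by sampling from the transition rule."""
    tour = [start]
    unvisited = [j for j in range(n) if j != start]
    while unvisited:
        probs = transition_probs(tour[-1], unvisited, tau, heur, alpha, beta)
        nxt = rng.choices(unvisited, weights=probs, k=1)[0]
        tour.append(nxt)
        unvisited.remove(nxt)
    return tour


def update_pheromone(tau, tour, cost, rho=0.1):
    """Evaporate all trails, then deposit 1/cost on the edges of the tour."""
    n = len(tau)
    for i in range(n):
        for j in range(n):
            tau[i][j] *= (1.0 - rho)
    for a, b in zip(tour, tour[1:] + tour[:1]):  # closed tour
        tau[a][b] += 1.0 / cost
        tau[b][a] += 1.0 / cost


# Illustrative run on a made-up 3-node instance.
tau = [[1.0] * 3 for _ in range(3)]
heur = [[1.0, 1.0, 3.0], [1.0, 1.0, 1.0], [3.0, 1.0, 1.0]]
probs = transition_probs(0, [1, 2], tau, heur, alpha=1.0, beta=1.0)
tour = construct_tour(3, tau, heur, random.Random(0))
update_pheromone(tau, tour, cost=2.0, rho=0.5)
```

Raising beta relative to alpha makes the ants follow the neural heuristic more closely, while raising alpha makes them follow accumulated pheromone; this is the lever discussed in the FAQ on exploration.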

The workflow below visualizes the architecture and data flow of this hybrid system.

Protocol: Quantitative Blastocyst Yield Prediction with Machine Learning

This protocol is adapted from a study that successfully used machine learning models (LightGBM, SVM, XGBoost) to quantitatively predict blastocyst yields in IVF cycles, a key task for balancing accuracy and speed in fertility AI [29].

1. Data Collection and Preprocessing:

- Cohort: Collect data from a large number of IVF cycles (e.g., n > 9,000).

- Feature Set: Compile a comprehensive set of potential predictors, including:

- Demographic: Female age.

- Stimulation-related: Number of oocytes retrieved, number of 2PN embryos.

- Embryo Morphology (Day 2 & 3): Number of extended culture embryos, mean cell number, proportion of 8-cell embryos, proportion of 4-cell embryos, proportion of symmetrical embryos, mean fragmentation.

- Outcome: The target variable is the number of usable blastocysts formed per cycle.

- Data Splitting: Randomly split the dataset into training and test sets (e.g., 70/30 or 80/20).

2. Model Training and Feature Selection:

- Model Selection: Train multiple machine learning models, such as LightGBM, XGBoost, and Support Vector Machines (SVM).

- Baseline Comparison: Include a traditional linear regression model as a baseline.

- Feature Selection: Use Recursive Feature Elimination (RFE) to identify the optimal subset of features. Iteratively remove the least important features until model performance (e.g., R², Mean Absolute Error) begins to drop significantly. The goal is a parsimonious model for speed and interpretability [29].
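The elimination loop described under Feature Selection can be sketched generically. This is a simplified illustration, not the cited study's code: `evaluate` and `rank_importance` are hypothetical callables standing in for "refit the model and score it on validation data" and "read the fitted model's feature importances", and the tolerance is an assumed stopping threshold.

```python
def recursive_feature_elimination(features, evaluate, rank_importance,
                                  tolerance=0.01):
    """Drop the least important feature until the score falls noticeably.

    evaluate(subset) -> validation score, higher is better (e.g., R^2).
    rank_importance(subset) -> dict mapping each feature to its importance.
    """
    current = list(features)
    best_score = evaluate(current)
    while len(current) > 1:
        importances = rank_importance(current)
        weakest = min(current, key=lambda f: importances[f])
        candidate = [f for f in current if f != weakest]
        score = evaluate(candidate)
        if best_score - score > tolerance:
            break  # removing this feature hurts performance too much
        current = candidate
        best_score = max(best_score, score)
    return current


# Toy stand-ins with made-up numbers: each feature adds a fixed amount
# to the score, and importance equals that contribution.
contribution = {"a": 0.5, "b": 0.3, "c": 0.01, "d": 0.0}
selected = recursive_feature_elimination(
    features=["a", "b", "c", "d"],
    evaluate=lambda subset: sum(contribution[f] for f in subset),
    rank_importance=lambda subset: {f: contribution[f] for f in subset},
    tolerance=0.05,
)
```

Comparing each candidate against the best score seen so far, and not only against the previous step, prevents a long series of small losses from accumulating unnoticed.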

3. Model Evaluation and Interpretation:

- Performance Metrics: Evaluate models on the held-out test set using:

- R² (Coefficient of Determination): Measures the proportion of variance explained.

- Mean Absolute Error (MAE): The average absolute error between predicted and actual blastocyst counts.

- Model Interpretation: For the chosen model (e.g., LightGBM), perform:

- Feature Importance Analysis: Identify the top predictors of blastocyst yield.

- Partial Dependence Plots (PDPs) & Individual Conditional Expectation (ICE) Plots: Visualize the relationship between key features and the predicted outcome [29].
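The two performance metrics listed in step 3 have simple definitions, shown here in plain Python so the arithmetic is explicit. The example counts are made up for illustration and are not data from the cited study.

```python
def mean_absolute_error(y_true, y_pred):
    """Average absolute difference between actual and predicted values."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def r_squared(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean_true = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)
    return 1.0 - ss_res / ss_tot


# Made-up blastocyst counts per cycle: actual vs. predicted.
actual = [0, 1, 2, 3]
predicted = [0, 1, 2, 4]
mae = mean_absolute_error(actual, predicted)  # 1 error of size 1 over 4 cycles
r2 = r_squared(actual, predicted)             # SS_res = 1, SS_tot = 5
```

MAE is expressed in the same unit as the target (blastocysts per cycle), which makes it easier to communicate clinically than R², while R² indicates how much of the between-cycle variation the model explains.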

The following workflow diagram illustrates the key stages of this predictive modeling process.

Performance Data & Benchmarks

Performance of Hybrid Optimization Models

The table below summarizes the performance of various hybrid models as reported in recent research, providing benchmarks for expected improvements.

| Model / Protocol Name | Core Hybrid Approach | Key Performance Improvement | Application Context |

|---|---|---|---|