Machine Learning Prediction Models for Rare Fertility Outcomes: From Data to Clinical Decision Support


Abstract

This article provides a comprehensive examination of machine learning (ML) applications in predicting rare and complex fertility outcomes for researchers, scientists, and drug development professionals. It explores the foundational principles underpinning ML prediction models in assisted reproductive technology (ART), analyzes diverse methodological approaches and their specific clinical applications, addresses critical optimization challenges in model development, and evaluates validation frameworks and comparative performance across algorithms. By synthesizing recent advancements and evidence, this review aims to guide the development of more robust, clinically applicable prediction tools that can enhance patient counseling, personalize treatment strategies, and ultimately improve success rates in infertility treatment.

Understanding ML for Rare Fertility Outcomes: Foundations and Clinical Imperatives

Quantitative Definitions of Key Fertility Outcomes

Fertility outcomes represent critical endpoints for evaluating assisted reproductive technology (ART) success. The table below summarizes quantitative definitions and performance metrics for key outcomes based on clinical and laboratory standards.

Table 1: Definitions and Performance Metrics for Key Fertility Outcomes

| Outcome | Definition | Key Performance Metrics | Reported Rates |
|---|---|---|---|
| Clinical Pregnancy | Detection of an intrauterine gestational sac via transvaginal ultrasound 28–35 days post-embryo transfer [1]. | Clinical Pregnancy Rate (CPR) = (Number of clinical pregnancies / Number of embryo transfers) × 100 [1]. | 46.08% (overall CPR in FET cycles); 61.14% (blastocyst transfers) vs. 34.13% (cleavage-stage transfers) [1]. |
| Live Birth | Delivery of one or more living infants after ≥24 weeks of gestation [2]. | Live Birth Rate (LBR) = (Number of live births / Number of embryo transfers) × 100 [2]. | 26.96% (overall LBR in IVF/ICSI cycles) [2]. |
| Blastocyst Formation | Development of a fertilized egg to a blastocyst by day 5 or 6, characterized by blastocoel expansion, inner cell mass (ICM), and trophectoderm (TE) [3]. | Blastocyst Formation Rate = (Number of blastocysts / Number of fertilized eggs cultured to day 5/6) × 100 [3]. | 53.6% (from good-quality day 3 embryos) vs. 19.3% (from poor-quality day 3 embryos) [3]. |
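
The three rate definitions in Table 1 share one form: events divided by a denominator, times 100. The following is a minimal Python sketch of that arithmetic. The function name `rate_percent` and the counts are hypothetical, chosen only for illustration, and are not taken from the cited studies.

```python
def rate_percent(events: int, denominator: int) -> float:
    """Return events / denominator expressed as a percentage."""
    if denominator <= 0:
        raise ValueError("denominator must be a positive count")
    if not 0 <= events <= denominator:
        raise ValueError("events must lie between 0 and the denominator")
    return 100.0 * events / denominator


# Hypothetical counts (illustration only, not study data).
clinical_pregnancy_rate = rate_percent(events=45, denominator=100)   # per embryo transfer
live_birth_rate = rate_percent(events=27, denominator=100)           # per embryo transfer
blastocyst_formation_rate = rate_percent(events=12, denominator=24)  # per fertilized egg cultured

print(f"CPR: {clinical_pregnancy_rate:.2f}%")
print(f"LBR: {live_birth_rate:.2f}%")
print(f"Blastocyst formation rate: {blastocyst_formation_rate:.2f}%")
```

Keeping the denominator explicit matters when comparing studies, because rates reported per transfer, per cycle, or per patient are not interchangeable.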

Experimental Protocols for Outcome Assessment

Protocol for Clinical Pregnancy Confirmation

Objective: To confirm clinical pregnancy post-embryo transfer. Workflow:

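- Serum β-hCG Testing: Measure serum β-hCG 12–14 days post-transfer to detect biochemical pregnancy [1].

- Transvaginal Ultrasound: Confirm an intrauterine gestational sac 28–35 days post-embryo transfer [1].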

Diagram 1: Clinical Pregnancy Confirmation Workflow

Protocol for Blastocyst Formation Assessment

Objective: To evaluate embryo development to the blastocyst stage using standardized grading. Workflow:

- Embryo Culture:

- Culture fertilized eggs in sequential media under tri-gas incubators (6% CO₂, 5% O₂, 89% N₂) at 37°C until day 5/6 [3].

- Blastocyst Grading (Gardner Criteria):

- High-Quality Blastocyst Definition: Expansion stage ≥3 with ICM and TE grades ≥B [1].

Diagram 2: Blastocyst Formation Assessment Workflow

Protocol for Live Birth Documentation

Objective: To document live birth resulting from ART cycles. Workflow:

- Post-Transfer Monitoring:

- Track pregnancy progress via obstetric care until delivery.

- Live Birth Certification:

- Criteria: Delivery of ≥1 living infant at ≥24 weeks gestation [2].

- Data Collection: Record gestational age, birth weight, and neonatal outcomes.

Machine Learning Prediction Models for Fertility Outcomes

Machine learning (ML) models leverage demographic, clinical, and laboratory variables to predict ART success. The table below outlines key predictors and ML applications for each fertility outcome.

Table 2: Machine Learning Models and Predictors for Fertility Outcomes

| Outcome | Key Predictors | ML Algorithms | Model Performance |
|---|---|---|---|
| Clinical Pregnancy | Female age (OR: 0.93), number of high-quality blastocysts (OR: 1.67), AMH level (OR: 1.03), blastocyst transfer (OR: 2.31), endometrial thickness on transfer day (OR: 1.10) [1]. | Random forest, binary logistic regression [1]. | Random forest identified 7 top predictors; logistic regression provided odds ratios (OR) with 95% CI [1]. |
| Live Birth | Maternal age, duration of infertility, basal FSH, progressive sperm motility, progesterone on HCG day, estradiol on HCG day, luteinizing hormone on HCG day [2]. | Random forest, XGBoost, LightGBM, logistic regression [2]. | AUROC: 0.674 (logistic regression), 0.671 (random forest); Brier score: 0.183 [2]. |
| Blastocyst Formation | Day 3 embryo morphology, maternal age, fertilization method [3]. | Predictive models using lab-environment data (e.g., incubator metrics) [4]. | Blastocyst euploidy rate unaffected by day 3 quality (42.6–43.8%) [3]. |
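
Table 2 reports discrimination as AUROC and calibration as the Brier score. The sketch below shows how both metrics might be computed with scikit-learn for a logistic regression model. It is illustrative only: the data are synthetic (generated with `make_classification`), the seven features are placeholders rather than the clinical predictors listed above, and the resulting numbers have no relation to the published values.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import brier_score_loss, roc_auc_score
from sklearn.model_selection import train_test_split

# Synthetic stand-in for a cycle-level dataset (assumption: 7 numeric predictors,
# roughly 27% positive outcomes). Not real patient data.
X, y = make_classification(
    n_samples=2000, n_features=7, n_informative=4,
    weights=[0.73, 0.27], random_state=0,
)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, stratify=y, random_state=0
)

model = LogisticRegression(max_iter=1000).fit(X_train, y_train)
p_test = model.predict_proba(X_test)[:, 1]  # predicted probability of the positive class

auroc = roc_auc_score(y_test, p_test)    # discrimination: 0.5 = chance, 1.0 = perfect
brier = brier_score_loss(y_test, p_test)  # mean squared error of probabilities; lower is better

# Reference Brier score from always predicting the training prevalence.
prevalence = y_train.mean()
brier_reference = brier_score_loss(y_test, np.full(len(y_test), prevalence))

print(f"AUROC: {auroc:.3f}")
print(f"Brier: {brier:.3f} (prevalence-only reference: {brier_reference:.3f})")
```

Reporting the Brier score next to a prevalence-only reference helps readers judge whether a value such as 0.183 reflects useful calibration or mostly the base rate.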

Diagram 3: ML Prediction Model Framework

The Scientist's Toolkit: Research Reagent Solutions

Table 3: Essential Reagents and Materials for Fertility Outcomes Research

| Item | Function | Application Example |
|---|---|---|
| Tri-Gas Incubators | Maintain physiological O₂ (5%), CO₂ (6%), and N₂ (89%) levels for optimal embryo culture [3]. | Blastocyst formation assays [3]. |
| Sequential Culture Media | Support embryo development from cleavage to blastocyst stage with stage-specific nutrients [3]. | Embryo culture to day 5/6 [3]. |
| Anti-Müllerian Hormone (AMH) ELISA Kits | Quantify serum AMH levels to assess ovarian reserve [1]. | Predicting clinical pregnancy (OR: 1.03) [1]. |
| Preimplantation Genetic Testing for Aneuploidy (PGT-A) | Screen blastocysts for chromosomal abnormalities to select euploid embryos [3]. | Live birth prediction; euploidy rate assessment (42.6–43.8%) [3]. |
| β-hCG Immunoassay Kits | Detect pregnancy via serum β-hCG levels 12–14 days post-transfer [1]. | Biochemical pregnancy confirmation [1]. |
| Embryo Grading Materials | Standardize blastocyst assessment using Gardner criteria (ICM, TE, expansion) [1]. | Classifying high-quality blastocysts [1]. |


Assisted Reproductive Technology (ART) represents a landmark achievement in treating infertility, a condition affecting an estimated 15% of couples globally [5]. Despite the growing utilization of ART, success rates have plateaued at approximately 30-40% per cycle, presenting a significant clinical challenge [6] [5]. The unpredictable nature of ART outcomes generates substantial emotional and financial burdens for patients, underscoring the critical need for reliable prognostic tools.

Traditional methods for predicting ART success have historically relied on clinicians' subjective assessments, often based primarily on patient age and historical clinic success rates [5]. However, the complex, multifactorial nature of human reproduction involves numerous interrelated variables, making accurate prediction a formidable task. Machine learning (ML), a subset of artificial intelligence, has emerged as a promising approach to enhance predictive accuracy by analyzing complex patterns in large datasets that may elude conventional statistical methods or human interpretation [7]. This application note explores the clinical challenges in ART prediction and details advanced ML methodologies to address them within rare fertility outcomes research.

Quantitative Landscape of ART Prediction Models

The performance of machine learning models in predicting ART success varies considerably based on algorithm selection, feature sets, and dataset characteristics. The table below summarizes the performance metrics of various ML algorithms as reported in recent studies, providing a comparative overview for researchers.

Table 1: Performance Metrics of Machine Learning Models for ART Outcome Prediction

| Study Reference | ML Algorithms Used | Dataset Size | Key Predictors | Best Performing Model | Performance (AUC/Accuracy) |
|---|---|---|---|---|---|
| Systematic Review (2025) [6] | SVM, RF, LR, KNN, ANN, GNB | 107 features across 27 studies | Female age (most common) | Support Vector Machine (SVM) | AUC: 0.997 (Best reported) |
| Wang et al. (2024) [2] | RF, XGBoost, LightGBM, LR | 11,486 couples | Maternal age, duration of infertility, basal FSH, progressive sperm motility, P on HCG day, E2 on HCG day, LH on HCG day | Logistic Regression | AUC: 0.674 (95% CI 0.627-0.720) |
| Shanghai Cohort (2025) [5] | RF, XGBoost, GBM, AdaBoost, LightGBM, ANN | 11,728 records | Female age, grades of transferred embryos, number of usable embryos, endometrial thickness | Random Forest | AUC: >0.8 |
| Advanced ML Paradigms (2024) [7] | LR, Gaussian NB, SVM, MLP, KNN, Ensemble Models | Not specified | Patient demographics, infertility factors, treatment protocols | Logit Boost | Accuracy: 96.35% |

The variation in model performance across studies highlights several critical challenges in ART prediction. First, feature heterogeneity is apparent, with different studies prioritizing distinct predictor combinations. Second, dataset size and quality significantly impact model robustness, with larger datasets generally yielding more reliable models. Third, algorithm selection plays a crucial role, with no single model consistently outperforming others across all datasets and contexts.

Key Experimental Protocols in ML-Driven ART Prediction

Data Collection and Preprocessing Protocol

Purpose: To systematically collect and prepare ART cycle data for predictive modeling.

Materials:

- Electronic Health Record (EHR) system with ART cycle data

- Data anonymization software

- Statistical software (R, Python, SPSS)

Procedure:

- Data Extraction: Retrieve comprehensive ART cycle data including:

- Demographic parameters (female and male age, BMI, ethnicity)

- Infertility factors (duration, type, cause)

- Treatment parameters (Gn dosage, stimulation protocol, insemination method)

- Laboratory values (basal FSH, E2, LH, progesterone on HCG day)

- Embryology data (number of oocytes, fertilization rate, embryo quality)

- Outcome measures (clinical pregnancy, live birth) [2] [5]

Data Cleaning:

Feature Engineering:

Data Partitioning:

Predictive Model Development Protocol

Purpose: To construct and validate ML models for ART success prediction.

Materials:

- ML platforms (R with caret, xgboost, bonsai packages; Python with PyTorch)

- High-performance computing resources

Procedure:

- Algorithm Selection: Choose multiple ML algorithms representing different approaches:

Hyperparameter Tuning:

- Implement grid search or random search approaches

- Use 5-fold cross-validation on training data

- Optimize for AUC (Area Under the ROC Curve) as primary metric [5]

Model Training:

- Train each algorithm on the training dataset

- Apply regularization techniques to prevent overfitting

- For ensemble methods, set appropriate number of estimators and learning rates

Model Validation:

Model Interpretation:

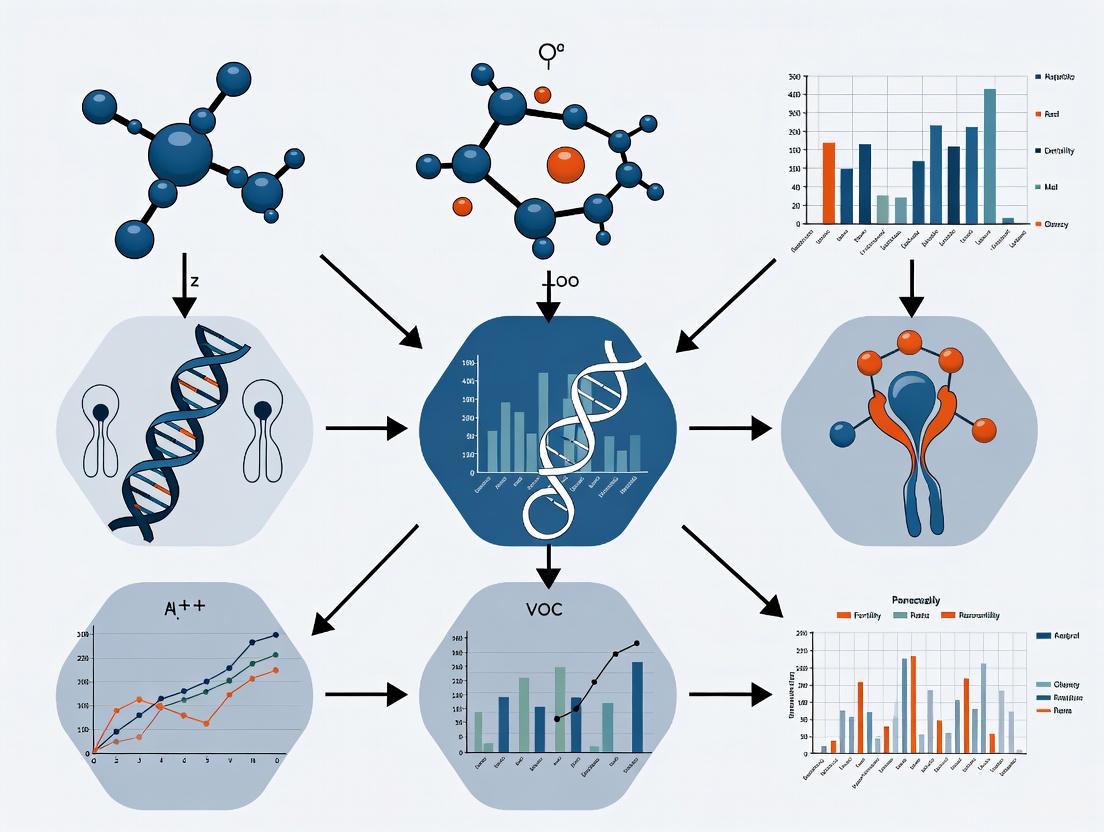

Visualization of ML Workflow for ART Prediction

The following diagram illustrates the comprehensive workflow for developing ML models in ART success prediction, from data collection to clinical application.

Diagram 1: ML Workflow for ART Outcome Prediction. This diagram illustrates the comprehensive process from data collection to clinical implementation, highlighting key challenges at each stage.

The Scientist's Toolkit: Research Reagent Solutions

Table 2: Essential Research Materials and Computational Tools for ML in ART Research

| Item Category | Specific Examples | Function in ART Prediction Research |
|---|---|---|
| Data Collection Tools | Electronic Health Record (EHR) systems, Laboratory Information Management Systems (LIMS), Clinical data abstraction forms | Standardized capture of demographic, clinical, and laboratory parameters essential for model development [2] [5] |
| Statistical Software | R (version 4.4.0+), Python (version 3.8+), SPSS (version 26+) | Data preprocessing, statistical analysis, and implementation of machine learning algorithms [2] [5] |
| Machine Learning Libraries | caret (R), xgboost (R/Python), bonsai (R), Scikit-learn (Python), PyTorch (Python) | Provides algorithms for classification, regression, and ensemble methods; enables model training and validation [5] |
| Feature Selection Tools | Random Forest importance scores, Multivariate logistic regression, Recursive feature elimination | Identifies most predictive variables from numerous potential features to create parsimonious models [2] |
| Model Validation Frameworks | k-fold cross-validation, Bootstrap methods, Train-test split | Assesses model performance and generalizability while mitigating overfitting [2] [5] |


Discussion and Future Directions

The clinical challenge of predicting ART success persists due to the complex, multifactorial nature of human reproduction and the limitations of traditional statistical approaches. Machine learning offers promising avenues to address these challenges by identifying complex, non-linear patterns in high-dimensional data. However, several methodological considerations must be addressed to advance the field.

First, feature standardization across studies is crucial. While female age consistently emerges as the most significant predictor across studies [6], the inclusion of additional features varies considerably. Developing a core outcome set for ART prediction research would enhance comparability and facilitate model generalizability. Second, model interpretability remains essential for clinical adoption. While complex ensemble methods and neural networks may achieve high accuracy, their "black box" nature can limit clinical utility. Techniques such as partial dependence plots and feature importance rankings help bridge this gap [5].

Future research should prioritize external validation of existing models across diverse populations and clinical settings. Most current models demonstrate robust performance in internal validation but lack verification in external cohorts [2] [5]. Additionally, temporal validation is necessary to assess model performance over time as clinical practices evolve. The integration of novel data types, including imaging data (embryo morphology), -omics data (genomics, proteomics), and time-series laboratory values, may further enhance predictive accuracy.

Finally, the development of user-friendly implementation tools, such as web-based calculators and clinical decision support systems integrated into electronic health records, will be essential for translating predictive models into routine clinical practice [5]. Such tools can facilitate personalized treatment planning, set realistic patient expectations, and ultimately improve the efficiency and success of ART treatments.

Core Machine Learning Concepts for Biomedical Researchers

The application of machine learning (ML) in biomedical research represents a paradigm shift from traditional statistical methods, offering powerful capabilities for identifying complex patterns in high-dimensional data. Within reproductive medicine, this is particularly crucial for researching rare fertility outcomes, where conventional approaches often struggle due to limited sample sizes and multifactorial determinants. ML predictive models can analyze extensive datasets to uncover subtle relationships that may escape human observation or standard analysis, potentially accelerating discoveries in assisted reproductive technology (ART) success optimization [8]. For researchers investigating rare fertility events—such as specific implantation failure patterns or unusual treatment responses—these methods provide an unprecedented opportunity to develop personalized prognostic tools and enhance clinical decision-making.

The inherent complexity of human reproduction, combined with the ethical and practical challenges of conducting large-scale clinical trials in fertility research, makes ML approaches particularly valuable. By leveraging existing clinical data, ML models can help identify key predictive features for outcomes like live birth following embryo transfer, enabling more targeted interventions and improved resource allocation in fertility treatments [9]. However, the implementation of ML in this sensitive domain requires rigorous methodology and a thorough understanding of both computational and clinical principles to ensure models are both technically sound and clinically relevant.

Core Machine Learning Concepts

Fundamental Terminology and Processes

Machine learning encompasses a diverse set of algorithms that can learn patterns from data without explicit programming. For biomedical researchers, understanding several key concepts is essential for appropriate model selection and interpretation:

Supervised Learning: The most common approach in biomedical prediction research, where models learn from labeled training data to make predictions on unseen data. This includes both classification (predicting categorical outcomes) and regression (predicting continuous values) tasks. In fertility research, this might involve predicting live birth (categorical) or estimating implantation potential (continuous) based on patient characteristics [8].

Unsupervised Learning: Algorithms that identify inherent patterns or groupings in data without pre-existing labels. These methods are particularly valuable for exploratory analysis, such as identifying novel patient subgroups with similar phenotypic characteristics that may correlate with rare fertility outcomes.

Overfitting: A critical challenge in ML where a model learns the training data too well, including its noise and random fluctuations, consequently performing poorly on new, unseen data. This risk is especially pronounced when working with rare outcomes where positive cases may be limited [8].

Data Leakage: Occurs when information from outside the training dataset is used to create the model, potentially leading to overly optimistic performance estimates that fail to generalize to real-world settings. This can happen when future information inadvertently influences model training, violating the temporal sequence of clinical events [8].
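
One common source of leakage is fitting a preprocessing step (scaling, imputation, feature selection) on the full dataset before cross-validation. The sketch below illustrates one way to avoid it with scikit-learn: wrapping preprocessing and model in a single pipeline so each fold refits the scaler on its own training portion. The data are synthetic, and the 10% positive rate is an assumption chosen to mimic an uncommon outcome.

```python
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import StratifiedKFold, cross_val_score
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

# Synthetic data with ~10% positives (assumption; not real clinical data).
X, y = make_classification(
    n_samples=500, n_features=10, weights=[0.9, 0.1], random_state=0
)

# The scaler lives inside the pipeline, so it is re-fitted on the training
# portion of every fold and never sees that fold's held-out rows.
pipeline = make_pipeline(StandardScaler(), LogisticRegression(max_iter=1000))

# Stratified folds keep the rare positive class represented in every fold.
cv = StratifiedKFold(n_splits=5, shuffle=True, random_state=0)
auc_per_fold = cross_val_score(pipeline, X, y, cv=cv, scoring="roc_auc")

print("AUC per fold:", auc_per_fold.round(3))
print("Mean AUC:", auc_per_fold.mean().round(3))
```

The same pattern extends to imputation and feature selection: any step that learns from data belongs inside the pipeline rather than before the split.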

Machine Learning Model Categories

Table 1: Common Machine Learning Algorithms in Biomedical Research

| Algorithm Category | Key Examples | Strengths | Weaknesses | Fertility Research Applications |
|---|---|---|---|---|
| Tree-Based Ensembles | Random Forest, XGBoost, GBM, LightGBM | High predictive accuracy, handles mixed data types, provides feature importance | Can become complex, computationally intensive with large datasets | Live birth prediction, embryo selection, treatment response forecasting [9] |
| Neural Networks | Artificial Neural Networks (ANN), Deep Learning | Highly flexible, models complex non-linear relationships | Requires substantial computational resources, prone to overfitting | Image analysis (embryo quality assessment), complex pattern recognition |
| Other Ensemble Methods | AdaBoost | Focuses on misclassified instances, straightforward implementation | May struggle with noisy data and outliers | Risk stratification, outcome classification |

Practical Protocols for Predictive Modeling in Fertility Research

Data Preparation and Preprocessing Protocol

Objective: To transform raw clinical data into a structured format suitable for machine learning analysis while preserving biological relevance and preventing data leakage.

Materials and Reagents:

- R statistical environment (version 4.4 or higher) or Python (version 3.8 or higher)

- Specialized packages: caret (R), missForest (R), xgboost (R/Python), bonsai (R) for LightGBM [9]

- Clinical dataset with appropriate ethical approvals

Step-by-Step Procedure:

- Data Collection and Ethical Compliance: Retrieve anonymized patient data from electronic health records with appropriate institutional review board approval. For fertility research, key data elements may include patient age, ovarian reserve markers, embryo quality metrics, and treatment protocols [9].

Cohort Definition: Apply inclusion and exclusion criteria specific to the research question. For example, in studying fresh embryo transfer outcomes, one might include patients undergoing cleavage-stage embryo transfer while excluding those using donor gametes or preimplantation genetic testing [9].

Missing Data Imputation: Address missing values using appropriate methods such as the non-parametric missForest algorithm, which is particularly effective for mixed-type data commonly encountered in clinical datasets [9].

Feature Selection: Implement a tiered approach combining statistical criteria (e.g., p < 0.05 in univariate analysis) and clinical expert validation to eliminate biologically irrelevant variables while retaining clinically meaningful predictors [9].

Data Partitioning: Split data into derivation (training) and validation sets using appropriate strategies such as random split, time-based split, or patient-based split to ensure independent model evaluation [8].
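
Of the partitioning strategies named in the last step, the patient-based split is the easiest to get wrong, because one patient can contribute several cycles. The sketch below shows one possible implementation using scikit-learn's `GroupShuffleSplit`. The `patient_id` array, feature matrix, and outcome vector are randomly generated placeholders, not fields from any cited dataset.

```python
import numpy as np
from sklearn.model_selection import GroupShuffleSplit

rng = np.random.default_rng(0)
n_cycles = 200

# Hypothetical cycle-level data: about 80 patients, some with multiple cycles.
patient_id = rng.integers(0, 80, size=n_cycles)
X = rng.normal(size=(n_cycles, 5))     # placeholder predictors
y = rng.integers(0, 2, size=n_cycles)  # placeholder binary outcome

# Hold out roughly 20% of patients (not 20% of cycles) for validation.
splitter = GroupShuffleSplit(n_splits=1, test_size=0.2, random_state=0)
train_idx, test_idx = next(splitter.split(X, y, groups=patient_id))

# No patient should appear on both sides of the split.
shared_patients = set(patient_id[train_idx]) & set(patient_id[test_idx])

print(f"training cycles: {len(train_idx)}, validation cycles: {len(test_idx)}")
print(f"patients shared between sets: {len(shared_patients)}")
```

A time-based split follows the same idea with calendar period as the separating variable: train on earlier cycles and validate on later ones.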

Model Training and Validation Protocol

Objective: To develop and validate robust predictive models using appropriate machine learning algorithms with rigorous evaluation protocols.

Step-by-Step Procedure:

- Algorithm Selection: Choose multiple ML algorithms based on the specific prediction task and dataset characteristics. Common choices include Random Forest, XGBoost, and Artificial Neural Networks [9].

Hyperparameter Tuning: Implement a grid search approach with 5-fold cross-validation to optimize model hyperparameters, using the area under the receiver operating characteristic curve (AUC) as the primary evaluation metric [9].

Model Training: Train each algorithm on the derivation dataset using the optimized hyperparameters, ensuring proper separation between training and validation data throughout the process.

Performance Evaluation: Assess model performance on the testing data using multiple metrics including AUC, accuracy, sensitivity, specificity, precision, recall, and F1-score to provide a comprehensive view of model capabilities [9] [8].

Validation and Generalizability Assessment: Conduct sensitivity analyses including subgroup analysis (stratified by key clinical variables) and perturbation analysis to assess model stability and generalizability across different patient populations [9].
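
The tuning and evaluation steps above can be sketched as follows. This is a minimal illustration with scikit-learn, not the cited study's pipeline (which used R packages): the data are synthetic, the random forest grid is deliberately tiny, and the parameter values are arbitrary assumptions.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.metrics import f1_score, precision_score, recall_score, roc_auc_score
from sklearn.model_selection import GridSearchCV, train_test_split

# Synthetic stand-in data (assumption: 12 numeric features, ~30% positives).
X, y = make_classification(
    n_samples=600, n_features=12, n_informative=5,
    weights=[0.7, 0.3], random_state=0,
)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, stratify=y, random_state=0
)

# Grid search with 5-fold cross-validation on the training data only,
# selecting the hyperparameters with the highest mean AUC.
param_grid = {"n_estimators": [50, 100], "max_depth": [3, None]}
search = GridSearchCV(
    RandomForestClassifier(random_state=0),
    param_grid, scoring="roc_auc", cv=5,
)
search.fit(X_train, y_train)

# Evaluate the refitted best model once on the held-out test data.
p_test = search.predict_proba(X_test)[:, 1]
y_pred = search.predict(X_test)
metrics = {
    "auc": roc_auc_score(y_test, p_test),
    "sensitivity": recall_score(y_test, y_pred),               # recall of positives
    "specificity": recall_score(y_test, y_pred, pos_label=0),  # recall of negatives
    "precision": precision_score(y_test, y_pred),
    "f1": f1_score(y_test, y_pred),
}

print("best hyperparameters:", search.best_params_)
for name, value in metrics.items():
    print(f"{name}: {value:.3f}")
```

The test set is touched only once, after tuning is complete, which is what keeps the reported metrics an honest estimate.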

Model Interpretation and Clinical Implementation Protocol

Objective: To extract clinically meaningful insights from trained models and facilitate their translation into practical tools for fertility research and clinical decision support.

Step-by-Step Procedure:

- Feature Importance Analysis: Identify the most influential predictors from the best-performing model using built-in importance metrics or permutation-based methods.

Partial Dependence Analysis: Generate partial dependence (PD) plots to visualize the marginal effect of key features on the predicted outcome, helping to elucidate complex relationships between predictors and fertility outcomes [9].

Interaction Effects Exploration: Construct 2D partial dependence plots to explore interaction effects among important features, revealing how combinations of factors jointly influence predicted outcomes.

Clinical Tool Development: For promising models, develop user-friendly interfaces such as web-based tools to assist clinicians in predicting outcomes and individualizing treatments based on patient-specific data [9].

Reporting and Documentation: Comprehensively document all aspects of the modeling process following established guidelines for transparent reporting of predictive models in biomedical research [8].

Visualization of Machine Learning Workflows

Figure 1: End-to-end machine learning workflow for fertility outcomes research, showing the progression from data collection through clinical implementation.

Figure 2: Model validation framework illustrating the process of algorithm comparison, hyperparameter tuning, and rigorous performance assessment essential for trustworthy fertility outcome predictions.

The Scientist's Toolkit: Research Reagent Solutions

Table 2: Essential Computational Tools for ML in Fertility Research

| Tool Category | Specific Solutions | Key Functionality | Application in Fertility Research |
|---|---|---|---|
| Programming Environments | R (v4.4+), Python (v3.8+) | Statistical computing, machine learning implementation | Primary platforms for data analysis and model development [9] |
| ML Packages & Libraries | caret, xgboost, bonsai, Torch | Algorithm implementation, hyperparameter tuning | Model training for outcome prediction [9] |
| Data Imputation Tools | missForest | Nonparametric missing value estimation | Handling missing clinical data in fertility datasets [9] |
| Model Interpretation Packages | PD, LD, AL profile generators | Visualization of feature effects and interactions | Understanding key predictors of ART success [9] |
| Web Development Frameworks | Shiny (R), Flask (Python) | Interactive tool development | Creating clinical decision support systems [9] |


Application to Rare Fertility Outcomes Research

The implementation of machine learning in rare fertility outcomes research requires special methodological considerations. When dealing with infrequent events, several strategies can enhance model performance and clinical utility:

Addressing Class Imbalance: Rare outcomes naturally create imbalanced datasets where positive cases are substantially outnumbered by negative cases. Techniques such as strategic sampling, algorithm weighting, or ensemble methods can help mitigate the bias toward the majority class that might otherwise dominate model training.

Feature Selection for Rare Outcomes: Identifying predictors specifically relevant to rare outcomes often requires hybrid approaches combining data-driven selection with deep clinical expertise. Domain knowledge becomes particularly valuable in recognizing biologically plausible relationships that may have strong predictive power despite limited occurrence in the dataset.

Multi-Model Validation: Given the challenges of predicting rare events, employing multiple algorithms with different inductive biases provides a more robust approach than reliance on a single method. The comparative analysis of Random Forest, XGBoost, and other algorithms in fertility research has demonstrated that performance can vary significantly across different outcome types and patient subgroups [9].

Clinical Integration Pathways: For rare outcome prediction models to impact clinical practice, they must be integrated into workflows in ways that complement clinical expertise. Web-based tools that provide individualized risk estimates based on model outputs can support shared decision-making without replacing clinical judgment [9].
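
Of the class-imbalance strategies mentioned above, algorithm weighting is the simplest to demonstrate. The sketch below compares an unweighted logistic regression with one using scikit-learn's `class_weight="balanced"` option. The 5% positive rate and all data are synthetic assumptions. The example shows the mechanism only and is not a recommendation for any particular outcome.

```python
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import precision_score, recall_score
from sklearn.model_selection import train_test_split

# Synthetic rare-outcome data: roughly 5% positives (assumption).
X, y = make_classification(
    n_samples=4000, n_features=8, n_informative=4,
    weights=[0.95, 0.05], random_state=0,
)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, stratify=y, random_state=0
)

# Unweighted model: every misclassified case costs the same.
plain = LogisticRegression(max_iter=1000).fit(X_train, y_train)

# Weighted model: errors on the rare class are up-weighted in inverse
# proportion to class frequency.
weighted = LogisticRegression(max_iter=1000, class_weight="balanced").fit(X_train, y_train)

recall_plain = recall_score(y_test, plain.predict(X_test))
recall_weighted = recall_score(y_test, weighted.predict(X_test))
precision_plain = precision_score(y_test, plain.predict(X_test), zero_division=0)
precision_weighted = precision_score(y_test, weighted.predict(X_test), zero_division=0)

print(f"recall    plain={recall_plain:.3f}  weighted={recall_weighted:.3f}")
print(f"precision plain={precision_plain:.3f}  weighted={precision_weighted:.3f}")
```

Weighting typically raises recall for the rare class at the cost of precision, and it also distorts predicted probabilities, so models intended for risk estimation generally need recalibration afterwards.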

By adhering to rigorous methodology and maintaining focus on clinical relevance, biomedical researchers can leverage machine learning to advance understanding of rare fertility outcomes despite the inherent challenges of limited data. The continuous refinement of these models through iterative development and validation promises to enhance their predictive accuracy and ultimately improve outcomes for patients facing complex fertility challenges.

Within the expanding field of assisted reproductive technology (ART), a paradigm shift is underway towards data-driven prognostication. Infertility affects an estimated 15% of couples globally, yet success rates for interventions like in vitro fertilization (IVF) have plateaued around 30% [9]. This clinical challenge has intensified the focus on developing robust prediction models to enhance outcomes and personalize treatment. Machine learning (ML) models have been reported to outperform traditional statistical methods for live birth prediction (LBP), and machine learning center-specific (MLCS) models have shown significant reductions in false positives and false negatives [10]. The clinical utility of these models hinges on identifying and accurately measuring key predictive features. This application note details the core predictive features (female age, embryo quality, and key hormonal and ultrasonographic markers) within the context of advanced predictive analytics for rare fertility outcomes research. We provide structured quantitative summaries and detailed experimental protocols to standardize their assessment for model integration.

Quantitative Data Synthesis of Key Predictive Features

The following tables consolidate quantitative evidence on the impact of key predictive features on fertility outcomes, as reported in recent clinical studies and ML model analyses.

Table 1: Impact of Female Age on Pregnancy and Live Birth Outcomes

| Age Group | Clinical Pregnancy Rate (CPR) | Ongoing Pregnancy Rate (OPR) | Live Birth Rate (LBR) | Key Statistical Findings |
|---|---|---|---|---|
| <30 years | 61.40% [11] | 54.21% [11] | Significantly higher [12] | Reference group for comparisons [12] |
| 30-34 years | Not Specified | Not Specified | Significantly higher than ≥35 group [12] | Implantation rate significantly lower than <30 group [12] |
| ≥35 years | Significantly lower [12] | Not Specified | Significantly lower [12] | CPR decreased by 10% per year after 34 (aOR 0.90, 95% CI 0.84–0.96) [11] |
| ≥40 years (Donor Oocytes) | Not Applicable | Not Applicable | Decreasing after age 40 [13] | Annual increase in implantation failure (RR=1.042) and pregnancy loss (RR=1.032) [13] |
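
A per-year adjusted odds ratio such as the aOR of 0.90 in Table 1 acts on the odds of pregnancy, not directly on the probability, and it compounds multiplicatively across years. The sketch below works through that arithmetic. It assumes the per-year effect is constant (as in a logistic model with a linear age term) and uses a hypothetical baseline probability of 0.50, which is not a figure from the cited studies.

```python
def compounded_odds_ratio(per_year_or: float, years: int) -> float:
    """Odds ratio accumulated over several years, assuming a constant per-year OR."""
    return per_year_or ** years


def apply_odds_ratio(baseline_prob: float, odds_ratio: float) -> float:
    """Convert a baseline probability to odds, scale by the OR, convert back."""
    if not 0.0 < baseline_prob < 1.0:
        raise ValueError("baseline_prob must lie strictly between 0 and 1")
    odds = baseline_prob / (1.0 - baseline_prob)
    new_odds = odds * odds_ratio
    return new_odds / (1.0 + new_odds)


per_year_or = 0.90       # aOR per additional year of age after 34, from Table 1 [11]
baseline_prob = 0.50     # hypothetical baseline probability (illustration only)

or_5_years = compounded_odds_ratio(per_year_or, years=5)
prob_after_5_years = apply_odds_ratio(baseline_prob, or_5_years)

print(f"odds ratio after 5 years: {or_5_years:.3f}")
print(f"probability after 5 years: {prob_after_5_years:.3f}")
```

Under these assumptions the five-year odds ratio is 0.9 raised to the fifth power, about 0.59, so a 10% yearly reduction in odds is not the same as a 10-percentage-point yearly drop in the pregnancy rate.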

Table 2: Impact of Embryo and Treatment Cycle Factors on Outcomes

| Predictive Feature | Outcome Measured | Effect Size & Statistical Significance | Study Details |

|---|---|---|---|

| Number of High-Quality Embryos Transferred | Clinical Pregnancy | Significantly higher in pregnancy group (t=5.753, P<0.0001) [12] | FET Cycles (N=1031) [12] |

| Number of Embryos Transferred | Clinical Pregnancy | Significantly higher in pregnancy group (t=4.092, P<0.0001) [12] | FET Cycles (N=1031) [12] |

| Blastocyst Transfer (vs. Cleavage) | Pregnancy Outcomes | "Significantly better," pronounced in older patients [11] | eSET Cycles (N=7089) [11] |

| Endometrial Thickness | Live Birth | Key predictive feature in ML model [9] | Fresh Embryo Transfer (N=11,728) [9] |

| Oil-Based Contrast (HSG) | Pregnancy Rate | Odds of pregnancy 51% higher vs. water-based (OR=1.51, 95% CI 1.23-1.86) [14] | Meta-analysis (N=4,739 patients) [14] |

Experimental Protocols for Predictive Feature Analysis

Protocol 1: Development and Validation of a Center-Specific ML Model for Live Birth Prediction

This protocol outlines the procedure for developing a machine learning model to predict live birth outcomes following fresh embryo transfer, as validated in a large clinical dataset [9].

1. Data Collection and Preprocessing

- Data Source: Collect retrospective ART cycle records from a single or multicenter database. A study by Liu et al. (2025) initiated this process with 51,047 records [9].

- Inclusion/Exclusion Criteria:

- Inclusion: Cycles involving fresh embryo transfer with fully tracked outcomes.

- Exclusion: Apply filters for female age >55 years, male age >60 years, use of donor sperm, and non-cleavage-stage transfers. This refined the dataset to 11,728 records for analysis [9].

- Feature Set: Extract a comprehensive set of pre-pregnancy features (e.g., 55-75 variables), including female age, embryo grades, number of usable embryos, endometrial thickness, and hormonal markers [9].

- Data Imputation: Handle missing values using a non-parametric method such as missForest, which is efficient for mixed-type data [9].

2. Model Training and Validation

- Algorithm Selection: Employ multiple machine learning algorithms to construct and compare prediction models. Common choices include:

- Random Forest (RF)

- eXtreme Gradient Boosting (XGBoost)

- Gradient Boosting Machines (GBM)

- Light Gradient Boosting Machine (LightGBM)

- Artificial Neural Network (ANN) [9]

- Hyperparameter Tuning: Use a grid search approach with 5-fold cross-validation on the training data to optimize hyperparameters, selecting those that yield the highest average Area Under the Curve (AUC) [9].

- Model Validation: Split the data into training and testing sets. Evaluate the final model's performance on the held-out test set using metrics including AUC, accuracy, sensitivity, specificity, precision, and F1 score [9].
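The test-set metrics listed above all derive from the four cells of a confusion matrix. The sketch below makes that arithmetic explicit using only the Python standard library. The function name `classification_metrics`, the 0.5 decision threshold, and the toy labels are illustrative assumptions, not values taken from [9].

```python
def classification_metrics(y_true, y_prob, threshold=0.5):
    """Confusion-matrix metrics for binary outcomes (1 = live birth, 0 = no live birth)."""
    y_pred = [1 if p >= threshold else 0 for p in y_prob]
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)

    def safe_div(numerator, denominator):
        # Return 0.0 instead of raising when a class is absent.
        return numerator / denominator if denominator else 0.0

    sensitivity = safe_div(tp, tp + fn)   # recall for the positive class
    specificity = safe_div(tn, tn + fp)
    precision = safe_div(tp, tp + fp)
    accuracy = safe_div(tp + tn, len(y_true))
    f1 = safe_div(2 * precision * sensitivity, precision + sensitivity)
    return {
        "accuracy": accuracy,
        "sensitivity": sensitivity,
        "specificity": specificity,
        "precision": precision,
        "f1": f1,
    }


# Hypothetical held-out labels and predicted probabilities.
toy_labels = [1, 1, 1, 0, 0, 0, 0, 0]
toy_probs = [0.9, 0.7, 0.3, 0.6, 0.2, 0.1, 0.4, 0.05]
toy_metrics = classification_metrics(toy_labels, toy_probs)
```

Because sensitivity, specificity, precision, and F1 all depend on the chosen threshold, they are usually reported alongside a threshold-free measure such as AUC.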

3. Model Interpretation and Deployment

- Feature Importance: Analyze the best-performing model (e.g., Random Forest) to identify the most influential predictive features. Key features often include female age, grades of transferred embryos, number of usable embryos, and endometrial thickness [9].

- Model Explanation: Utilize techniques like Partial Dependence (PD) plots, Accumulated Local (AL) profiles, and breakdown profiles to explain the model's predictions at both the dataset and individual patient levels [9].

- Clinical Tool Development: Develop a web-based tool to allow clinicians to input patient data and receive a personalized live birth probability, facilitating individualized treatment planning [9].
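A partial dependence profile, as mentioned above, can be approximated by forcing one feature to a fixed value for every patient and averaging the model's predictions. The following is a minimal sketch under stated assumptions: `toy_model` is a hypothetical stand-in for a fitted classifier, and the feature names and coefficients are invented for illustration only.

```python
def partial_dependence(predict, rows, feature, grid):
    """Average prediction when `feature` is set to each grid value for all rows."""
    curve = []
    for value in grid:
        modified = [{**row, feature: value} for row in rows]
        predictions = [predict(row) for row in modified]
        curve.append((value, sum(predictions) / len(predictions)))
    return curve


def toy_model(row):
    """Hypothetical live-birth probability model (not the model from [9])."""
    score = (
        0.6
        - 0.02 * (row["female_age"] - 30)
        + 0.01 * (row["endometrial_thickness_mm"] - 10)
    )
    return min(max(score, 0.0), 1.0)  # clamp to a valid probability


# Hypothetical patients.
patients = [
    {"female_age": 28, "endometrial_thickness_mm": 11},
    {"female_age": 35, "endometrial_thickness_mm": 9},
    {"female_age": 41, "endometrial_thickness_mm": 8},
]
pd_age = partial_dependence(toy_model, patients, "female_age", [25, 30, 35, 40])
```

Each entry of `pd_age` pairs an age with the dataset-level average prediction at that age, which is the quantity a PD plot displays.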

Protocol 2: Assessing the Impact of Female Age on Outcomes in Elective Single Embryo Transfer (eSET) Cycles

This protocol describes a retrospective cohort study design to elucidate the non-linear relationship between female age and pregnancy outcomes in a first eSET cycle [11].

1. Cohort Definition and Data Acquisition

- Study Population: Identify patients undergoing their first IVF/ICSI cycle with an elective single embryo transfer, defined as a transfer where supernumerary embryos are available for freezing [11].

- Exclusion Criteria: Exclude patients with chromosomal abnormalities, endocrine diseases, recurrent abortion, or those undergoing operative sperm extraction cycles [11].

- Data Extraction: Obtain de-identified data from the ART database, including female age, infertility diagnosis, stimulation protocol, embryo stage and quality, and outcomes.

2. Outcome Measures and Statistical Analysis

- Primary Outcomes:

- Clinical Pregnancy Rate (CPR): Confirmed by the detection of at least one gestational sac via ultrasound 4-5 weeks post-transfer.

- Ongoing Pregnancy Rate (OPR): Defined as a living intrauterine pregnancy lasting until the 12th week of gestation [11].

- Statistical Modeling:

- Use a Generalized Additive Model (GAM) to examine the dose-response correlation between female age as a continuous variable and the log-odds of CPR/OPR, allowing for non-linear relationships.

- Employ a logistic regression model to ascertain the correlation and calculate odds ratios (ORs) with 95% confidence intervals (CIs), adjusting for potential confounders such as embryo stage (cleavage vs. blastocyst) [11].

- Threshold Analysis: Identify the age at which CPR and OPR begin to decrease significantly. For example, one study found that for patients aged ≥34, the odds of clinical pregnancy decreased by 10% for each 1-year increase in age (aOR 0.90) [11].
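An adjusted odds ratio acts on the odds, not directly on the probability, so an aOR of 0.90 per year does not translate into a 10-percentage-point drop in CPR. The sketch below shows the conversion. The baseline probability of 0.50 is a hypothetical value chosen for illustration, and the calculation assumes the per-year odds ratio stays constant over the interval.

```python
def probability_after_years(p_baseline, odds_ratio_per_year, years):
    """Apply a constant per-year odds ratio to a baseline probability."""
    odds = p_baseline / (1.0 - p_baseline)      # probability -> odds
    odds *= odds_ratio_per_year ** years        # compound the odds ratio
    return odds / (1.0 + odds)                  # odds -> probability


# Hypothetical baseline CPR at the threshold age; aOR 0.90 is the value reported in [11].
baseline_cpr = 0.50
cpr_one_year_later = probability_after_years(baseline_cpr, 0.90, 1)
cpr_five_years_later = probability_after_years(baseline_cpr, 0.90, 5)
```

With this baseline, one additional year lowers the predicted probability from 0.50 to roughly 0.47, a smaller absolute change than the 10% reduction in odds might suggest.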

Protocol 3: Evaluation of Contrast Media in Hysterosalpingography (HSG) for Fertility Enhancement

This protocol is based on a systematic review and meta-analysis methodology to compare the therapeutic effects of oil-based versus water-based contrast media in HSG [14].

1. Literature Search and Study Selection

- Search Strategy: Execute searches in major electronic databases (e.g., PubMed, Web of Science, Scopus) using keywords related to "hysterosalpingography," "oil-based contrast," "water-based contrast," and "tubal flushing" until the current date of analysis.

- Eligibility Criteria:

- Inclusion: Include all primary Randomized Controlled Trials (RCTs) comparing oil-based versus water-based contrast media in women of childbearing age with infertility.

- Exclusion: Exclude non-RCTs, such as case reports, reviews, and studies without a comparison group or that do not evaluate fertility outcomes [14].

2. Data Extraction and Quality Assessment

- Outcome Extraction: From each included RCT, extract data on primary and secondary outcomes, including:

- Pregnancy rate

- Live birth rate

- Miscarriage rate

- Ectopic pregnancy rate

- Adverse effects (abdominal pain, vaginal bleeding, intravasation) [14]

- Risk of Bias Assessment: Assess the quality of each included RCT using the Cochrane risk of bias tool, evaluating domains like random sequence generation, allocation concealment, blinding of participants and personnel, and blinding of outcome assessment [14].

3. Statistical Synthesis

- Meta-Analysis: Perform statistical analysis using software like RevMan. For dichotomous outcomes (e.g., pregnancy rate), calculate pooled odds ratios (ORs) with 95% CIs using the Mantel-Haenszel method.

- Heterogeneity: Assess statistical heterogeneity among the studies using the I² statistic and the chi-square test (p-value < 0.1 considered significant). If substantial heterogeneity exists, explore sources and consider using a random-effects model or performing sensitivity analyses (e.g., leave-one-out method) [14].
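The pooling and heterogeneity steps above can be sketched in a few lines. Each study is a 2x2 table `(a, b, c, d)`: events and non-events in the oil-based arm, then events and non-events in the water-based arm. The counts are hypothetical. As a simplification, Cochran's Q is computed here around the inverse-variance pooled log odds ratio; dedicated software such as RevMan may differ in detail, and zero cells are not handled.

```python
import math


def mantel_haenszel_or(studies):
    """Mantel-Haenszel pooled odds ratio: sum(a*d/n) / sum(b*c/n)."""
    numerator = sum(a * d / (a + b + c + d) for a, b, c, d in studies)
    denominator = sum(b * c / (a + b + c + d) for a, b, c, d in studies)
    return numerator / denominator


def i_squared(studies):
    """I^2 (percent) from Cochran's Q using inverse-variance weights on log odds ratios."""
    log_ors = [math.log((a * d) / (b * c)) for a, b, c, d in studies]
    weights = [1.0 / (1 / a + 1 / b + 1 / c + 1 / d) for a, b, c, d in studies]
    pooled = sum(w * t for w, t in zip(weights, log_ors)) / sum(weights)
    q = sum(w * (t - pooled) ** 2 for w, t in zip(weights, log_ors))
    degrees_of_freedom = len(studies) - 1
    if q <= 0:
        return 0.0
    return max(0.0, (q - degrees_of_freedom) / q) * 100.0


# Hypothetical trials: (pregnant_oil, not_pregnant_oil, pregnant_water, not_pregnant_water)
toy_trials = [(30, 70, 20, 80), (45, 105, 33, 117), (12, 38, 9, 41)]
pooled_or = mantel_haenszel_or(toy_trials)
heterogeneity_pct = i_squared(toy_trials)
```

A high I² value would prompt the random-effects model or leave-one-out sensitivity analysis described in the protocol.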

Visualizations

Diagram Title: ML Model Workflow for Live Birth Prediction

Diagram Title: Key Predictive Features and Pathways to Outcome

The Scientist's Toolkit: Research Reagent Solutions

Table 3: Essential Materials and Analytical Tools for Fertility Prediction Research

| Item / Solution | Function / Application | Specific Example / Note |

|---|---|---|

| Oil-Based Contrast Media | Used in HSG for tubal patency evaluation and therapeutic flushing. | Ethiodized poppyseed oil (e.g., Lipiodol). Associated with significantly higher subsequent pregnancy rates [14] [15]. |

| Water-Based Contrast Media | Aqueous agent for diagnostic HSG. | Provides diagnostic images but may be less effective in enhancing fertility compared to oil-based agents [14] [15]. |

| Gonadotropins (Gn) | Stimulate follicular development during controlled ovarian stimulation. | Dosage is personalized to maximize oocyte yield while minimizing OHSS risk [11] [12]. |

| GnRH Agonist/Antagonist | Prevents premature luteinizing hormone (LH) surge during ovarian stimulation. | Agonist (e.g., Diphereline) or antagonist protocol used based on patient profile [11]. |

| Human Chorionic Gonadotropin (hCG) | Triggers final oocyte maturation. | Administered subcutaneously (e.g., 4,000-10,000 IU) when follicles reach optimal size [11] [12]. |

| Vitrification Kit | For cryopreservation of supernumerary embryos. | Essential for freeze-thaw embryo transfer (FET) cycles. Includes equilibration and vitrification solutions [12]. |

| R Software with Caret Package | Primary platform for statistical analysis and machine learning model development. | Used for data preprocessing, model training (RF, GBM, AdaBoost), and validation [9]. |

| Python with Torch | Platform for developing complex models like Artificial Neural Networks (ANN). | Used for implementing deep learning architectures in predictive modeling [9]. |

ML Algorithms in Action: Methodologies for Fertility Outcome Prediction

This application note provides a structured framework for the comparative analysis of supervised learning algorithms—Random Forest (RF), eXtreme Gradient Boosting (XGBoost), Support Vector Machine (SVM), and Artificial Neural Networks (ANN)—within the context of rare fertility outcomes research. We present standardized protocols for model development, performance assessment, and implementation, supported by quantitative performance data from recent fertility studies. The document aims to equip researchers and drug development professionals with practical tools to build robust, clinically applicable prediction models for outcomes such as live birth, missed abortion, and clinical pregnancy.

Predicting rare fertility outcomes, such as live birth or specific complications following Assisted Reproductive Technology (ART), presents a significant challenge in reproductive medicine. Traditional statistical methods often fall short in capturing the complex, non-linear relationships between multifaceted patient characteristics and these outcomes. Supervised machine learning (ML) offers a powerful alternative for constructing prognostic models. This document details a standardized protocol for comparing four prominent algorithms—RF, XGBoost, SVM, and ANN—to facilitate their effective application in predicting rare fertility endpoints, thereby supporting clinical decision-making and advancing personalized treatment strategies in reproductive health [9] [16].

Quantitative Performance Comparison in Fertility Research

The performance of ML algorithms can vary significantly based on the dataset, specific fertility outcome, and feature set. The following table summarizes the reported performance metrics of RF, XGBoost, SVM, and ANN across recent studies focused on ART outcomes.

Table 1: Comparative Performance of Supervised Learning Algorithms on Various Fertility Outcomes

| Fertility Outcome | Study/Context | Best Performing Algorithm(s) (Performance Metric) | Comparative Performance of Other Algorithms |

|---|---|---|---|

| Live Birth | Fresh embryo transfer (n=11,728); 55 features [9] | RF (AUC > 0.80) | XGBoost was second-best; GBM, AdaBoost, LightGBM, ANN were also tested. |

| Live Birth | IVF treatment (n=11,486); 7 key predictors [2] | Logistic Regression (AUC 0.674) & RF (AUC 0.671) | XGBoost and LightGBM were also constructed but were not top performers. |

| Live Birth | Prediction before IVF treatment [16] | RF (F1-score: 76.49%, AUC: 84.60%) | Models were also tested with and without feature selection. |

| Missed Abortion | IVF-ET patients (n=1,017) [17] | XGBoost (Training AUC: 0.877, Test AUC: 0.759) | Outperformed a traditional logistic regression model (Test AUC: 0.695). |

| Clinical Pregnancy | Embryo morphokinetics analysis [18] | RF (AUC: 0.70) | Used a supervised random forest algorithm on time-lapse microscopy data. |

| Fertility Preferences | Population survey in Nigeria (n=37,581) [19] | RF (Accuracy: 92%, AUC: 92%) | Outperformed Logistic Regression, SVM, K-Nearest Neighbors, Decision Tree, and XGBoost. |

Key Insights from Comparative Data

- Algorithm Dominance: Tree-based ensemble methods, particularly Random Forest and XGBoost, consistently rank among the top performers across diverse fertility prediction tasks, from clinical outcomes like live birth [9] to population-level analyses [19].

- Context Matters: The optimal algorithm is use-case dependent. For instance, while complex models like XGBoost excelled in predicting missed abortion [17], a simpler Logistic Regression model performed on par with Random Forest in a study focused on a limited set of seven key predictors for live birth [2].

- Performance Range: Areas Under the Curve (AUC) for these models in fertility research typically range from approximately 0.67 to over 0.80, and accuracy can exceed 90% for specific classification tasks [19], demonstrating the potential of ML to deliver clinically relevant predictive power.

Experimental Protocols for Model Development

Protocol 1: Data Preprocessing and Feature Engineering

Objective: To prepare a raw clinical dataset for robust model training by addressing data quality and enhancing predictive features.

Materials: Raw clinical data (e.g., from Electronic Health Records), computing environment (R or Python).

Procedure:

- Data Cleaning:

- Handle missing values using techniques like multiple imputation by chained equations (MICE) or the missForest algorithm for mixed-type data [9] [19].

- Address class imbalance in the outcome variable (e.g., using the Synthetic Minority Oversampling Technique (SMOTE)) to prevent model bias toward the majority class [19].

- Feature Engineering:

- Create new, potentially informative variables from existing ones. For example, generate interaction terms such as the "Average," "Summation," and "Difference" of biochemical markers like hCG MoM and PAPP-A MoM in prenatal screening [20].

- Recode continuous variables into categorical bins and group low-frequency categories in categorical variables [19].

- Feature Selection:

- Employ a multi-step approach to identify the most predictive features:

- Initial Screening: Use bivariate analysis (e.g., logistic regression) to assess individual feature associations with the outcome [19].

- Advanced Filtering: Apply Recursive Feature Elimination (RFE) to iteratively remove the least important features [19].

- Expert Validation: Combine data-driven criteria (e.g., p-value < 0.05 or top-ranked features by RF importance) with clinical expert validation to eliminate biologically irrelevant variables and retain clinically critical ones [9]. This ensures model parsimony and clinical relevance.
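The class-imbalance step in this protocol relies on SMOTE, whose core idea is to create synthetic minority-class samples by interpolating between a minority sample and one of its nearest minority neighbours. The sketch below illustrates that idea with the standard library only; it is not the reference implementation, and the function name, `k=3`, and the two-feature toy cases (age, endometrial thickness) are assumptions for illustration.

```python
import random


def smote_like_oversample(minority, n_synthetic, k=3, seed=0):
    """Generate synthetic minority samples by interpolating toward near neighbours."""
    rng = random.Random(seed)
    synthetic = []
    for _ in range(n_synthetic):
        base = rng.choice(minority)
        # The k nearest minority neighbours of the base point (excluding itself).
        neighbours = sorted(
            (p for p in minority if p is not base),
            key=lambda p: sum((x - y) ** 2 for x, y in zip(base, p)),
        )[:k]
        neighbour = rng.choice(neighbours)
        gap = rng.random()  # position along the segment base -> neighbour
        synthetic.append(tuple(b + gap * (n - b) for b, n in zip(base, neighbour)))
    return synthetic


# Hypothetical minority-class cases: (female age in years, endometrial thickness in mm).
minority_cases = [(38.0, 7.5), (41.0, 6.9), (36.0, 8.2), (43.0, 7.0)]
synthetic_cases = smote_like_oversample(minority_cases, n_synthetic=6)
```

Oversampling of this kind is normally applied to the training data only, so that validation and test sets keep the true outcome prevalence.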

Protocol 2: Model Training and Hyperparameter Tuning

Objective: To train the four candidate algorithms and optimize their hyperparameters to achieve maximum predictive performance.

Materials: Preprocessed dataset from Protocol 1, software libraries (e.g., scikit-learn, xgboost, caret in R).

Procedure:

- Data Splitting: Partition the preprocessed data into a training set (e.g., 70-80%) and a hold-out test set (e.g., 20-30%) [17].

- Model Training Setup:

- Initialize the four algorithms with a set of default or reasonable starting hyperparameters.

- Random Forest: Key parameters include number of trees (n_estimators), maximum tree depth (max_depth), and number of features considered for a split (max_features).

- XGBoost: Key parameters include learning rate (eta), maximum depth (max_depth), number of boosting rounds (n_estimators), and L1/L2 regularization terms (alpha, lambda) [21].

- Support Vector Machine: Key parameters are the kernel type (e.g., Radial Basis Function, linear) and the regularization parameter (C).

- Artificial Neural Network: Key parameters include the number of hidden layers and units, activation functions, learning rate, and dropout rate for regularization [9].

- Hyperparameter Tuning:

- Employ a grid search or random search approach [9].

- Use 5-fold or 10-fold cross-validation on the training set to evaluate each hyperparameter combination. This involves splitting the training data into k folds, training the model on k-1 folds, and validating on the remaining fold, repeating this process k times [2].

- Select the hyperparameter set that yields the highest average performance (e.g., AUC) across the k validation folds.

- Final Model Training: Retrain each algorithm on the entire training set using its respective optimized hyperparameters.
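The tuning loop described above (grid search scored by mean cross-validated AUC) can be sketched without any ML library so that each step is visible. Everything here is illustrative: a small k-nearest-neighbours scorer stands in for the four candidate algorithms, the folds are stratified so every validation fold contains both classes, and the data are randomly generated. In practice a library routine such as scikit-learn's `GridSearchCV` or caret's `train` would typically be used.

```python
import itertools
import random


def auc(y_true, scores):
    """Probability that a random positive outranks a random negative (ties count 0.5)."""
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def stratified_k_fold(y, k, seed=0):
    """Return k (train_idx, val_idx) pairs with each class dealt evenly across folds."""
    rng = random.Random(seed)
    folds = [[] for _ in range(k)]
    for label in (0, 1):
        members = [i for i, t in enumerate(y) if t == label]
        rng.shuffle(members)
        for j, i in enumerate(members):
            folds[j % k].append(i)
    all_idx = set(range(len(y)))
    return [(sorted(all_idx - set(fold)), sorted(fold)) for fold in folds]


def knn_scores(X_train, y_train, X_val, n_neighbors):
    """Stand-in model: score = fraction of positives among the nearest training points."""
    scores = []
    for x in X_val:
        nearest = sorted(
            range(len(X_train)),
            key=lambda i: sum((a - b) ** 2 for a, b in zip(x, X_train[i])),
        )[:n_neighbors]
        scores.append(sum(y_train[i] for i in nearest) / n_neighbors)
    return scores


def grid_search_cv(X, y, param_grid, k=5):
    """Evaluate every hyperparameter combination by mean AUC over k validation folds."""
    names = sorted(param_grid)
    results = {}
    for values in itertools.product(*(param_grid[name] for name in names)):
        params = dict(zip(names, values))
        fold_aucs = []
        for train_idx, val_idx in stratified_k_fold(y, k):
            scores = knn_scores(
                [X[i] for i in train_idx], [y[i] for i in train_idx],
                [X[i] for i in val_idx], **params,
            )
            fold_aucs.append(auc([y[i] for i in val_idx], scores))
        results[values] = sum(fold_aucs) / k
    best = max(results, key=results.get)
    return dict(zip(names, best)), results


# Synthetic two-feature data: positives are shifted on the first feature.
data_rng = random.Random(42)
y = [1] * 20 + [0] * 20
X = [(data_rng.gauss(1.5 if t else 0.0, 1.0), data_rng.gauss(0.0, 1.0)) for t in y]
best_params, cv_results = grid_search_cv(X, y, {"n_neighbors": [1, 5, 9]}, k=5)
```

After selection, the chosen configuration would be refit on the full training set, as the final step of the protocol describes.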

Protocol 3: Model Evaluation and Interpretation

Objective: To assess the generalizability and clinical utility of the trained models and interpret their predictions.

Materials: Trained models from Protocol 2, hold-out test set.

Procedure:

- Performance Evaluation:

- Apply the final models to the hold-out test set.

- Calculate a comprehensive set of metrics [9] [16]:

- Discrimination: Area Under the Receiver Operating Characteristic Curve (AUC).

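- Calibration: Brier Score, the mean squared difference between predicted probabilities and observed outcomes (closer to 0 is better; note that it reflects discrimination as well as calibration) [2].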

- Overall Performance: Accuracy, F1-score, Precision, Recall.

- Model Interpretation:

- Global Interpretability: Use permutation importance or Gini importance (for tree-based models) to identify which features had the most significant overall impact on the model's predictions [19].

- Local Interpretability: For specific predictions, use techniques like SHAP (SHapley Additive exPlanations) or Breakdown profiles to understand the contribution of each feature to an individual patient's risk score [9].

- Visualization: Examine Partial Dependence Plots (PDPs) or Accumulated Local (AL) plots to understand the marginal effect of a key feature (e.g., maternal age) on the predicted outcome [9].
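Two of the quantities named in this protocol, the Brier score and permutation importance, are simple enough to write out directly. In the sketch below, importance is measured as the average increase in Brier score after shuffling one feature. The step-function `toy_model`, the feature names, and the six patients are hypothetical and serve only to show the mechanics.

```python
import random


def brier_score(y_true, y_prob):
    """Mean squared difference between predicted probability and observed outcome."""
    return sum((p - t) ** 2 for t, p in zip(y_true, y_prob)) / len(y_true)


def permutation_importance(predict, rows, y_true, feature, n_repeats=20, seed=0):
    """Average increase in Brier score when one feature's values are shuffled."""
    rng = random.Random(seed)
    baseline = brier_score(y_true, [predict(r) for r in rows])
    increases = []
    for _ in range(n_repeats):
        shuffled = [r[feature] for r in rows]
        rng.shuffle(shuffled)
        permuted = [{**r, feature: v} for r, v in zip(rows, shuffled)]
        permuted_score = brier_score(y_true, [predict(r) for r in permuted])
        increases.append(permuted_score - baseline)
    return sum(increases) / n_repeats


def toy_model(row):
    """Hypothetical model that uses female age only and ignores BMI."""
    return 0.9 if row["female_age"] < 35 else 0.1


# Hypothetical test-set patients and outcomes (1 = live birth).
test_rows = [
    {"female_age": 26, "bmi": 21.0}, {"female_age": 29, "bmi": 27.5},
    {"female_age": 33, "bmi": 23.1}, {"female_age": 37, "bmi": 24.8},
    {"female_age": 41, "bmi": 22.4}, {"female_age": 44, "bmi": 29.0},
]
test_outcomes = [1, 1, 1, 0, 0, 0]
age_importance = permutation_importance(toy_model, test_rows, test_outcomes, "female_age")
bmi_importance = permutation_importance(toy_model, test_rows, test_outcomes, "bmi")
```

Here shuffling age degrades the predictions while shuffling BMI changes nothing, which is the pattern a global importance ranking is meant to expose.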

Visualization of the Model Development Workflow

The following diagram illustrates the end-to-end workflow for developing and validating a machine learning model for rare fertility outcomes, as outlined in the experimental protocols.

The Scientist's Toolkit: Essential Research Reagents & Solutions

This section catalogues critical data types and methodological components required for constructing robust fertility prediction models.

Table 2: Essential "Research Reagents" for Fertility Outcome Prediction Models

| Category | Item / Data Type | Function / Relevance in the Experiment | Example from Literature |

|---|---|---|---|

| Clinical Data | Maternal Age | Single most consistent predictor of ART success [2]. | Used in all cited studies; identified as a top feature [2] [9] [16]. |

| Clinical Data | Hormone Levels (FSH, AMH, LH, P, E2) | Assess ovarian reserve and endocrine status; key predictors of response and outcome [2] [9] [17]. | Basal FSH, E2/LH/P on HCG day were key for live birth model [2]. AMH was a selected feature [9]. |

| Clinical Data | Embryo Morphology/Grade | Assesses embryo viability for selection in fresh transfers [9]. | Grades of transferred embryos were a key predictive feature [9]. |

| Clinical Data | Endometrial Thickness | Assess uterine receptivity for embryo implantation [9]. | Identified as a significant feature for live birth prediction [9]. |

| Clinical Data | Semen Parameters | Evaluates male factor infertility (concentration, motility, morphology) [2] [16]. | Progressive sperm motility was a key predictor [2]. |

| Immunological Factors | Anticardiolipin Antibody (ACA), TPO-Ab | Identify immune dysregulations associated with pregnancy loss [17]. | Were independent risk factors for missed abortion [17]. |

| Methodology | Hyperparameter Optimization (HPO) | Systematically search for the best model parameters to maximize performance and avoid overfitting. | Grid search with cross-validation was used to optimize models [9]. |

| Methodology | Synthetic Data Generation (e.g., GPT-4o) | Addresses class imbalance for rare outcomes by generating synthetic minority-class samples [20]. | Used GPT-4o to generate synthetic samples for Down Syndrome risk prediction [20]. |

| Software & Libraries | R (caret, xgboost) / Python (scikit-learn) | Primary programming environments and libraries for data preprocessing, model building, and evaluation. | R (caret, xgboost, bonsai) and Python (Torch) were used for model development [9]. |

This application note establishes a standardized, end-to-end protocol for the comparative analysis of RF, XGBoost, SVM, and ANN in predicting rare fertility outcomes. The empirical evidence strongly supports the efficacy of ensemble tree-based methods, while emphasizing that the optimal model is context-dependent. By adhering to the detailed protocols for data preprocessing, rigorous model training with hyperparameter tuning, and comprehensive evaluation outlined herein, researchers can develop transparent, robust, and clinically actionable tools. These tools hold the potential to significantly advance the field of reproductive medicine by enabling personalized prognosis and improving success rates for patients undergoing fertility treatments.

Neural Networks and Support Vector Machines for Complex Pattern Recognition

The application of artificial intelligence (AI) in reproductive medicine represents a paradigm shift in the approach to diagnosing and treating infertility. Machine learning (ML) prediction models, particularly those designed for forecasting rare fertility outcomes, are increasingly critical in a field where treatment success hinges on complex, multifactorial processes. Among the plethora of ML algorithms, neural networks (NNs) and support vector machines (SVMs) have emerged as powerful tools for complex pattern recognition tasks. These models excel at identifying subtle, non-linear relationships within high-dimensional biomedical data, which often elude conventional statistical methods and human observation. Within in vitro fertilization (IVF), the ability to predict outcomes such as implantation, clinical pregnancy, or live birth can directly influence clinical decision-making, optimize laboratory processes, and ultimately improve patient success rates. This document provides detailed application notes and experimental protocols for employing NNs and SVMs in fertility research, framed within the context of a broader thesis on predicting rare fertility outcomes.

Performance Comparison of ML Models in Fertility Outcomes Prediction

Quantitative data from recent studies demonstrate the comparative performance of various ML models, including NNs and SVMs, in predicting critical fertility outcomes. The following tables summarize key performance metrics, providing a benchmark for researchers.

Table 1: Model Performance in Predicting Pregnancy and Live Birth Outcomes

| Study Focus | Best Performing Model(s) | Key Performance Metrics | Dataset Characteristics |

|---|---|---|---|

| General IVF/ICSI Success Prediction [22] | Random Forest (RF) | AUC: 0.97 | 10,036 patient records, 46 clinical features |

| General IVF/ICSI Success Prediction [22] | Neural Network (NN) | AUC: 0.95 | 10,036 patient records, 46 clinical features |

| Live Birth in Endometriosis Patients [23] | XGBoost | AUC (Test Set): 0.852 | 1,836 patients, 8 predictive features |

| Live Birth in Endometriosis Patients [23] | Random Forest (RF) | AUC (Test Set): 0.820 | 1,836 patients, 8 predictive features |

| Live Birth in Endometriosis Patients [23] | K-Nearest Neighbors (KNN) | AUC (Test Set): 0.748 | 1,836 patients, 8 predictive features |

| Embryo Implantation Success (AI-based selection) [24] | Pooled AI Models | Sensitivity: 0.69, Specificity: 0.62, AUC: 0.7 | Meta-analysis of multiple studies |

Table 2: Prevalence of Machine Learning Techniques in ART Success Prediction

| Machine Learning Technique | Frequency of Use | Reported Accuracy Range | Commonly Reported Metrics |

|---|---|---|---|

| Support Vector Machine (SVM) [6] | Most frequently applied (44.44% of studies) | Not Specified | AUC, Accuracy, Sensitivity |

| Random Forest (RF) [6] [22] [23] | Commonly applied | AUC up to 0.97 [22] | AUC, Accuracy, Sensitivity, Specificity |

| Neural Networks (NN) / Deep Learning [6] [22] | Commonly applied | AUC up to 0.95 [22] | AUC, Accuracy |

| Logistic Regression (LR) [6] [23] | Commonly applied | Not Specified | AUC, Sensitivity, Specificity |

| XGBoost [23] | Applied in recent studies | AUC up to 0.852 [23] | AUC, Calibration, Brier Score |

Experimental Protocols for Model Development and Validation

Protocol: Development of a Neural Network for Embryo Viability Scoring

This protocol outlines the steps for creating a convolutional neural network (CNN) to predict embryo implantation potential from time-lapse imaging data.

1. Data Acquisition and Preprocessing:

- Image Collection: Acquire a large dataset of time-lapse images or videos of embryos cultured to the blastocyst stage (Day 5). The dataset should be linked to known outcomes (e.g., implantation, no implantation). Sample sizes in recent studies exceed 1,000 embryos [24].

- Labeling: Annotate each embryo image sequence with a binary label (e.g., 1 for implantation success, 0 for failure). Ensure labeling is based on confirmed clinical outcomes.

- Preprocessing: Resize all images to a uniform pixel dimension (e.g., 224x224). Apply min-max normalization to scale pixel intensities to a [0, 1] range. This step ensures consistent scaling across variables and improves model convergence [25].

- Data Augmentation: Artificially expand the dataset by applying random, realistic transformations to the images, such as rotation, flipping, and minor brightness/contrast adjustments. This technique helps prevent overfitting.

- Data Partitioning: Randomly split the dataset into three subsets: Training Set (70%), Validation Set (15%), and Test Set (15%). The validation set is used for hyperparameter tuning, and the test set for the final, unbiased evaluation.
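Two of the preprocessing steps above, min-max normalization and the 70/15/15 partition, reduce to a few lines of arithmetic. The sketch below uses plain Python lists in place of image arrays. The function names and the fixed random seed are assumptions, and scaling by each image's own minimum and maximum is one possible reading of "min-max normalization"; dividing 8-bit intensities by 255 is a common alternative.

```python
import random


def min_max_scale(pixels):
    """Scale a flat list of pixel intensities to the [0, 1] range."""
    lo, hi = min(pixels), max(pixels)
    if hi == lo:
        return [0.0] * len(pixels)  # constant image: avoid division by zero
    return [(p - lo) / (hi - lo) for p in pixels]


def train_val_test_split(n_items, fractions=(0.70, 0.15, 0.15), seed=0):
    """Shuffle item indices and cut them into training, validation, and test subsets."""
    indices = list(range(n_items))
    random.Random(seed).shuffle(indices)
    n_train = int(round(fractions[0] * n_items))
    n_val = int(round(fractions[1] * n_items))
    train = indices[:n_train]
    val = indices[n_train:n_train + n_val]
    test = indices[n_train + n_val:]  # remainder goes to the test set
    return train, val, test


scaled_pixels = min_max_scale([0, 128, 255])
train_idx, val_idx, test_idx = train_val_test_split(1000)
```

The index lists can then be used to select embryo image sequences for each subset.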

2. Model Architecture and Training:

- Architecture Design: Implement a CNN architecture, such as:

- Input Layer: Accepts preprocessed images.

- Feature Extraction Backbone: Use a pre-trained network (e.g., ResNet-50) with transfer learning. Remove its final classification layer and freeze the weights of early layers to leverage pre-learned feature detectors.

- Custom Classifier: Append new, trainable layers: a Flatten layer, followed by two Dense (fully connected) layers with ReLU activation (e.g., 128 units, then 64 units), including Dropout layers (e.g., rate=0.5) to reduce overfitting.

- Output Layer: A final Dense layer with a single unit and sigmoid activation for binary classification.

- Compilation: Compile the model using the Adam optimizer and specify the binary cross-entropy loss function. Monitor the accuracy metric.

- Model Training: Train the model on the training set for a specified number of epochs (e.g., 50) using mini-batch gradient descent (e.g., batch size=32). Use the validation set to evaluate performance after each epoch and implement early stopping if validation performance plateaus.
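The early-stopping rule mentioned in the training step can be expressed independently of any deep learning framework. The sketch below halts once the validation loss has failed to improve for a set number of epochs. The `patience` and `min_delta` parameters and the loss values are hypothetical; frameworks such as Keras and PyTorch Lightning provide their own callbacks for this purpose.

```python
def early_stopping_epoch(val_losses, patience=5, min_delta=0.0):
    """Return (stop_epoch, best_epoch), both 1-indexed.

    Training stops at the first epoch where the validation loss has not
    improved by more than `min_delta` for `patience` consecutive epochs.
    """
    best_loss = float("inf")
    best_epoch = 0
    for epoch, loss in enumerate(val_losses, start=1):
        if loss < best_loss - min_delta:
            best_loss, best_epoch = loss, epoch
        elif epoch - best_epoch >= patience:
            return epoch, best_epoch
    return len(val_losses), best_epoch  # ran all epochs without triggering


# Hypothetical per-epoch validation losses.
toy_val_losses = [0.70, 0.62, 0.58, 0.57, 0.59, 0.60, 0.58, 0.61, 0.63]
stop_epoch, best_epoch = early_stopping_epoch(toy_val_losses, patience=3)
```

In this toy run the best loss occurs at epoch 4 and training would halt at epoch 7; the weights from the best epoch are the ones usually kept.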

3. Model Validation and Interpretation:

- Performance Evaluation: Use the held-out test set to calculate final performance metrics, including Area Under the Curve (AUC), Accuracy, Sensitivity, and Specificity [24] [6].

- Explainability: Apply explainable AI techniques like SHapley Additive exPlanations (SHAP) to interpret the model's predictions. This helps identify which morphological features in the embryo images (e.g., cell symmetry, fragmentation) were most influential in the viability score [23].

Protocol: Building an SVM for Predicting Live Birth from Clinical Data

This protocol details the use of an SVM to predict live birth outcomes using structured clinical and demographic data from patients prior to embryo transfer.

1. Feature Engineering and Dataset Preparation:

- Feature Selection: From the patient's electronic health records (EHR), identify and extract relevant predictive features. Studies have shown the importance of female age, anti-Müllerian hormone (AMH), antral follicle count (AFC), infertility duration, body mass index (BMI), and previous IVF cycle history [6] [23]. Use algorithms like Least Absolute Shrinkage and Selection Operator (LASSO) or Recursive Feature Elimination (RFE) to select the most non-redundant, predictive features [23].

- Data Cleaning: Handle missing values through imputation (e.g., mean/median for continuous variables, mode for categorical) or removal of instances with excessive missingness. Address class imbalance in the outcome variable (e.g., more failures than live births) using techniques like SMOTE (Synthetic Minority Over-sampling Technique).

- Data Scaling: Standardize all continuous features by removing the mean and scaling to unit variance. This is a critical step for SVMs, as they are sensitive to the scale of the data.

- Data Splitting: Partition the data into Training (70%), Validation (15%), and Test (15%) sets, ensuring stratification to maintain the same proportion of outcomes in each set.
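The scaling step above is easiest to get right when the mean and standard deviation are estimated on the training set and then reused unchanged for validation and test data. A minimal sketch follows; the function names and the ages are hypothetical, and the population standard deviation is used, which matches the convention of scikit-learn's `StandardScaler`.

```python
import statistics


def fit_standardizer(train_column):
    """Estimate mean and population standard deviation from training data only."""
    mean = statistics.fmean(train_column)
    std = statistics.pstdev(train_column)
    return mean, std or 1.0  # fall back to 1.0 for a constant feature


def standardize(column, mean, std):
    """Apply previously fitted parameters to any subset (train, validation, or test)."""
    return [(x - mean) / std for x in column]


# Hypothetical female ages (years) in the training and test subsets.
train_age = [28.0, 32.0, 36.0, 40.0]
test_age = [34.0, 44.0]

age_mean, age_std = fit_standardizer(train_age)
train_age_z = standardize(train_age, age_mean, age_std)
test_age_z = standardize(test_age, age_mean, age_std)  # reuses training parameters
```

Fitting the scaler on the full dataset before splitting would leak information from the test set into training, which is why the parameters come from `train_age` alone.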

2. Model Training with Hyperparameter Optimization:

- Algorithm Selection: Choose the Support Vector Classifier (SVC) from an ML library such as scikit-learn.

- Hyperparameter Search: Define a search space for critical hyperparameters:

- Kernel: ['linear', 'rbf' (radial basis function), 'poly']

- Regularization (C): A range of values on a logarithmic scale (e.g., [0.1, 1, 10, 100])

- Kernel Coefficient (gamma): For RBF kernel, use ['scale', 'auto'] or a range of values.

- Optimization Execution: Use a Grid Search or Randomized Search strategy across the defined hyperparameter space, employing the validation set to evaluate performance. The optimal configuration is the one that maximizes the AUC on the validation set [23] [26].
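The search space defined above can be enumerated explicitly before any model is trained, which makes the size of the grid easy to check. The sketch below follows the text in varying gamma only for the RBF kernel; the helper name `build_svc_search_space` is an assumption. The keys use scikit-learn's `SVC` parameter names (`kernel`, `C`, `gamma`), so each dictionary could be passed as `SVC(**config)`.

```python
import itertools

C_VALUES = [0.1, 1, 10, 100]          # logarithmic scale, as in the protocol
GAMMA_VALUES = ["scale", "auto"]      # only varied for the RBF kernel here


def build_svc_search_space():
    """Enumerate every kernel / C / gamma combination as a parameter dictionary."""
    configs = []
    for kernel in ("linear", "rbf", "poly"):
        gammas = GAMMA_VALUES if kernel == "rbf" else [None]
        for c, gamma in itertools.product(C_VALUES, gammas):
            config = {"kernel": kernel, "C": c}
            if gamma is not None:
                config["gamma"] = gamma
            configs.append(config)
    return configs


search_space = build_svc_search_space()
```

Each configuration would be fitted on the training set and scored by AUC on the validation set, with the highest-scoring one retained.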

3. Model Evaluation and Clinical Validation:

- Final Assessment: Retrain the model on the combined training and validation sets using the optimal hyperparameters. Evaluate its final performance on the untouched test set, reporting AUC, sensitivity, and specificity.

- Clinical Utility Assessment: Perform Decision Curve Analysis (DCA) to quantify the clinical net benefit of using the model across different probability thresholds [23].
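Decision Curve Analysis rests on one quantity, the net benefit at a given threshold probability, which weighs true positives against false positives according to the odds of the threshold. A minimal sketch is shown below, with the "treat all" strategy as the usual comparator; the labels and probabilities are hypothetical.

```python
def net_benefit(y_true, y_prob, threshold):
    """Net benefit = TP/n - (FP/n) * threshold / (1 - threshold)."""
    n = len(y_true)
    tp = sum(1 for t, p in zip(y_true, y_prob) if p >= threshold and t == 1)
    fp = sum(1 for t, p in zip(y_true, y_prob) if p >= threshold and t == 0)
    return tp / n - (fp / n) * threshold / (1.0 - threshold)


def net_benefit_treat_all(y_true, threshold):
    """Net benefit of classifying every patient as positive."""
    prevalence = sum(y_true) / len(y_true)
    return prevalence - (1.0 - prevalence) * threshold / (1.0 - threshold)


# Hypothetical test-set outcomes and model probabilities.
toy_outcomes = [1, 1, 0, 0]
toy_probabilities = [0.9, 0.6, 0.7, 0.2]

model_nb = net_benefit(toy_outcomes, toy_probabilities, threshold=0.5)
treat_all_nb = net_benefit_treat_all(toy_outcomes, threshold=0.5)
```

Repeating the calculation over a range of thresholds and plotting the model against the treat-all and treat-none (net benefit of zero) strategies yields the decision curve.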

Visualization of Workflows

The following diagrams, generated with Graphviz DOT language, illustrate the logical workflows for the experimental protocols described above.

Diagram Title: CNN for Embryo Viability Scoring

Diagram Title: SVM Clinical Prediction Workflow

The Scientist's Toolkit: Research Reagent Solutions

The following table details key software, algorithms, and data resources essential for conducting research in this field.

Table 3: Essential Research Tools for ML in Fertility Outcomes

| Tool / Reagent | Type | Function / Application | Examples / Notes |

|---|---|---|---|

| scikit-learn [6] | Software Library | Provides implementations of classic ML algorithms, including SVM, Random Forest, and data preprocessing tools. | Ideal for structured, tabular clinical data. Used for model development and hyperparameter tuning. |

| TensorFlow / PyTorch | Software Framework | Open-source libraries for building and training deep neural networks. | Essential for developing custom CNN architectures for image analysis (e.g., embryo time-lapse). |

| SHAP (SHapley Additive exPlanations) [23] | Interpretation Algorithm | Explains the output of any ML model by quantifying the contribution of each feature to a single prediction. | Critical for model transparency and identifying key clinical predictors like female age and AMH. |

| Hyperparameter Optimization Algorithms [26] | Methodology | Automated search strategies for finding the best model configuration. | Includes Grid Search and Random Search. Crucial for maximizing SVM and NN performance. |

| Structured Clinical Datasets [6] [22] [23] | Data | Retrospective data from IVF cycles including patient demographics, hormone levels, and treatment outcomes. | Must include key features like female age, AMH, AFC, and infertility duration. Sample sizes >1,000 records are typical. |

| Time-lapse Imaging (TLI) Datasets [24] | Data | Annotated image sequences of developing embryos linked to known implantation outcomes. | Used for training vision-based AI models like Life Whisperer and iDAScore. Requires significant data storage and processing power. |


The accurate prediction of rare fertility outcomes, such as live birth following in vitro fertilization (IVF), represents a significant challenge in reproductive medicine. The development of robust machine learning (ML) models for this purpose is often hampered by high-dimensional datasets containing a multitude of clinical, demographic, and laboratory parameters. Feature selection is a critical preprocessing step that mitigates the "curse of dimensionality," enhances model performance, improves computational efficiency, and increases the interpretability of predictive models by identifying the most clinically relevant predictors [27] [28]. Within the specific context of rare fertility outcomes research, where datasets can be complex and imbalanced, the strategic implementation of feature selection is paramount for building reliable and generalizable models. This document provides detailed application notes and protocols for two prominent categories of feature selection strategies—filter methods and genetic algorithms (GAs)—framed within the scope of a broader thesis on ML prediction models for rare fertility outcomes.

Comparative Analysis of Feature Selection Strategies

The table below summarizes the core characteristics, performance, and applications of filter methods and genetic algorithms as identified in recent fertility research.

Table 1: Comparative analysis of feature selection strategies for fertility outcome prediction

| Strategy | Mechanism | Key Advantages | Limitations | Reported Performance in Fertility Research |

|---|---|---|---|---|

| Filter Methods (e.g., Chi-squared, PCA, Variance Threshold (VT)) | Selects features based on statistical measures (e.g., correlation, variance) independent of the ML model [28]. | Computationally fast and efficient; Scalable to high-dimensional data; Less prone to overfitting [29]. | Ignores feature dependencies and model interaction; May select redundant features [27]. | PCA + LightGBM: 92.31% accuracy [30]; VT (Threshold=0.35): Used in hybrid pipeline [28]. |

| Genetic Algorithm (GA) | A wrapper method that uses evolutionary principles (selection, crossover, mutation) to find an optimal feature subset [27]. | Effective search of complex solution spaces; Captures feature interactions; Robust performance [27] [29]. | Computationally intensive; Requires a defined fitness function; Risk of overfitting without validation [29]. | GA + AdaBoost: 89.8% accuracy [27]; GA + Random Forest: 87.4% accuracy [27]. |

| Hybrid Approaches (Filter + GA) | A filter method performs initial feature reduction, followed by GA for refined optimization [29]. | Balances efficiency and performance; Reduces computational burden on GA; Leverages strengths of both methods. | Increased complexity in design and implementation. | Hybrid Filter-GA: Outperformed standalone methods on cancer classification [29]; HFS-based hybrid method: 79.5% accuracy, 0.72 AUC [28]. |

Experimental Protocols for Feature Selection in Fertility Research

Protocol 1: Genetic Algorithm-Based Feature Selection

This protocol outlines the steps for implementing a GA to identify pivotal features for predicting live birth outcomes in an IVF dataset, as demonstrated in recent studies [27].

1. Problem Definition & Initialization

- Objective: To select a subset of features from a pool of N total features (e.g., female age, AMH, endometrial thickness, sperm count) that maximizes the predictive accuracy for live birth.

- Chromosome Encoding: Represent each potential solution (individual) as a binary string of length N. A value of '1' indicates the feature is selected, and '0' indicates it is excluded.

- Initial Population: Generate a population of P random binary strings (e.g., P = 100-500 individuals).

2. Fitness Evaluation

- Fitness Function Definition: The performance of a feature subset is evaluated using a classifier (e.g., Random Forest, AdaBoost). The fitness score is the primary performance metric, typically the Area Under the ROC Curve (AUC) or Accuracy, estimated via cross-validation [27].

- Evaluation Process:

- For each individual in the population, subset the dataset to include only the features marked '1'.

- Train the chosen classifier on a training partition of the data.

- Calculate the fitness score (e.g., AUC) on a held-out validation set or via k-fold cross-validation.

- Assign this score as the individual's fitness.
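The evaluation process above can be sketched as a fitness function that scores one binary chromosome by cross-validated AUC. This is an illustrative sketch under stated assumptions: the helper name `subset_fitness`, the Random Forest settings, and the synthetic, imbalanced dataset standing in for IVF records are all hypothetical and not taken from the cited studies.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import StratifiedKFold, cross_val_score


def subset_fitness(mask, X, y, n_splits=5, seed=0):
    """Mean cross-validated AUC of a classifier restricted to the masked features."""
    mask = np.asarray(mask, dtype=bool)
    if not mask.any():
        return 0.0  # an empty feature subset cannot be scored
    clf = RandomForestClassifier(n_estimators=50, random_state=seed)
    cv = StratifiedKFold(n_splits=n_splits, shuffle=True, random_state=seed)
    scores = cross_val_score(clf, X[:, mask], y, cv=cv, scoring="roc_auc")
    return float(scores.mean())


# Synthetic stand-in for a tabular IVF dataset (roughly 20% positive class).
X, y = make_classification(n_samples=300, n_features=12, n_informative=4,
                           weights=[0.8, 0.2], random_state=0)

# One candidate chromosome: '1' keeps the feature, '0' drops it.
mask = [1, 0, 1, 1, 0, 0, 1, 0, 0, 1, 0, 0]
print(round(subset_fitness(mask, X, y), 3))
```

Stratified folds are used here so that each fold keeps a similar proportion of the rare positive outcome; returning 0.0 for an empty chromosome simply keeps such individuals from being selected.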

3. Evolutionary Operations

- Selection: Employ a selection strategy (e.g., tournament selection, roulette wheel) to choose parent individuals for reproduction, favoring those with higher fitness scores.

- Crossover: Apply a crossover operator (e.g., single-point crossover) to pairs of parents to create offspring. This combines feature subsets from two parents.

- Mutation: Apply a mutation operator with a low probability (e.g., 0.01-0.05), which flips random bits in the offspring's chromosome. This introduces new features or removes existing ones, maintaining population diversity.

4. Termination and Output

- Stopping Criteria: Repeat steps 2 and 3 for a predefined number of generations (e.g., 100-500) or until fitness convergence is observed.

- Output: The algorithm returns the individual with the highest fitness score from the final generation, representing the optimal feature subset. Subsequent analysis, such as SHAP (SHapley Additive exPlanations), can be performed on a model trained with this subset to interpret feature importance [31].
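Steps 1 to 4 can be put together in a compact, dependency-free loop. The sketch below is one possible arrangement and not the configuration of the cited work: `run_ga`, its default parameters, and the toy fitness function (which rewards three made-up "informative" feature positions and penalizes the rest) are hypothetical. One deliberate deviation from the protocol text: the sketch returns the best individual seen in any generation, since the final generation is not guaranteed to contain it.

```python
import random


def run_ga(fitness, n_features, pop_size=30, generations=40,
           cx_prob=0.8, mut_prob=0.02, tournament_k=3, seed=0):
    """Binary-chromosome GA; returns (best_chromosome, best_fitness)."""
    rng = random.Random(seed)
    pop = [[rng.randint(0, 1) for _ in range(n_features)]
           for _ in range(pop_size)]
    best, best_fit = None, float("-inf")

    for gen in range(generations + 1):
        fits = [fitness(ind) for ind in pop]
        top = max(range(pop_size), key=lambda i: fits[i])
        if fits[top] > best_fit:
            best, best_fit = pop[top][:], fits[top]
        if gen == generations:
            break  # final population has been scored; stop breeding

        def tournament():
            # Pick the fittest of k randomly drawn individuals (returns a copy).
            contenders = rng.sample(range(pop_size), tournament_k)
            return pop[max(contenders, key=lambda i: fits[i])][:]

        children = []
        while len(children) < pop_size:
            a, b = tournament(), tournament()
            if rng.random() < cx_prob:  # single-point crossover
                cut = rng.randint(1, n_features - 1)
                a, b = a[:cut] + b[cut:], b[:cut] + a[cut:]
            for child in (a, b):  # bit-flip mutation, applied per gene
                children.append([1 - g if rng.random() < mut_prob else g
                                 for g in child])
        pop = children[:pop_size]

    return best, best_fit


INFORMATIVE = {0, 3, 7}  # made-up positions of "true" predictors


def toy_fitness(chrom):
    hits = sum(chrom[i] for i in INFORMATIVE)
    extras = sum(chrom) - hits
    return hits - 0.1 * extras  # reward signal, penalize subset size


best, score = run_ga(toy_fitness, n_features=12)
print(best, score)
```

In practice the toy function would be replaced by a cross-validated classifier score, and the selected subset should be confirmed on data that the GA never saw, because repeated evaluation on the same folds can overfit the search itself.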

Protocol 2: Hybrid Filter and Genetic Algorithm Workflow

This protocol leverages the speed of filter methods and the power of GAs, creating an efficient and high-performing pipeline suitable for high-dimensional fertility datasets [28] [29].

1. Preprocessing and Initial Filtering

- Data Cleaning: Handle missing values and normalize features as required.

- Apply Filter Method: Use a fast, univariate filter method (e.g., Chi-squared, Information Gain, Variance Threshold) to score and rank all N features based on their statistical relationship with the outcome.

- Feature Space Reduction: Select the top K features from the ranked list (e.g., top 50-100 features, or features above a score threshold). This step drastically reduces the dimensionality of the dataset.
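The filtering step can be sketched with scikit-learn's `SelectKBest` and the chi-squared score. The dataset below is synthetic and the choice of K = 10 is arbitrary; both are assumptions for illustration only. Because `chi2` requires non-negative inputs, the features are first rescaled to [0, 1].

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.feature_selection import SelectKBest, chi2
from sklearn.preprocessing import MinMaxScaler

# Synthetic stand-in for a high-dimensional fertility dataset (N = 40 features).
X, y = make_classification(n_samples=400, n_features=40, n_informative=6,
                           random_state=0)

# chi2 only accepts non-negative values, so rescale each feature to [0, 1].
X_scaled = MinMaxScaler().fit_transform(X)

K = 10  # number of top-ranked features passed on to the GA
selector = SelectKBest(score_func=chi2, k=K).fit(X_scaled, y)

kept = selector.get_support(indices=True)  # original column indices retained
X_reduced = selector.transform(X_scaled)   # shape: (n_samples, K)
print(kept, X_reduced.shape)
```

For brevity the scaler and selector are fitted on the full dataset here; in a real study both would be fitted on the training partition only (for example inside a scikit-learn `Pipeline`) so that the held-out data do not influence which features are kept.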

2. Genetic Algorithm Optimization on Reduced Set

- Chromosome Encoding: Create a binary chromosome representation of length K, corresponding to the filtered feature set.

- Execute GA: Run the Genetic Algorithm as described in Protocol 1, but using only the K features from the filtering step. This significantly reduces the GA's search space and computational runtime.

- Final Subset Selection: The GA outputs an optimal subset of the K features, which is the final set of predictors for model building.

The Scientist's Toolkit: Research Reagent Solutions

Table 2: Essential computational tools and packages for implementing feature selection protocols

| Item Name | Function/Application | Implementation Example |

|---|---|---|

| Scikit-learn (Python) | Provides a comprehensive library for filter methods (e.g., SelectKBest, VarianceThreshold) and ML classifiers for fitness evaluation. | from sklearn.feature_selection import SelectKBest, chi2 |

| DEAP (Python) | A robust evolutionary computation framework for customizing Genetic Algorithms, including selection, crossover, and mutation operators. | from deap import base, creator, algorithms, tools |

| R caret Package | A unified interface for building ML models in R, encompassing various filter methods and algorithms for model training and tuning. | library(caret); ctrl <- trainControl(method="cv", number=5) |

| Hesitant Fuzzy Sets (HFS) | An advanced mathematical framework for decision-making under uncertainty, used to rank and combine results from multiple feature selection methods in hybrid pipelines [28]. | Custom implementation as per [28] for scoring and aggregating feature subsets from filter and embedded methods. |

| SHAP (SHapley Additive exPlanations) | A game-theoretic method for explaining the output of any ML model, crucial for interpreting the clinical relevance of features selected by GA or hybrid models [31]. | import shap; explainer = shap.TreeExplainer(model) |


Workflow Visualization

The following diagram illustrates the logical sequence and integration of the two primary protocols detailed in this document.

Diagram 1: Integrated workflow for feature selection strategies

Accurately predicting blastocyst formation is a critical challenge in reproductive medicine, directly influencing decisions regarding extended embryo culture. This case study explores the application of the Light Gradient Boosting Machine (LightGBM) algorithm to predict blastocyst yield in in vitro fertilization (IVF) cycles. Within the broader context of machine learning for rare fertility outcomes, we demonstrate how LightGBM can be leveraged to forecast the number of blastocysts as a quantitative outcome, moving beyond binary classification. The developed model achieved a coefficient of determination (R²) of 0.673-0.676 and a Mean Absolute Error (MAE) of 0.793-0.809, outperforming traditional linear regression models (R²: 0.587, MAE: 0.943) [32]. Furthermore, when tasked with stratifying outcomes into three clinically relevant categories (0, 1-2, and ≥3 blastocysts), the model demonstrated robust accuracy (0.675-0.71) [32]. This protocol details the end-to-end workflow for constructing, validating, and interpreting a LightGBM-based predictive model for blastocyst yield, providing researchers and clinicians with a tool to potentially optimize embryo selection and culture strategies.
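For readers less familiar with the reported metrics, R² is one minus the ratio of the residual sum of squares to the total sum of squares, and MAE is the mean absolute difference between observed and predicted counts. The sketch below computes both, plus the accuracy of the three-category stratification, on a small made-up set of observed and predicted blastocyst counts. The cut-points used to map a continuous prediction to a category (0.5 and 2.5) are an assumption of this sketch; the source does not state how predictions were binned.

```python
def r2_score(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean) ** 2 for t in y_true)
    return 1.0 - ss_res / ss_tot


def mean_absolute_error(y_true, y_pred):
    """Average absolute difference between observed and predicted counts."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def yield_category(value):
    """Map a (possibly fractional) blastocyst count to 0, 1-2, or >=3.

    The 0.5 and 2.5 cut-points are an assumption made for this sketch.
    """
    if value < 0.5:
        return "0"
    if value < 2.5:
        return "1-2"
    return ">=3"


def stratified_accuracy(y_true, y_pred):
    """Share of cycles whose predicted category matches the observed one."""
    hits = sum(yield_category(t) == yield_category(p)
               for t, p in zip(y_true, y_pred))
    return hits / len(y_true)


# Made-up observed and predicted blastocyst counts for eight cycles.
observed = [0, 1, 2, 3, 5, 0, 2, 4]
predicted = [0.4, 1.2, 1.6, 2.2, 4.1, 1.1, 2.4, 3.0]

print("R2:", r2_score(observed, predicted))
print("MAE:", mean_absolute_error(observed, predicted))
print("3-class accuracy:", stratified_accuracy(observed, predicted))
```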

Infertility affects a significant portion of the global population, with assisted reproductive technologies (ART), particularly in vitro fertilization (IVF), serving as a primary treatment [30] [5]. A pivotal stage in IVF is extended embryo culture to the blastocyst stage (day 5-6), which allows for better selection of viable embryos and is associated with higher implantation rates [32]. However, not all embryos survive this extended culture, and a cycle yielding no blastocysts represents a significant clinical and emotional setback for patients.

The prediction of blastocyst formation has traditionally been challenging. While previous research often focused on predicting the binary outcome of obtaining at least one blastocyst, the quantitative prediction of blastocyst yield provides a more nuanced and clinically valuable metric [32]. This capability allows for personalized decision-making, setting realistic expectations, and potentially altering treatment strategies for predicted poor responders.

Machine learning (ML) models, known for identifying complex, non-linear patterns in high-dimensional data, are increasingly applied in reproductive medicine [30] [6] [33]. Among these, LightGBM has emerged as a powerful gradient-boosting framework. It offers high computational efficiency, lower memory usage, and often superior accuracy, making it suitable for clinical datasets [30] [32] [5]. This case study situates the use of LightGBM for blastocyst yield prediction within the broader research objective of developing robust ML models for rare and critical fertility outcomes.

Materials and Methods

Data Source and Study Population

A retrospective analysis is typically performed on data from a single reproductive clinic or from a multi-center cohort.

- Data Origin: The dataset should comprise cycles from couples undergoing IVF or intracytoplasmic sperm injection (ICSI) treatment. For example, the model developed by Huo et al. was based on data from Nanfang Hospital [32].

- Inclusion/Exclusion Criteria: Standard criteria include women within a specific age range (e.g., 20-40 years) undergoing a fresh IVF/ICSI cycle with own gametes. Cycles with incomplete data, use of donor gametes, or preimplantation genetic testing are often excluded [32] [34].

- Ethical Approval: The study protocol must be approved by the relevant Institutional Review Board or Ethical Committee (e.g., approval number NFEC-2024-326 in the cited study [32]). For retrospective studies, the ethics committee often waives the requirement for informed consent.
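The inclusion and exclusion criteria above translate naturally into a cohort filter applied before any modeling. The sketch below shows one way to express them; every field name (`female_age`, `amh`, `n_oocytes`, `cycle_type`, `donor_gametes`, `pgt`) and the example records are hypothetical, and the actual variables and age limits should follow the study protocol.

```python
# Hypothetical fields that must be present for a cycle to count as complete.
REQUIRED_FIELDS = ("female_age", "amh", "n_oocytes")


def is_eligible(cycle, min_age=20, max_age=40):
    """Apply the inclusion/exclusion criteria to one cycle record (a dict)."""
    if any(cycle.get(field) is None for field in REQUIRED_FIELDS):
        return False  # incomplete data
    if not (min_age <= cycle["female_age"] <= max_age):
        return False  # outside the studied age range
    if cycle.get("cycle_type") != "fresh":
        return False  # only fresh IVF/ICSI cycles are included
    if cycle.get("donor_gametes") or cycle.get("pgt"):
        return False  # donor gametes or preimplantation genetic testing
    return True


# Made-up records for illustration.
cycles = [
    {"female_age": 32, "amh": 2.1, "n_oocytes": 9, "cycle_type": "fresh",
     "donor_gametes": False, "pgt": False},
    {"female_age": 43, "amh": 0.6, "n_oocytes": 3, "cycle_type": "fresh",
     "donor_gametes": False, "pgt": False},
    {"female_age": 35, "amh": None, "n_oocytes": 7, "cycle_type": "fresh",
     "donor_gametes": False, "pgt": False},
    {"female_age": 29, "amh": 3.4, "n_oocytes": 12, "cycle_type": "fresh",
     "donor_gametes": True, "pgt": False},
]

cohort = [c for c in cycles if is_eligible(c)]
print(len(cohort), "of", len(cycles), "cycles retained")
```

Recording how many cycles each criterion removes (rather than only the final count) makes it easier to report a cohort flow diagram alongside the model.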

The Scientist's Toolkit: Predictor Variables and Outcome Definitions