Navigating the Data Deluge: Advanced Strategies for Handling High-Dimensional Fertility Data

The integration of artificial intelligence (AI) and big data analytics is revolutionizing reproductive medicine, offering data-driven solutions to long-standing challenges in infertility treatment. This article provides a comprehensive framework for researchers, scientists, and drug development professionals to efficiently manage and interpret complex, high-dimensional fertility datasets. We explore the foundational sources of this data, from medical imaging and omics analysis to electronic health records. The review delves into cutting-edge methodological applications of machine learning for tasks such as embryo selection and treatment outcome prediction. Critical challenges in data quality, model generalization, and clinical integration are addressed, alongside rigorous validation frameworks and comparative analyses of AI tools. By synthesizing current progress with open challenges, this work aims to equip professionals with the knowledge to harness high-dimensional data for accelerating innovation in fertility research and clinical care.


The Landscape of High-Dimensional Data in Reproductive Medicine

Definitions and Characteristics

What is considered "high-dimensional data" in fertility research? High-dimensional data in fertility research refers to datasets where the number of features or variables (p) is much larger than the number of observations or samples (n). This encompasses various 'omics' technologies and complex clinical measurements that provide a comprehensive, multi-factorial view of reproductive health [1] [2]. Common data types include:

- Genomics: Variability in DNA sequence across the genome

- Epigenomics: Epigenetic modifications of DNA

- Transcriptomics: Gene expression profiling and messenger RNA (mRNA) levels

- Proteomics: Variability in composition and abundance of proteins

- Metabolomics: Variability in composition and abundance of metabolites

What are the main sources of high-dimensional data in reproductive medicine? The primary sources include [1] [3] [2]:

- Molecular profiling: Endometrial transcriptome patterns, proteomic analyses of uterine fluid, metabolic profiling

- Medical imaging: Time-lapse embryo imaging, sperm morphology analysis, endometrial structure characterization

- Clinical records: Structured and unstructured data from electronic health records (EHRs)

- Biological samples: Semen analysis, follicular fluid composition, endometrial tissue biopsies

Table: Characteristics of High-Dimensional Data Types in Fertility Research

| Data Type | Typical Dimensionality | Primary Applications in Fertility | Common Analysis Challenges |

|---|---|---|---|

| Genomic (GWAS) | 500,000 - 1,000,000 SNPs | Endometriosis risk loci identification, polygenic risk scores | Multiple testing correction, population stratification |

| Transcriptomic | 20,000-60,000 genes | Endometrial receptivity assessment, implantation failure | Batch effects, normalization, RNA quality issues |

| Proteomic | 1,000-10,000 proteins | Sperm quality assessment, embryo secretome analysis | Dynamic range, protein identification confidence |

| Metabolomic | 100-1,000 metabolites | Embryo viability prediction, oocyte quality assessment | Spectral alignment, compound identification |

Troubleshooting Common Experimental Issues

How do we handle missing data in high-dimensional fertility datasets? Multiple Imputation by Chained Equations (MICE) is a well-supported option for fertility datasets. In analyses of the Pune Maternal Nutrition Study (PMNS) dataset, which encompasses over 5,000 variables, MICE preserved temporal consistency in longitudinal data with 89% accuracy, compared with 74% for K-Nearest Neighbors (KNN) imputation [4]. Implementation protocol:

- Assess missingness pattern: Determine whether data is missing completely at random (MCAR), missing at random (MAR), or missing not at random (MNAR)

- Select variables for imputation: Include all variables that are part of the analytical model, even those without missing values

- Specify imputation models: Choose appropriate models for different variable types (logistic regression for binary, predictive mean matching for continuous)

- Generate multiple imputations: Typically create 5-20 complete datasets to account for imputation uncertainty

- Analyze and pool results: Perform analysis on each dataset and combine results using Rubin's rules
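The pooling step can be sketched as follows. This is a minimal illustration of Rubin's rules for a single scalar estimate, not the implementation used in [4]; the function name `pool_rubin` and the coefficient values are hypothetical.

```python
from statistics import mean, variance


def pool_rubin(estimates, variances):
    """Pool one scalar estimate across m imputed datasets with Rubin's rules."""
    m = len(estimates)
    if m < 2 or m != len(variances):
        raise ValueError("need matching estimates and variances from at least 2 imputations")
    pooled = mean(estimates)            # pooled point estimate
    within = mean(variances)            # average within-imputation variance
    between = variance(estimates)       # between-imputation variance (n - 1 denominator)
    total = within + (1 + 1 / m) * between
    return pooled, total


# Hypothetical coefficient and squared standard error from 5 imputed datasets.
coefs = [0.42, 0.45, 0.40, 0.44, 0.43]
ses_sq = [0.010, 0.011, 0.009, 0.010, 0.010]
pooled_coef, total_var = pool_rubin(coefs, ses_sq)
print(f"pooled estimate = {pooled_coef:.3f}, pooled SE = {total_var ** 0.5:.3f}")
```

The total variance is larger than the average within-imputation variance because it adds the disagreement between imputed datasets, which is how multiple imputation carries imputation uncertainty into the final standard error.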

What feature selection methods are most effective for high-dimensional fertility data? Boruta, a wrapper method built on Random Forest importance, and embedded methods such as LASSO regularization have been reported to identify the most relevant predictors in high-dimensional fertility data [4]. The choice of method depends on data characteristics:

- Filter methods: Use statistical tests (Pearson correlation, Mutual Information) for initial feature screening

- Wrapper methods: Evaluate feature subsets based on model performance (forward selection, backward elimination)

- Embedded methods: Leverage model-based feature importance (LASSO, tree-based importance)

- Hybrid methods: Combine multiple approaches based on ensemble learning
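As an illustration of the simplest category, a filter method can be sketched in a few lines. This is a toy example under stated assumptions: the feature names and values are hypothetical, and absolute Pearson correlation stands in for whichever statistic suits the data.

```python
from math import sqrt


def pearson(x, y):
    """Pearson correlation between two equal-length numeric sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = sqrt(sum((a - mx) ** 2 for a in x))
    sy = sqrt(sum((b - my) ** 2 for b in y))
    if sx == 0 or sy == 0:
        return 0.0  # a constant feature carries no linear signal
    return cov / (sx * sy)


def screen_features(features, outcome, top_k):
    """Rank features by |r| with the outcome and keep the top_k names."""
    scores = {name: abs(pearson(values, outcome)) for name, values in features.items()}
    return sorted(scores, key=scores.get, reverse=True)[:top_k]


# Toy data: hypothetical feature names and values, for illustration only.
features = {
    "maternal_age": [24, 29, 31, 35, 38, 41],
    "bmi": [22.0, 27.5, 21.0, 30.0, 24.5, 23.0],
    "amh": [4.1, 3.6, 3.0, 2.2, 1.5, 0.9],
    "constant_flag": [1, 1, 1, 1, 1, 1],
}
outcome = [12, 11, 9, 7, 5, 3]  # e.g. number of oocytes retrieved
selected = screen_features(features, outcome, top_k=2)
print(selected)
```

When screening feeds a predictive model, the ranking should be recomputed inside each cross-validation training fold; screening on the full dataset first leaks outcome information into the test folds.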

Why is data normalization critical for fertility 'omics' studies, and which methods are recommended? Normalization ensures that technical variations don't obscure biological signals, which is particularly crucial for endometrial studies where samples may be collected across different menstrual cycle phases and processing batches [1] [2]. Recommended approaches:

- For transcriptomic data: Quantile normalization, TPM (Transcripts Per Million) or FPKM (Fragments Per Kilobase of transcript per Million mapped reads) normalization

- For proteomic data: Variance-stabilizing normalization, quantile normalization

- For metabolomic data: Probabilistic quotient normalization, sample-specific scaling

- Batch effect correction: ComBat, Remove Unwanted Variation (RUV)
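To make one of these concrete, the TPM calculation can be sketched as below. The gene lengths are illustrative values rather than reference annotations, and the helper name `counts_to_tpm` is an assumption for this example.

```python
def counts_to_tpm(counts, lengths_bp):
    """Convert raw read counts for one sample to TPM.

    counts: gene -> raw read count; lengths_bp: gene -> effective length in bp.
    """
    # Step 1: length-normalize to reads per kilobase.
    rpk = {gene: counts[gene] / (lengths_bp[gene] / 1000) for gene in counts}
    # Step 2: scale so that the sample sums to one million.
    scale = sum(rpk.values()) / 1_000_000
    if scale == 0:
        raise ValueError("sample has no mapped reads")
    return {gene: value / scale for gene, value in rpk.items()}


# Toy counts; gene lengths are illustrative, not reference annotations.
counts = {"PAEP": 500, "LIF": 100, "HOXA10": 400}
lengths_bp = {"PAEP": 1000, "LIF": 4000, "HOXA10": 2000}
tpm = counts_to_tpm(counts, lengths_bp)
print({gene: round(value) for gene, value in tpm.items()})
```

TPM corrects for gene length and sequencing depth within a sample. It does not remove batch effects, so a between-sample step such as ComBat or RUV is still needed when samples come from different processing batches.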

Experimental Protocols and Workflows

Standardized Protocol for Endometrial Transcriptome Analysis

Sample Processing Workflow

Sample Collection and Preservation

- Time endometrial biopsies according to ovulation (LH surge) for receptivity studies

- Immediately preserve tissue in RNAlater or similar stabilization reagent

- Store at -80°C until processing

- Document precise cycle day and patient characteristics

RNA Extraction and Quality Control

- Use column-based extraction methods with DNase treatment

- Assess RNA integrity using Bioanalyzer or TapeStation (RIN > 7.0 required)

- Verify concentration using fluorometric methods (Qubit)

- Minimum requirement: 100 ng total RNA for library preparation

Library Preparation and Sequencing

- Use poly-A selection for mRNA enrichment

- Employ strand-specific library preparation protocols

- Sequence to minimum depth of 30 million reads per sample on Illumina platform

- Include spike-in controls for quality monitoring

Bioinformatic Processing

- Quality trimming with Trimmomatic or similar tool

- Alignment to reference genome (STAR or HISAT2)

- Gene-level quantification (featureCounts or HTSeq)

- Normalization and batch effect correction

Workflow for High-Dimensional Embryo Selection Data Integration

Embryo Selection Data Pipeline

Data Visualization Techniques for High-Dimensional Fertility Data

Which dimensionality reduction techniques are most effective for visualizing high-dimensional fertility data? The choice of technique depends on the specific analytical goal and data structure [5] [6]:

Table: Comparison of Dimensionality Reduction Techniques for Fertility Data

| Technique | Best For | Advantages | Limitations | Implementation in Fertility Research |

|---|---|---|---|---|

| PCA | Linear dimensionality reduction, data exploration | Fast, preserves global structure, maximizes variance | Limited for non-linear data, requires scaling | Initial data exploration, quality control, batch effect detection |

| t-SNE | Cluster visualization, identifying patient subgroups | Excellent for local structure, reveals complex relationships | Computationally intensive, non-deterministic, loses global structure | Identifying endometrial receptivity subtypes, patient stratification |

| UMAP | Large datasets, preserving local and global structure | Faster than t-SNE, better global structure preservation | Sensitive to hyperparameters, complex implementation | Visualizing developmental trajectories in embryo time-lapse data |

| Parallel Coordinates | Multi-parameter analysis, pattern recognition | Preserves all dimensions, shows correlations | Cluttered with many features, requires interaction | Multi-omics data integration, biomarker panel development |

Protocol for Visualizing High-Dimensional Fertility Data Using PCA
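A minimal sketch of a PCA workflow, assuming a samples-by-features matrix and NumPy. The simulated matrix, the batch shift, and the helper name `pca` are hypothetical; the steps (standardize, decompose, inspect explained variance and sample scores) follow the uses listed for PCA in the table above.

```python
import numpy as np


def pca(X, n_components=2):
    """PCA via SVD on standardized data. X has shape (samples, features)."""
    X = np.asarray(X, dtype=float)
    std = X.std(axis=0, ddof=1)
    std[std == 0] = 1.0                       # constant features stay at zero after centering
    Z = (X - X.mean(axis=0)) / std            # center and scale each feature
    U, S, Vt = np.linalg.svd(Z, full_matrices=False)
    scores = U[:, :n_components] * S[:n_components]    # sample coordinates
    explained = (S ** 2) / np.sum(S ** 2)               # variance ratio per component
    return scores, Vt[:n_components], explained[:n_components]


# Simulated p >> n matrix: 20 samples x 500 features with a hypothetical batch shift.
rng = np.random.default_rng(0)
X = rng.normal(size=(20, 500))
X[10:, :100] += 3.0                           # second "batch" shifted in 100 features
scores, loadings, explained = pca(X)
print(scores.shape, explained.round(3))
print("PC1 mean, batch 1 vs batch 2:", scores[:10, 0].mean(), scores[10:, 0].mean())
```

Plotting the first two columns of `scores` and coloring points by batch, cycle phase, or outcome is the usual next step; a component that tracks batch rather than biology suggests that batch correction is needed before downstream modeling.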

The Scientist's Toolkit: Essential Research Reagents and Solutions

Table: Key Research Reagent Solutions for High-Dimensional Fertility Studies

| Reagent/Category | Specific Product Examples | Primary Function | Technical Considerations |

|---|---|---|---|

| RNA Stabilization Reagents | RNAlater, PAXgene Tissue System | Preserves RNA integrity in endometrial biopsies | Immediate immersion required, optimal penetration in 4 mm thickness |

| Single-Cell Isolation Kits | 10x Genomics Chromium, Takara Living Cell | Enables single-cell transcriptomics of rare cell populations | Viability >90% critical, concentration optimization needed |

| Multiplex Immunoassay Panels | Luminex, Olink, MSD panels | Simultaneous quantification of multiple proteins in limited samples | Dynamic range verification, sample dilution optimization |

| Library Preparation Kits | Illumina TruSeq, SMARTer Stranded | Preparation of sequencing libraries from limited input RNA | Input amount critical, ribosomal depletion for FFPE samples |

| Antibody Panels for Cytometry | BD Biosciences, BioLegend panels | High-dimensional immunophenotyping of endometrial immune cells | Spectral overlap compensation, titration required |

| Mass Spectrometry Standards | SILAC, TMT, iTRAQ reagents | Quantitative proteomics of follicular fluid/uterine lavage | Labeling efficiency verification, multiplexing level optimization |

| Embryo Culture Media | G-TL, Continuous Single Culture | Metabolic profiling and time-lapse imaging compatibility | Batch-to-batch consistency, quality control essential |

| Cryopreservation Media | Vitrification kits, slow-freeze media | Preserves cellular integrity for multi-omics studies | Post-thaw viability assessment critical |


Machine Learning Implementation for Predictive Modeling

What machine learning approaches show promise for high-dimensional fertility data? Ensemble-based regression models, particularly Gradient Boosting and Random Forest, have proven highly effective in capturing non-linear relationships and complex maternal-fetal interactions within high-dimensional fertility data [4] [3]. Implementation framework:

Data Preparation and Splitting

- Stratified splitting to maintain class distribution (especially important for rare outcomes)

- Temporal splitting if dealing with time-series data (morphokinetics)

- External validation set from different clinic when possible
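Stratified splitting can be sketched without any external library. This is a simplified illustration with a hypothetical outcome vector and function name; established libraries offer equivalent, more thoroughly tested utilities.

```python
import random
from collections import defaultdict


def stratified_split(labels, test_fraction=0.3, seed=0):
    """Return (train_idx, test_idx) with each class split at the same ratio."""
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)

    rng = random.Random(seed)
    train_idx, test_idx = [], []
    for indices in by_class.values():
        rng.shuffle(indices)
        n_test = max(1, round(len(indices) * test_fraction))  # keep rare classes in the test set
        test_idx.extend(indices[:n_test])
        train_idx.extend(indices[n_test:])
    return sorted(train_idx), sorted(test_idx)


# Toy outcome vector: 1 = live birth (rare), 0 = no live birth.
labels = [1] * 10 + [0] * 90
train_idx, test_idx = stratified_split(labels, test_fraction=0.3)
print(len(train_idx), len(test_idx), sum(labels[i] for i in test_idx))
```

If one patient contributes several cycles, splitting by patient rather than by cycle avoids placing the same patient in both the training and test sets.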

Model Selection and Training

- Start with tree-based methods (Random Forest, XGBoost) for baseline performance

- Consider deep learning for very large datasets (>10,000 samples)

- Employ automated hyperparameter optimization (Bayesian optimization, grid search)

- Use nested cross-validation so that hyperparameter tuning does not inflate the performance estimate

Model Interpretation and Validation

- Calculate feature importance using SHAP or permutation importance

- Validate on external datasets when possible

- Perform clinical utility analysis (decision curve analysis)

- Document model performance across patient subgroups

Protocol for Developing a Birth Weight Prediction Model

Based on successful implementations in predicting fetal birth weight from high-dimensional maternal data [4]:

The field of reproductive medicine is undergoing a data-driven transformation. Fertility clinics now generate vast amounts of complex information, from time-lapse embryo imaging and genetic sequencing results to electronic health records and patient-reported outcomes. This data, characterized by its immense volume, diverse variety, and rapid velocity, holds the key to personalized treatment and improved success rates. However, it also presents significant challenges in management, integration, and analysis. This technical support center addresses the specific data-handling issues researchers and scientists encounter, providing troubleshooting guidance and methodological frameworks to navigate the complexities of high-dimensional fertility data efficiently.

Core Data Challenges & Troubleshooting FAQs

FAQ 1: How can we effectively structure and integrate unstructured clinical notes with structured lab data?

- The Problem: A significant portion of valuable clinical information, such as physician notes and medical history, exists in unstructured text format, which is difficult to integrate with structured data from lab systems and electronic health records (EHRs). This limits the ability to perform comprehensive analyses [7].

- The Solution: Implement Natural Language Processing (NLP) pipelines. These systems use a two-step approach:

- Named Entity Recognition (NER): Identifies and extracts key clinical concepts (e.g., specific diagnoses, medication names) from free-text notes [7].

- Relation Extraction (RE): Determines the relationships between the extracted entities, transforming unstructured text into a structured, analyzable format [7].

- Technical Consideration: Ensure the NLP models are trained or fine-tuned on domain-specific (reproductive medicine) corpora to accurately recognize specialized terminology.

FAQ 2: What is the best way to ensure data consistency and integrity when linking parent and child records?

- The Problem: Tracking the family history and linking a baby's future medical records, especially when they are born without an official ID, is a common challenge that can lead to data fragmentation and loss of crucial longitudinal information [7].

- The Solution: Establish a robust unified coding system. At birth, use the parents' IDs as the unique identifier for the newborn. This creates a critical link for tracking family history and ensures future records can be accurately associated [7]. All personally identifiable information must be stored separately from the clinical data and be accessible only to authorized personnel to maintain privacy.

FAQ 3: Our existing EHR is not designed for fertility workflows. How can we manage multi-party records without a complete system overhaul?

- The Problem: Standard EHRs often lack the flexibility to handle the complex relationships in fertility care, such as linking partners, donors, and surrogates to a single treatment cycle, forcing clinicians to rely on manual workarounds [8].

- The Solution: If a purpose-built fertility EHR is not an option, consider a complementary software platform. No-code or low-code platforms can be used to build custom applications for specific workflows, such as partner-linked records, consent form management, and patient communication portals. These can integrate with your core EHR, bridging the functionality gap without a full system replacement [8].

FAQ 4: What are the key barriers to adopting AI in a clinical research setting, and how can we address them?

- The Problem: Despite the promise of AI, many clinics and research teams face hurdles in implementation. Understanding these barriers is the first step to overcoming them [9].

- The Solution: The primary barriers, as identified by international fertility specialists, are summarized in the table below, along with mitigation strategies.

Table 1: Barriers to AI Adoption and Proposed Mitigation Strategies

| Barrier | Prevalence (2025 Survey) | Mitigation Strategy |

|---|---|---|

| High Implementation Cost | 38.01% | Explore modular AI solutions; prioritize tools with clear ROI (e.g., time-saving). |

| Lack of Staff Training | 33.92% | Invest in vendor training; allocate dedicated time for skill development. |

| Over-reliance on Technology | 59.06% (cited as a risk) | Frame AI as a decision-support tool, not a replacement for clinical expertise. |

| Ethical and Legal Concerns | Significant concern | Develop internal guidelines for AI use; choose validated, explainable AI models. |

Experimental Protocols & Data Analysis Workflows

This section provides detailed methodologies for key experiments and data analysis tasks common in fertility research.

Protocol: Developing a Machine Learning Model for Blastocyst Yield Prediction

This protocol is based on a study that developed models to quantitatively predict the number of blastocysts an IVF cycle will produce, a critical factor in deciding whether to pursue extended embryo culture [10].

1. Objective: To develop and validate a machine learning model that predicts blastocyst yield using cycle-level demographic and embryological features.

2. Dataset Preparation:

- Data Source: Retrospective data from 9,649 IVF/ICSI cycles.

- Outcome Variable: Number of usable blastocysts (categorized as 0, 1-2, or ≥3).

- Feature Set: The initial feature set should include:

- Female age

- Number of oocytes retrieved

- Number of 2PN embryos

- Number of embryos placed in extended culture

- Day 2 & 3 embryo morphology metrics (e.g., mean cell number, proportion of 8-cell embryos, fragmentation rate) [10].

- Data Splitting: Randomly split the dataset into training (e.g., 70%) and testing (e.g., 30%) subsets.

3. Model Training and Selection:

- Algorithms: Train multiple models, such as LightGBM, XGBoost, and Support Vector Machines (SVM).

- Feature Selection: Use Recursive Feature Elimination (RFE) to identify the optimal subset of features that maintains model performance, enhancing simplicity and interpretability.

- Performance Metrics: Evaluate models using R-squared (R²), Mean Absolute Error (MAE), and for categorical classification, accuracy and Kappa coefficient.

4. Validation and Interpretation:

- Internal Validation: Assess model performance on the held-out test set.

- Model Interpretation: Use feature importance analysis (e.g., LightGBM's built-in methods) and Individual Conditional Expectation (ICE) plots to understand how the top features influence the prediction [10].
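The categorical evaluation in step 3 can be sketched as follows. The blastocyst counts are hypothetical and the helper names are assumptions for this example; the three classes match the outcome definition in step 2.

```python
from collections import Counter


def to_category(n_blastocysts):
    """Map a blastocyst count to the three outcome classes used above."""
    if n_blastocysts == 0:
        return "0"
    return "1-2" if n_blastocysts <= 2 else ">=3"


def accuracy_and_kappa(y_true, y_pred):
    """Overall accuracy and Cohen's kappa for two label sequences."""
    n = len(y_true)
    observed = sum(t == p for t, p in zip(y_true, y_pred)) / n
    true_freq, pred_freq = Counter(y_true), Counter(y_pred)
    # Agreement expected by chance from the marginal class frequencies.
    expected = sum(true_freq[c] * pred_freq[c] for c in true_freq) / n ** 2
    if expected == 1:
        return observed, 1.0
    return observed, (observed - expected) / (1 - expected)


# Hypothetical observed and predicted blastocyst counts for 10 cycles.
observed_yield = [0, 0, 1, 2, 2, 3, 4, 5, 1, 0]
predicted_yield = [0, 1, 1, 2, 3, 3, 4, 2, 1, 0]
y_true = [to_category(n) for n in observed_yield]
y_pred = [to_category(n) for n in predicted_yield]
acc, kappa = accuracy_and_kappa(y_true, y_pred)
print(f"accuracy = {acc:.2f}, kappa = {kappa:.2f}")
```

Kappa is lower than accuracy here because it discounts the agreement that would occur by chance given the class frequencies, which matters when one class dominates.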

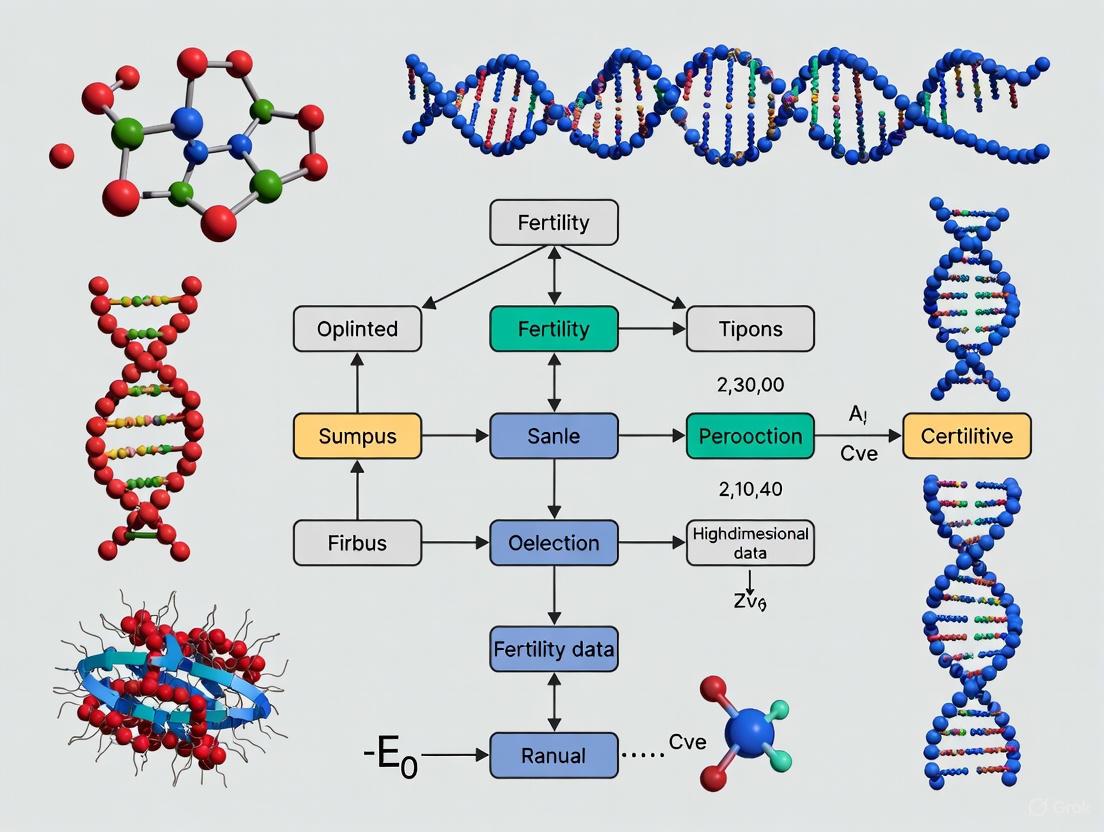

The following workflow diagram illustrates the key stages of this machine learning project.

Workflow: AI-Assisted Embryo Selection for Implantation Prediction

This workflow outlines the process for validating an AI tool designed to select embryos with the highest implantation potential, a major application in modern IVF labs [11].

1. Input Data Acquisition:

- Image Data: Collect high-resolution static images or time-lapse videos of blastocysts.

- Clinical Data (Optional): Integrate patient age and other clinical factors to enhance predictive accuracy, as done in systems like FiTTE [11].

2. AI Model Execution:

- Model Types: Typically a Convolutional Neural Network (CNN) for image analysis, potentially combined with other algorithms for clinical data.

- Output: The model generates a viability score or a classification (e.g., high/low implantation potential) for each embryo.

3. Validation and Clinical Integration:

- Diagnostic Assessment: Compare AI predictions against known clinical outcomes (implantation success/failure) to calculate standard diagnostic metrics.

- Performance Benchmarks: A recent meta-analysis found AI models for embryo selection have a pooled sensitivity of 0.69 and specificity of 0.62, with an Area Under the Curve (AUC) of 0.70 [11].

- Prospective Validation: Crucially, any AI model must be validated in a prospective trial before routine clinical use to ensure it does not reduce live birth rates compared to standard methods [12].

Table 2: Diagnostic Performance of AI in Embryo Selection (Meta-Analysis Results)

| Metric | Pooled Result |

|---|---|

| Sensitivity | 0.69 |

| Specificity | 0.62 |

| Positive Likelihood Ratio | 1.84 |

| Negative Likelihood Ratio | 0.50 |

| Area Under the Curve (AUC) | 0.70 |

Data sourced from a 2025 systematic review and meta-analysis [11].
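To show how these metrics relate, the sketch below derives likelihood ratios from the pooled sensitivity and specificity and applies them to a pre-test probability. The 40% baseline implantation probability is a hypothetical value chosen for illustration. The ratio computed this way (about 1.82) differs slightly from the pooled 1.84 in the table, since a pooled likelihood ratio is estimated across studies rather than derived from the pooled sensitivity and specificity.

```python
def likelihood_ratios(sensitivity, specificity):
    """Positive and negative likelihood ratios from sensitivity and specificity."""
    lr_pos = sensitivity / (1 - specificity)
    lr_neg = (1 - sensitivity) / specificity
    return lr_pos, lr_neg


def post_test_probability(pre_test_probability, likelihood_ratio):
    """Update a pre-test probability with a likelihood ratio via odds."""
    pre_odds = pre_test_probability / (1 - pre_test_probability)
    post_odds = pre_odds * likelihood_ratio
    return post_odds / (1 + post_odds)


lr_pos, lr_neg = likelihood_ratios(0.69, 0.62)    # pooled values from the table
# Hypothetical 40% baseline implantation probability, for illustration only.
p_if_high_score = post_test_probability(0.40, lr_pos)
p_if_low_score = post_test_probability(0.40, lr_neg)
print(f"LR+ = {lr_pos:.2f}, LR- = {lr_neg:.2f}")
print(f"post-test: {p_if_high_score:.2f} (high score), {p_if_low_score:.2f} (low score)")
```

Under this assumed baseline, a favorable AI score moves the probability from 0.40 to roughly 0.55 and an unfavorable one to 0.25, a modest shift that is consistent with the AUC of 0.70.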

The Scientist's Toolkit: Essential Reagents & Computational Solutions

Table 3: Key Research Reagent Solutions for Fertility Data Science

| Item | Function/Description |

|---|---|

| Time-Lapse Incubation System | Generates high-volume, high-velocity morphokinetic data on embryo development, serving as a primary data source for AI models [11]. |

| Preimplantation Genetic Testing (PGT) Kits | Provide genetic "ground truth" data (e.g., ploidy status) used for training and validating AI models that predict embryo viability from morphology alone [13]. |

| Specialized Fertility EHR/EMR | Purpose-built databases designed to handle the variety of fertility data, including cycle tracking, partner-linking, and donor information, which are challenging for generic systems [8]. |

| Natural Language Processing (NLP) Library | Software tools (e.g., in Python or R) used to structure unstructured clinical text, enabling the extraction of precise terms from narrative reports for analysis [7]. |

| Machine Learning Frameworks (e.g., LightGBM, XGBoost) | Code libraries used to build predictive models that capture complex, non-linear relationships in fertility data; in the cited study these models outperformed traditional statistical methods [10]. |


Data Management & AI Integration Architecture

A robust data architecture is foundational to addressing the challenges of volume, variety, and velocity. The following diagram outlines a logical workflow for managing fertility data and integrating AI tools, from raw data acquisition to clinical decision support.

Technical Support Center: AI for High-Dimensional Fertility Data Analysis

This support center provides troubleshooting guides and FAQs for researchers using artificial intelligence (AI) to analyze complex, high-dimensional fertility data. The content is designed to help you overcome common technical and methodological challenges in your experiments.

Frequently Asked Questions (FAQs)

FAQ 1: What are the primary AI techniques for analyzing high-dimensional fertility data, and how do I choose between them?

Your choice of AI technique should be guided by your specific research question and data type. The field commonly uses a combination of time-series forecasting, machine learning (ML), and explainable AI (XAI) methods [14] [15].

- Time-Series Forecasting (e.g., Prophet): Use this for predicting future fertility trends, such as annual birth totals, based on historical data. It is ideal for capturing long-term trends and seasonal patterns from temporal data [14].

- Machine Learning Models (e.g., XGBoost): Employ these for tasks like non-linear regression, classification (e.g., embryo selection), or identifying complex, non-linear relationships between numerous input variables (e.g., patient health records, omics data) and outcomes (e.g., pregnancy success) [14] [15].

- Explainable AI (XAI) methods (e.g., SHAP): Use SHapley Additive exPlanations (SHAP) to interpret the output of your ML models. It quantifies the contribution of each predictor variable (e.g., miscarriage totals, abortion access) to the final prediction, making the "black box" of AI transparent and providing actionable biological insights [14].
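The idea behind SHAP can be illustrated with exact Shapley values on a toy model. This sketch enumerates every feature coalition directly and is not the SHAP library's API; the model, feature names, and baseline values are hypothetical.

```python
from itertools import combinations
from math import factorial


def shapley_values(predict, instance, baseline):
    """Exact Shapley values for one prediction by enumerating feature coalitions.

    Features outside a coalition are replaced by their baseline value.
    """
    names = list(instance)
    n = len(names)

    def value(coalition):
        x = {f: (instance[f] if f in coalition else baseline[f]) for f in names}
        return predict(x)

    phi = {}
    for feature in names:
        others = [f for f in names if f != feature]
        total = 0.0
        for size in range(n):
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                total += weight * (value(set(subset) | {feature}) - value(set(subset)))
        phi[feature] = total
    return phi


# Hypothetical toy model with an interaction term; not a fitted model.
def predict(x):
    return 100 - 2.0 * x["miscarriages"] - 1.5 * x["abortions"] + 0.1 * x["miscarriages"] * x["abortions"]


instance = {"miscarriages": 10.0, "abortions": 20.0}
baseline = {"miscarriages": 5.0, "abortions": 10.0}
phi = shapley_values(predict, instance, baseline)
print(phi)
```

The contributions sum to the difference between the prediction for the instance and the prediction for the baseline. Exact enumeration grows exponentially with the number of features, which is why practical tools rely on model-specific or sampling-based approximations.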

FAQ 2: Our AI model for embryo selection performs well on our internal data but fails to generalize to external datasets. What could be the cause and solution?

This is a common challenge often stemming from limited model generalizability due to data bias or overfitting [15].

- Potential Causes:

- Data Bias: Your training data may not represent the broader population. This could be due to limited demographic diversity, specific clinic protocols, or inconsistent data collection methods [15].

- Overfitting: The model has learned patterns specific to your training set, including noise, rather than generalizable biological principles.

- Troubleshooting Steps:

- Data Diversification: Actively collaborate with other institutions to gather more diverse datasets. Utilize techniques like federated learning, which allows models to be trained across multiple institutions without sharing sensitive patient data, thus improving generalizability while preserving privacy [15].

- Algorithmic Validation: Rigorously validate your models using external validation cohorts from completely independent clinics. Implement cross-validation strategies that are stratified to ensure representation of key subgroups within your data [15].

- Multi-Modal Learning: Enhance your model's robustness by integrating multiple data modalities. Instead of relying solely on embryo images, combine them with structured electronic health records (EHR) and omics data to create a more comprehensive predictive system [15].
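The aggregation step at the heart of federated learning can be sketched as a sample-size-weighted average of locally trained parameters (federated averaging). The clinic names, coefficients, and sample counts below are hypothetical, and a real deployment would repeat this over many rounds with secure communication.

```python
def federated_average(site_weights, site_sizes):
    """Average per-site parameter vectors, weighted by each site's sample count."""
    total = sum(site_sizes)
    n_params = len(site_weights[0])
    return [
        sum(w[i] * n for w, n in zip(site_weights, site_sizes)) / total
        for i in range(n_params)
    ]


# Hypothetical model coefficients trained locally at three clinics.
site_weights = [
    [0.20, -1.10, 0.05],   # clinic A
    [0.30, -0.90, 0.00],   # clinic B
    [0.10, -1.30, 0.10],   # clinic C
]
site_sizes = [1000, 3000, 1000]             # training cycles per clinic
global_weights = federated_average(site_weights, site_sizes)
print([round(w, 3) for w in global_weights])
```

Only the parameter vectors and sample counts leave each site in this scheme; the patient-level records stay where they were collected.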

FAQ 3: What are the key regulatory and validation considerations when developing an AI tool for clinical fertility applications?

The transition of an AI tool from a research concept to a clinically validated application requires careful planning. Regulatory bodies like the FDA emphasize a risk-based framework [16].

- Regulatory Guidance: The FDA has published draft guidance on the use of AI in drug and biological product development, which provides a framework for evaluating AI models intended to support regulatory decisions. The core principle is to assess the model's impact on the final product's safety, efficacy, and quality [16].

- Validation Protocol: Your validation must go beyond standard performance metrics.

- Clinical Utility: Design studies to demonstrate that the AI tool improves clinical decision-making and patient outcomes (e.g., higher live birth rates) in a real-world setting [15].

- Transparency and Explainability: For regulatory approval and clinical trust, you must be able to explain your model's predictions. Integrating XAI techniques like SHAP is not just best practice but is becoming a regulatory expectation [14] [16].

- Audit Trails: Maintain rigorous audit trails for your AI models to ensure reproducibility and compliance. This is critical for regulated bioanalysis to prevent risks like data hallucination or manipulation [17].

Troubleshooting Guides

Issue: Poor Performance and Interpretability of a Predictive Model for Birth Totals

- Problem: A linear regression model yields high prediction error (RMSE) and offers no insight into which factors are driving fertility trends.

- Solution: Implement a hybrid Explainable AI (XAI) workflow that combines advanced forecasting with model interpretability [14].

- Experimental Protocol:

- Data Preparation: Acquire state-level aggregated data (e.g., annual totals for births, abortions, miscarriages, pregnancies from 1973-2020) from a reputable source like the Open Science Framework [14].

- Forecasting with Prophet:

- Format your data into a time series with a ds (date) column and a y (value, e.g., birth totals) column.

- Train a Prophet model to decompose the series into trend and seasonal components and forecast future values.

- Validate performance using Root Mean Squared Error (RMSE) and Mean Absolute Percentage Error (MAPE). Prophet has been shown to substantially outperform linear regression benchmarks (e.g., Prophet RMSE = 6,231.41 for California) [14].

- Predictor Analysis with XGBoost and SHAP:

- Use the historical data to train an XGBoost regression model, with birth totals as the target (y) and variables like abortion totals, miscarriage totals, and pregnancy rates as predictors (X).

- Calculate SHAP values for the trained model.

- Generate SHAP summary plots to visually identify which predictors (e.g., miscarriage totals, abortion access) have the largest impact on your model's predictions [14].
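The two validation metrics named in the protocol can be computed as shown below. The birth totals and forecasts are hypothetical numbers chosen for illustration and are not taken from [14].

```python
from math import sqrt


def rmse(actual, predicted):
    """Root mean squared error, in the units of the target."""
    return sqrt(sum((a - p) ** 2 for a, p in zip(actual, predicted)) / len(actual))


def mape(actual, predicted):
    """Mean absolute percentage error, in percent; actual values must be non-zero."""
    return 100 * sum(abs((a - p) / a) for a, p in zip(actual, predicted)) / len(actual)


# Hypothetical annual birth totals and forecasts for a held-out period.
actual = [500_000, 490_000, 480_000, 470_000]
forecast = [505_000, 485_000, 486_000, 466_000]
print(f"RMSE = {rmse(actual, forecast):,.2f}, MAPE = {mape(actual, forecast):.2f}%")
```

RMSE depends on the scale of the series, so it is not comparable between states with very different birth totals; MAPE is scale-free and better suited to cross-state comparison.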

Table 1: Performance Comparison of Forecasting Models on State-Level Birth Data (1973-2020)

| State | Model | RMSE | MAPE |

|---|---|---|---|

| California | Linear Regression (Baseline) | Not Reported | Not Reported |

| California | Prophet | 6,231.41 | 0.83% |

| Texas | Linear Regression (Baseline) | Not Reported | Not Reported |

| Texas | Prophet | 8,625.96 | 1.84% |

Source: Adapted from [14]. Prophet consistently demonstrated lower error metrics than the baseline.

Issue: Integrating Multi-Modal Data for Embryo Selection in IVF

- Problem: Subjective and labor-intensive manual embryo assessment leads to modest IVF success rates. You want to build an AI system that leverages different types of data to improve objectivity.

- Solution: Develop a multi-modal AI framework that can process and learn from structured data, images, and omics data simultaneously [15].

- Experimental Protocol:

- Data Modality Collection:

- Structured Data: Collect patient clinical records (age, hormone levels, medical history).

- Image Data: Acquire time-lapse microscopy images of embryo development.

- Omics Data: Procure molecular data such as metabolomic or proteomic profiles from spent embryo culture media.

- Model Architecture:

- Use a separate deep learning model (e.g., a Convolutional Neural Network) to extract features from the embryo images.

- Process structured and omics data with a standard ML model like XGBoost or a neural network.

- Create a fusion model that combines the features from all modalities to make a final, integrated prediction on embryo viability [15].

- Validation: Perform rigorous clinical validation to ensure the model generalizes across different patient populations and clinic environments.


The Scientist's Toolkit: Research Reagent Solutions

Table 2: Essential Components for AI-Driven Fertility Research

| Item / Reagent | Function in AI Research Context |

|---|---|

| Curated Clinical Datasets | Provides the structured, high-dimensional data (birth totals, abortion rates, miscarriage totals) required for training and validating time-series and ML models [14]. |

| Explainable AI (XAI) Library (e.g., SHAP) | A software tool used to interpret complex AI models, quantifying the contribution of each input feature to the model's prediction, thereby providing biological insights [14]. |

| Time-Series Forecasting Tool (e.g., Prophet) | Software specifically designed to model temporal data, decomposing trends and seasonality to project future fertility outcomes [14]. |

| Multi-Modal Learning Framework | A software architecture that enables the integration and joint analysis of diverse data types (e.g., clinical records, images, omics) to build more robust predictive systems [15]. |

| Federated Learning Platform | A secure computational platform that enables model training on data from multiple institutions without centralizing the data, addressing privacy concerns and improving model generalizability [15]. |

Machine Learning Methodologies for Fertility Data Analysis

Supervised Learning for Embryo Selection and Ploidy Prediction

Frequently Asked Questions (FAQs)

FAQ 1: What is the clinical value of predicting embryo ploidy status? Embryo ploidy status, referring to the chromosomal constitution of an embryo, is a critical determinant of in vitro fertilization (IVF) success. Euploid embryos (normal chromosomal count) typically lead to successful pregnancies, while aneuploid embryos (with chromosomal aberrations) are associated with miscarriage, failed pregnancies, and chromosomal disorders. Accurately predicting ploidy helps select the embryo with the highest potential for implantation and live birth. [18]

FAQ 2: How can supervised learning, specifically classification, be applied to embryo assessment? Supervised learning classification is ideal for predicting discrete categories in embryo assessment. The goal is to assign embryo data to predefined classes. In this context, common classification tasks include:

- Binary Classification: Distinguishing between two classes, such as Euploid (EUP) vs. Aneuploid (ANU) embryos, or High-Quality vs. Low-Quality embryos. [18] [19] [20]

- Multiclass Classification: Categorizing embryos into more than two groups, for example, differentiating between Euploid, Single Aneuploid (SA), and Complex Aneuploid (CxA) embryos. [18]

Algorithms like Convolutional Neural Networks (CNNs), Random Forests, and Support Vector Machines are frequently used for these tasks. [21] [22] [20]

FAQ 3: What types of data are used to train these supervised learning models? Training robust models requires diverse and high-dimensional data sources:

- Time-lapse imaging (TLI) sequences: These are videos compiled from images taken at regular intervals (e.g., every 0.3 hours) over 5 days of embryo development. They provide rich morphological and morphokinetic data. [18] [22]

- Morphological and morphokinetic parameters: Manual or model-derived scores for Inner Cell Mass (ICM), Trophectoderm (TE), and expansion. [18]

- Clinical and demographic data: Maternal age at oocyte retrieval is a highly important feature. Other data can include Body Mass Index (BMI), hormonal levels (e.g., FSH, AMH), and infertility diagnosis. [18] [21]

FAQ 4: What are the main limitations of current AI models for ploidy prediction? While promising, current AI models have several limitations:

- Not a replacement for PGT-A: Current models cannot replace preimplantation genetic testing for aneuploidy (PGT-A), which remains the gold standard. [18] [23]

- Performance variability: Model accuracy can be inconsistent across different clinics and imaging systems due to heterogeneity in data. [23]

- Data dependency: Models require large, high-quality, and well-annotated datasets for training, which can be difficult and expensive to acquire. [18] [22]

Troubleshooting Guides

Issue 1: Poor Model Performance and Overfitting

Problem: Your model performs well on training data but poorly on validation or test sets, indicating overfitting to the training data.

Solution:

- Increase Data Volume and Diversity: Use data augmentation techniques on time-lapse images (e.g., rotation, flipping, contrast adjustment) to artificially expand your dataset. Collaborate with multiple clinics to gather a more heterogeneous dataset. [22]

- Apply Regularization Techniques: Incorporate L1 (Lasso) or L2 (Ridge) regularization into your model to penalize complex models and prevent over-reliance on any single feature. [20]

- Utilize Cross-Validation: Employ k-fold cross-validation (e.g., 4-fold or 5-fold) during training to ensure your model's performance is consistent across different data splits and not dependent on a single train-test split. [18] [21]

- Simplify the Model Architecture: If using a deep learning model, reduce the number of layers or neurons. For traditional machine learning, try a less complex algorithm (e.g., switch from a complex CNN to a simpler Random Forest as a baseline). [19]
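As an illustration of the cross-validation advice above, the following sketch builds stratified k-fold index splits using only the standard library, so that each fold keeps roughly the same euploid/aneuploid ratio. The function name `stratified_kfold` and the label counts are hypothetical.

```python
import random
from collections import defaultdict

def stratified_kfold(labels, k=5, seed=0):
    """Yield (train_idx, val_idx) pairs with class proportions roughly preserved."""
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)
    folds = [[] for _ in range(k)]
    for indices in by_class.values():
        rng.shuffle(indices)
        for position, idx in enumerate(indices):
            folds[position % k].append(idx)   # deal indices round-robin
    for i in range(k):
        val_idx = sorted(folds[i])
        train_idx = sorted(j for f, fold in enumerate(folds) if f != i for j in fold)
        yield train_idx, val_idx

labels = ["EUP"] * 8 + ["ANU"] * 12   # hypothetical ploidy labels
splits = list(stratified_kfold(labels, k=4))
```

Embryos from the same patient are correlated, so in practice it may be advisable to assign whole patients to folds rather than individual embryos; this sketch does not do that.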

Issue 2: Handling High-Dimensional and Multimodal Fertility Data

Problem: Integrating different types of data (videos, categorical clinical data, continuous scores) into a single, efficient model is computationally challenging.

Solution:

- Employ Multitask Learning: As demonstrated by the BELA model, train a single model to perform multiple related tasks simultaneously. For example, a model can be designed to predict blastocyst score components (ICM, TE, expansion) and then use those predictions to infer ploidy status. This allows the model to learn generalized features from correlated tasks. [18]

- Use Effective Feature Extraction: For image and video data, use pre-trained CNNs (like ResNet) as spatial feature extractors. This converts high-dimensional images into lower-dimensional feature vectors that are easier for downstream models to process. [18]

- Leverage Hybrid Model Architectures: Combine different neural network architectures. For instance, use a CNN to extract features from individual time-lapse frames and a Recurrent Neural Network (RNN) like a BiLSTM to model the temporal sequence and relationships between these features over the embryo's development. [18]

- Perform Rigorous Feature Selection: Before training, use algorithms like XGBoost to rank the importance of clinical features. This helps reduce dimensionality by retaining only the most predictive features, improving model efficiency and performance. [21]

Issue 3: Interpreting Model Predictions and Ensuring Clinical Trust

Problem: The "black box" nature of complex models like CNNs makes it difficult for embryologists to understand and trust the AI's predictions.

Solution:

- Implement Model Interpretability Frameworks: Use tools like SHapley Additive exPlanations (SHAP) to explain the output of any machine learning model. SHAP can show which features (e.g., specific time points in a video or clinical variables) contributed most to a particular ploidy prediction, providing transparency. [18] [21]

- Visualize Model Attention: For deep learning models, generate visualizations that highlight the regions of the embryo images the model focused on when making a decision. This can help validate whether the model is using biologically plausible cues. [22]

- Create Clear Performance Visualizations: Use standard evaluation plots like ROC curves, precision-recall curves, and confusion matrices to communicate the model's strengths and weaknesses clearly to clinical stakeholders. [21] [24]

The following tables summarize quantitative findings from recent studies to aid in benchmarking your models.

Table 1: Performance of AI Models in Embryonic Ploidy Prediction (Meta-Analysis Data)

| Model Type / Study | Pooled AUC (95% CI) | Pooled Sensitivity | Pooled Specificity | Key Findings |

|---|---|---|---|---|

| AI Algorithms (Overall) [23] | 0.80 (0.76–0.83) | 0.71 (0.59–0.81) | 0.75 (0.69–0.80) | Meta-analysis of 12 studies (6879 embryos). Performance heterogeneity linked to validation type and model design. |

| BELA Model (with maternal age) [18] | 0.76 (EUP vs. ANU) | Not Specified | Not Specified | Uses multitask learning on time-lapse videos. Matches performance of models using manual embryologist scores. |

| BELA Model (with maternal age) [18] | 0.83 (EUP vs. CxA) | Not Specified | Not Specified | Shows higher performance in identifying complex aneuploidies. |

Table 2: Comparison of Model Performance on Live Birth Prediction (EMR Data)

| Model | Accuracy | AUC | Precision | Recall | Interpretability |

|---|---|---|---|---|---|

| Convolutional Neural Network (CNN) [21] | 0.9394 ± 0.0013 | 0.8899 ± 0.0032 | 0.9348 ± 0.0018 | 0.9993 ± 0.0012 | High (with SHAP) |

| Random Forest [21] | 0.9406 ± 0.0017 | 0.9734 ± 0.0012 | Not Specified | Not Specified | High |

| Decision Tree [21] | Lower than CNN/RF | Lower than CNN/RF | Not Specified | Not Specified | Very High |

Experimental Protocol: Implementing a Ploidy Prediction Model

This protocol outlines the key steps for developing a supervised learning model for embryo ploidy prediction, based on methodologies from recent literature. [18] [21] [23]

1. Data Collection and Curation

- Data Sources: Collect time-lapse videos from time-lapse incubators (e.g., Embryoscope). Ensure videos are captured at regular intervals (e.g., every 0.3 hours) over the 5-day development period.

- Ground Truth: Obtain PGT-A results for each embryo, classifying them as Euploid (EUP), Single Aneuploid (SA), or Complex Aneuploid (CxA).

- Clinical Data: Collect maternal age and, if available, other clinical features such as BMI, infertility diagnosis, and hormonal levels.

- Ethical Approval: Ensure the study protocol is approved by an Institutional Review Board (IRB).

2. Data Preprocessing

- Video Processing: Extract frames from time-lapse videos. Standardize the resolution and normalize pixel values.

- Feature Engineering: For non-image data, handle missing values (e.g., mean imputation for continuous variables) and normalize numerical features to a common scale (e.g., [-1, 1]).

- Data Splitting: Split the dataset into training (80%), validation (10%), and test (10%) sets, using stratified splitting to maintain the class distribution (ploidy status) in each set.
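The feature-engineering step for non-image data can be sketched as follows: mean imputation followed by min-max scaling to [-1, 1]. The helper names and the example ages are hypothetical.

```python
def mean_impute(values):
    """Replace None entries with the mean of the observed values."""
    observed = [v for v in values if v is not None]
    mean = sum(observed) / len(observed)
    return [mean if v is None else v for v in values]

def scale_to_unit_range(values):
    """Min-max scale to [-1, 1]; a constant column maps to 0."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]
    return [2 * (v - lo) / (hi - lo) - 1 for v in values]

maternal_age = [30, None, 40, 35]       # hypothetical values with one gap
imputed = mean_impute(maternal_age)     # the gap is filled with 35.0
scaled = scale_to_unit_range(imputed)   # [-1.0, 0.0, 1.0, 0.0]
```

To avoid leaking information, the mean, minimum, and maximum would normally be computed on the training split only and then reused for the validation and test splits.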

3. Model Training with a Multitask Architecture (e.g., BELA-inspired)

- Step 1 - Feature Extraction: Use a pre-trained CNN (e.g., ResNet) as a spatial feature extractor on the time-lapse video frames from a key developmental window (e.g., 96-112 hours post-insemination). This transforms each frame into a feature vector.

- Step 2 - Temporal Modeling: Feed the sequence of feature vectors into a Bidirectional LSTM (BiLSTM) network. This model learns the temporal dynamics and dependencies across the embryo's development.

- Step 3 - Multitask Learning: The BiLSTM outputs are used for two concurrent tasks:

- Task A (Blastocyst Score Prediction): Predict the morphological scores (ICM, TE, expansion) and the overall blastocyst score. This is an auxiliary task that provides a robust intermediate representation.

- Task B (Ploidy Prediction): Use the model-derived blastocyst score (MDBS) from Task A, concatenated with maternal age, as input to a final classifier (e.g., Logistic Regression layer) to predict the final ploidy status (EUP vs. ANU).

4. Model Validation and Interpretation

- Validation: Use k-fold cross-validation (e.g., 4-fold) on the training set to tune hyperparameters. Evaluate the final model on the held-out test set.

- Metrics: Report Area Under the ROC Curve (AUC), accuracy, precision, recall, and F1-score.

- Interpretation: Apply SHAP analysis to the trained model to identify which time points in the video and which clinical features were most influential for the predictions.
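The metrics listed in step 4 can be computed directly from predictions. The sketch below uses only the standard library; the labels and scores are made-up values for illustration, with 1 standing for one ploidy class and 0 for the other, and a 0.5 decision threshold chosen arbitrarily.

```python
def binary_metrics(y_true, y_pred):
    """Accuracy, precision, recall and F1 for labels in {0, 1}."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": (tp + tn) / len(pairs), "precision": precision,
            "recall": recall, "f1": f1}

def roc_auc(y_true, scores):
    """AUC as the chance that a random positive outscores a random negative."""
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    wins = sum(1.0 if p > n else (0.5 if p == n else 0.0) for p in pos for n in neg)
    return wins / (len(pos) * len(neg))

y_true = [1, 1, 1, 0, 0, 0]                 # hypothetical ground truth
scores = [0.9, 0.7, 0.4, 0.6, 0.3, 0.2]     # hypothetical model outputs
y_pred = [1 if s >= 0.5 else 0 for s in scores]
metrics = binary_metrics(y_true, y_pred)
auc = roc_auc(y_true, scores)
```

Unlike the thresholded metrics, AUC is computed from the raw scores, which is why a model can have a high AUC and still show poor precision or recall at a badly chosen threshold.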

Research Reagent Solutions

Table 3: Essential Materials and Tools for Supervised Learning in Embryo Assessment

| Item Name | Function / Application | Specifications / Examples |

|---|---|---|

| Time-Lapse Incubator System | Provides the primary input data (videos) while maintaining stable embryo culture conditions. | Embryoscope or Embryoscope+ systems. [18] |

| Preimplantation Genetic Testing for Aneuploidy (PGT-A) | Provides the ground truth labels for the supervised learning task (Euploid/Aneuploid). | Essential for model training and validation. The gold standard for ploidy detection. [18] [23] |

| Computational Hardware (GPU) | Accelerates the training of deep learning models, which is computationally intensive. | High-performance GPUs (e.g., NVIDIA GeForce RTX 3090). [21] |

| Programming Frameworks & Libraries | Provides the software environment for implementing, training, and evaluating models. | Python with PyTorch or TensorFlow; scikit-learn for traditional ML. [21] |

| Data Visualization Libraries | Used for exploratory data analysis, model evaluation, and creating interpretability plots. | Matplotlib, Seaborn, Plotly for static and interactive plots. [25] [24] |

| Model Interpretability Toolkit | Explains model predictions to build clinical trust and validate biological plausibility. | SHAP (SHapley Additive exPlanations) library. [18] [21] |

Leveraging Convolutional Neural Networks (CNNs) for Image Analysis

Frequently Asked Questions & Troubleshooting Guides

This technical support center addresses common challenges researchers face when applying CNNs to high-dimensional biological data, with a special focus on fertility and biomedical research.

Model Architecture & Design

Q1: My CNN model for medical images has high accuracy on training data but poor performance on validation sets. What could be wrong?

This is a classic case of overfitting, where your model memorizes the training data instead of learning generalizable features. Several strategies can help:

- Implement Regularization Techniques: Add Dropout layers to randomly disable a fraction of neurons during training. A rate of 0.5 (50%) is common after fully connected layers, with lower rates typically used after convolutional layers. Also, consider L1 or L2 regularization to penalize large weights in the model [21].

- Use Data Augmentation: Artificially expand your training dataset by applying random (but realistic) transformations to your images, such as rotation, flipping, zooming, and changes in brightness or contrast.

- Simplify the Architecture: A model with too many parameters for the size of your dataset is prone to overfitting. Reduce the number of filters or fully-connected nodes.

- Add More Data: If possible, collect more labeled data. In medical domains, this can be challenging, making data augmentation and transfer learning even more critical.
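A minimal sketch of the data augmentation idea, using nested lists as stand-ins for grayscale images. It is restricted to flips and 90-degree rotations because those need no interpolation; small-angle rotations, zoom, and brightness changes would normally be done with an image library. All function names here are hypothetical.

```python
import random

def horizontal_flip(image):
    """Mirror each row of a 2D image."""
    return [row[::-1] for row in image]

def rotate_90(image):
    """Rotate a 2D image 90 degrees clockwise."""
    return [list(col) for col in zip(*image[::-1])]

def augment(image, rng):
    """Apply a random flip and a random number of quarter turns."""
    if rng.random() < 0.5:
        image = horizontal_flip(image)
    for _ in range(rng.randrange(4)):
        image = rotate_90(image)
    return image

frame = [[1, 2], [3, 4]]                    # toy 2x2 "image"
augmented = augment(frame, random.Random(0))
```

Augmentation should be applied to the training set only, so that validation and test images remain unaltered.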

Q2: How do I decide on the optimal CNN architecture (number of layers, filters) for my specific image dataset?

There is no one-size-fits-all architecture, but a systematic approach can guide you:

- Start with a Known Baseline: Begin with a well-established, simple architecture (e.g., a few convolutional and pooling layers) and then gradually modify it based on performance.

- Leverage Automated Search: Use search methods such as genetic algorithms to navigate the large hyperparameter space efficiently. These algorithms can automatically discover high-performing architectures by evolving a population of model designs over generations [26].

- Consider Transfer Learning: For many biomedical image tasks, using a pre-trained model (like VGG16 or ResNet) and fine-tuning it on your specific data is the most effective and efficient approach [27] [28].

Training & Optimization

Q3: The training process for my CNN is very slow. How can I speed it up?

Training speed is influenced by hardware and model design.

- Hardware Acceleration: Ensure you are using a CUDA-compatible GPU (e.g., NVIDIA GPUs). The parallel processing capabilities of GPUs are essential for practical CNN training [21] [26].

- Optimize Batch Size: Experiment with the batch size. Larger batch sizes can lead to faster training as they better utilize parallel computation, but very large batches can sometimes harm generalization.

- Implement Efficient Preprocessing: Use data loading pipelines that pre-fetch data while the model is training to avoid bottlenecks.

Q4: My model's loss is not decreasing during training. What steps should I take?

A stagnant loss indicates the model is not learning.

- Check Learning Rate: The most common culprit is an inappropriate learning rate. A rate that is too high will cause the loss to bounce around, while one that is too low will result in minimal progress. Common values are 0.01, 0.001, or 0.0001. Use a learning rate scheduler to reduce it gradually [26] [29].

- Verify Data and Labels: Ensure your input data is correctly normalized and that there are no errors in your training labels.

- Inspect Gradient Flow: Use tools to check if gradients are flowing backwards through the network. Vanishing gradients can prevent early layers from learning.
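One simple form of learning rate scheduler is step decay, sketched below. The drop factor and the interval are illustrative assumptions, not values from [26] or [29]; deep learning frameworks ship their own scheduler classes that would be used in practice.

```python
def step_decay(initial_lr, epoch, drop=0.1, epochs_per_drop=10):
    """Multiply the learning rate by `drop` once every `epochs_per_drop` epochs."""
    return initial_lr * (drop ** (epoch // epochs_per_drop))

# Learning rate at a few selected epochs, starting from 0.01.
schedule = [step_decay(0.01, epoch) for epoch in (0, 9, 10, 25)]
```

With these settings the rate stays at 0.01 for epochs 0 to 9, drops to about 0.001 at epoch 10, and to about 0.0001 from epoch 20 onward.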

Interpretation & Validation

Q5: How can I trust my CNN's prediction on a medical image? It feels like a "black box."

Model interpretability is critical for clinical adoption.

- Use Explainable AI (XAI) Techniques: For structured data, apply methods like SHAP (SHapley Additive exPlanations) to understand which features (e.g., patient age, BMI) contributed most to a prediction [21]. For image data, generate saliency maps or activation heatmaps that highlight the image regions driving the prediction [30] [28].

- Activation Maximization: This technique generates a synthetic image that maximally activates a specific neuron, helping you visualize what pattern a filter has learned to detect [28].

- Feature Map Visualization: Directly visualize the output of intermediate convolutional layers to see what low-level (edges, textures) and high-level (shapes, objects) features your model is extracting [28].

Q6: My model performs well on data from one clinic but fails on data from another. How can I improve generalizability?

This is a problem of domain shift, often due to differing data acquisition protocols.

- Standardize Preprocessing: Ensure consistent image normalization, scaling, and color processing across all data sources.

- Incorporate Diverse Data: Train your model on aggregated data from multiple sites and scanners to make it more robust.

- Use Federated Learning: This emerging technique allows you to train models across multiple decentralized data sources (e.g., different hospitals) without sharing the raw data, thus maintaining privacy while improving model generalizability [15].
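The source does not specify how a federated platform aggregates site-level models. One widely used rule is federated averaging, in which each site's parameters are weighted by its local sample count; the sketch below shows only that aggregation step, with hypothetical clinics and parameter values.

```python
def federated_average(client_weights, client_sizes):
    """Average per-client parameter vectors, weighted by local sample counts."""
    total = sum(client_sizes)
    n_params = len(client_weights[0])
    return [
        sum(w[i] * n for w, n in zip(client_weights, client_sizes)) / total
        for i in range(n_params)
    ]

clinic_a = [0.2, 1.0]   # hypothetical parameters trained on 100 local cycles
clinic_b = [0.6, 0.0]   # hypothetical parameters trained on 300 local cycles
global_weights = federated_average([clinic_a, clinic_b], [100, 300])
```

Only the parameter vectors leave each clinic in this scheme; the raw patient records stay local, which is the privacy property described above.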

Experimental Protocols & Performance Data

Protocol 1: Standard CNN Workflow for Image Classification

This protocol outlines the foundational steps for building a CNN-based image classifier, applicable to various biomedical image types.

- Data Preprocessing:

- Resizing: Standardize all input images to a fixed size (e.g., 224x224 pixels).

- Normalization: Scale pixel values to a standard range, typically [0, 1] or [-1, 1], to stabilize training.

- Data Augmentation (Training set only): Apply random transformations including rotation (±15°), horizontal flipping, width/height shift (±10%), and zoom (±5%).

- Model Construction:

- Convolutional Layers: Stack multiple Conv layers with small kernels (3x3). Use ReLU activation functions. The number of filters typically increases (e.g., 32, 64, 128) in deeper layers.

- Pooling Layers: Insert max-pooling layers (2x2) after one or more Conv layers to reduce spatial dimensions and control overfitting.

- Classification Head: Flatten the final feature map and connect to one or more Fully Connected (Dense) layers. The final layer uses a softmax activation for multi-class classification.

- Model Training:

- Loss Function: Use Categorical Cross-Entropy for multi-class problems.

- Optimizer: Use Adam optimizer with an initial learning rate of 0.001.

- Validation: Hold out 20-30% of the training data for validation to monitor for overfitting.

- Model Evaluation:

- Use a completely unseen test set to report final performance metrics: Accuracy, Precision, Recall, F1-Score, and AUC-ROC.
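To see how Protocol 1's choices determine the size of the classification head, the sketch below traces feature-map shapes through three blocks of one 3x3 convolution followed by 2x2 max pooling. It assumes stride 1 and padding 1 for the convolutions (so they preserve spatial size) and exactly one convolution per block; the protocol itself leaves these details open.

```python
def conv_out(size, kernel=3, stride=1, padding=1):
    """Spatial output size of a convolution along one dimension."""
    return (size + 2 * padding - kernel) // stride + 1

def pool_out(size, window=2):
    """Spatial output size of non-overlapping pooling along one dimension."""
    return size // window

def trace_shapes(input_size=224, filters=(32, 64, 128)):
    """Return (channels, height, width) after each conv + pool block."""
    size = input_size
    shapes = []
    for n_filters in filters:
        size = pool_out(conv_out(size))
        shapes.append((n_filters, size, size))
    return shapes

shapes = trace_shapes()
channels, height, width = shapes[-1]
flattened = channels * height * width   # input width of the first dense layer
```

Under these assumptions a 224x224 input ends as a 128x28x28 feature map, so the flattened vector has 100,352 entries, which is often the dominant source of parameters in a small CNN.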

Protocol 2: Handling High-Dimensional Structured Data with CNNs

CNNs can be adapted for non-image, high-dimensional data, such as structured electronic medical records (EMRs) for fertility outcomes prediction [21].

- Input Transformation:

- Structure the tabular data (e.g., patient features like age, BMI, hormone levels) into a 2D matrix.

- Reshape this matrix into a pseudo-image, for example, with a fixed input shape of (1, 6, 7) for 42 selected features [21].

- Custom CNN Architecture:

- Use convolutional layers to allow the model to capture local patterns and dependencies between different clinical features.

- A study on IVF outcomes used an architecture with two convolutional layers (16 and 32 filters, 3x3 kernel), each followed by ReLU and 2x2 max pooling, and a dropout layer (rate=0.5) to prevent overfitting [21].

- Interpretation:

- Apply model interpretation tools like SHAP to identify the most important clinical predictors and validate the model's decision-making process against clinical knowledge [21].
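The input transformation in Protocol 2 amounts to a reshape. A minimal sketch with nested lists follows; the row-major ordering of features in the grid is an assumption, since the source gives only the (1, 6, 7) shape and not how features are arranged.

```python
def to_pseudo_image(features, rows=6, cols=7):
    """Reshape a flat feature vector into a (1, rows, cols) nested list."""
    if len(features) != rows * cols:
        raise ValueError(f"expected {rows * cols} features, got {len(features)}")
    grid = [features[r * cols:(r + 1) * cols] for r in range(rows)]
    return [grid]   # leading channel dimension of size 1

features = [float(i) for i in range(42)]   # placeholder values for 42 features
image = to_pseudo_image(features)
```

Because a 3x3 kernel only sees neighbouring cells, the chosen feature ordering decides which clinical variables the convolution can relate locally, so it is worth treating the arrangement as a design decision.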

Performance Comparison of Models on a High-Dimensional Fertility Dataset

The table below summarizes a comparative analysis of different machine learning models applied to predict live birth outcomes from 48,514 IVF cycles, demonstrating the effectiveness of CNNs on structured medical data [21].

| Model | Accuracy | AUC | Precision | Recall | F1-Score |

|---|---|---|---|---|---|

| Convolutional Neural Network (CNN) | 0.9394 ± 0.0013 | 0.8899 ± 0.0032 | 0.9348 ± 0.0018 | 0.9993 ± 0.0012 | 0.9660 ± 0.0007 |

| Random Forest | 0.9406 ± 0.0017 | 0.9734 ± 0.0012 | 0.9350 ± 0.0021 | 0.9993 ± 0.0012 | 0.9662 ± 0.0009 |

| Decision Tree | 0.8631 ± 0.0049 | 0.8631 ± 0.0049 | 0.8631 ± 0.0049 | 0.9993 ± 0.0012 | 0.9265 ± 0.0032 |

| Naïve Bayes | 0.7143 ± 0.0063 | 0.8178 ± 0.0041 | 0.9993 ± 0.0012 | 0.7143 ± 0.0063 | 0.8332 ± 0.0050 |

| Feedforward Neural Network | 0.9394 ± 0.0013 | 0.9394 ± 0.0013 | 0.9394 ± 0.0013 | 0.9993 ± 0.0012 | 0.9686 ± 0.0007 |

Hyperparameter Search Space for Genetic Algorithm

For complex tasks, automating architecture design can be beneficial. The table below outlines a typical hyperparameter search space for a genetic algorithm optimizing a CNN [26].

| Hyperparameter | Possible Values |

|---|---|

| Number of Convolutional Layers | 1, 2, 3, 4, 5 |

| Filters per Layer | 16, 32, 64, 128, 256 |

| Kernel Sizes | 3, 5, 7 |

| Pooling Types | 'max', 'avg', 'none' |

| Learning Rate | 0.1, 0.01, 0.001, 0.0001 |

| Activation Functions | 'relu', 'elu', 'leaky_relu' |

| Dropout Rates | 0.0, 0.25, 0.5 |
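The search space in the table can be encoded as a dictionary, with the basic genetic operators defined over it. This is a sketch only: the key names, the uniform crossover, the mutation rate, and the use of a single filter count for all layers are simplifying assumptions rather than the setup of [26], and the fitness evaluation (training each candidate CNN) is omitted.

```python
import random

SEARCH_SPACE = {
    "n_conv_layers": [1, 2, 3, 4, 5],
    "filters": [16, 32, 64, 128, 256],
    "kernel_size": [3, 5, 7],
    "pooling": ["max", "avg", "none"],
    "learning_rate": [0.1, 0.01, 0.001, 0.0001],
    "activation": ["relu", "elu", "leaky_relu"],
    "dropout": [0.0, 0.25, 0.5],
}

def random_individual(rng):
    """Sample one candidate configuration uniformly from the search space."""
    return {name: rng.choice(options) for name, options in SEARCH_SPACE.items()}

def crossover(parent_a, parent_b, rng):
    """Uniform crossover: each gene is copied from one parent at random."""
    return {name: rng.choice([parent_a[name], parent_b[name]]) for name in SEARCH_SPACE}

def mutate(individual, rng, rate=0.2):
    """Resample each gene with probability `rate`."""
    return {
        name: rng.choice(SEARCH_SPACE[name]) if rng.random() < rate else value
        for name, value in individual.items()
    }

rng = random.Random(42)
parents = [random_individual(rng) for _ in range(2)]
child = mutate(crossover(parents[0], parents[1], rng), rng)
```

A full search would repeat selection, crossover, and mutation over several generations, scoring each candidate by its validation performance.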

The Scientist's Toolkit: Research Reagent Solutions

The table below lists key software and hardware tools essential for conducting CNN-based research in biomedical image analysis.

| Item Name | Function / Application |

|---|---|

| PyTorch / TensorFlow | Core deep learning frameworks used for building, training, and evaluating CNN models [21] [28]. |

| SHAP (SHapley Additive exPlanations) | A game theory-based library to explain the output of any machine learning model, crucial for interpreting CNN predictions on structured clinical data [21]. |

| scikit-learn | A fundamental library for data preprocessing, traditional machine learning model implementation, and model evaluation (e.g., calculating metrics) [21]. |

| NVIDIA GPU (e.g., RTX 3090) | Graphics processing unit essential for accelerating the massive parallel computations required for CNN training, significantly reducing experiment time [21] [26]. |

| Google Colab / Jupyter Notebook | Interactive computing environments that facilitate iterative development, visualization, and documentation of CNN experiments. |

Workflow Visualization

CNN Image Analysis Pipeline

Model Interpretation with XAI

Troubleshooting Guide & FAQs for Researchers

This technical support center is designed to assist scientists and drug development professionals in navigating the technical and analytical challenges associated with AI-driven embryo selection platforms, specifically the PGTai system, within the context of research on high-dimensional fertility data.

Frequently Asked Questions

Q1: Our validation study shows a lower euploidy rate increase than the 7.7% reported. What are potential causes for this discrepancy? A1: Discrepancies in euploidy rate validation can stem from several research variables:

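- Patient Cohort Demographics: The baseline euploidy rate is highly dependent on the age distribution of your patient population. The reported 7.7% figure is a cohort-wide average; in Table 1 it corresponds to the 7.7-percentage-point difference between subjective NGS (28.9%) and PGTai AI 1.0 (36.6%), so make sure you compare like with like (absolute vs. relative change) and validate your findings against age-stratified data from your own cohort [31] [32].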

- Control Group Methodology: Ensure your control group uses the appropriate subjective NGS platform (e.g., BlueFuse Multi Software with manual assessment) for a direct comparison [32].

- Biopsy and Wet-Lab Procedures: Variations in trophectoderm biopsy technique, sample amplification (e.g., SurePlex DNA Amplification System), and library preparation (e.g., VeriSeq or Nextera XT kits) can impact DNA quality and subsequent AI analysis [32].

Q2: How does the PGTai algorithm handle mosaicism, and why does its reporting decrease? A2: The PGTai platform uses a combination of machine learning models to improve signal clarity.

- Mechanism: The AI is trained on a massive dataset of embryos with known live birth outcomes. This allows its algorithms to better distinguish true mosaic aneuploidy from molecular noise or technical artifacts that might be misinterpreted by subjective NGS analysis [31] [32].

- Outcome: This enhanced specificity leads to a relative decrease in mosaic embryo reporting (21.2% in initial studies), as embryos with low-level anomalies that may still be viable are not classified as mosaic. This refines the pool of embryos considered suitable for transfer [31].

Q3: What are the minimum data requirements to leverage the PGTai platform for a multi-center research study? A3: The platform's strength is its use of large-scale, high-quality data.

- Sequencing Data: Data must be generated via Next-Generation Sequencing (NGS). The AI 2.0 system utilizes paired-end sequencing on a platform like Illumina's NextSeq, targeting 4 million raw reads for higher data coverage [32].

- Clinical Outcome Data: The algorithm was built and validated on data from over 1,000 embryo biopsies with known live birth or sustained pregnancy outcomes. For robust external validation, your study should aim for a similarly well-annotated dataset [31].

- Sample Tracking: Ensure meticulous sample tracking to link embryo biopsy genetic data with post-transfer clinical outcomes, including biochemical pregnancy, spontaneous abortion, and ongoing pregnancy/live birth rates [32].

Q4: We are encountering a high rate of "no signal" or amplification failure in biopsies. How can we optimize this process? A4: Amplification failure is often a pre-analytical issue.

- Biopsy Quality: Re-train on trophectoderm biopsy techniques to ensure an adequate number of cells are retrieved without causing embryo damage.

- Sample Handling and Lysis: Strictly adhere to the protocols of your DNA amplification system (e.g., SurePlex). Improper lysis or contamination can lead to amplification failure.

- Reagent Quality: Ensure all reagents are stored and handled correctly. Use high-quality, validated research-grade kits for DNA amplification and library preparation [32].

Quantitative Performance Data

The following tables summarize key quantitative findings from studies evaluating the PGTai platform against standard NGS.

Table 1: Embryo Ploidy Classification Rates (N=24,908 embryos) [32]

| Ploidy Classification | Subjective NGS | PGTai (AI 1.0) | PGTai 2.0 (AI 2.0) |

|---|---|---|---|

| Euploid Rate | 28.9% | 36.6% | 35.0% |

| Simple Mosaicism Rate | 14.0% | 11.3% | 10.1% |

| Aneuploid Rate | 57.0% | 52.1% | 54.8% |
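When benchmarking against Table 1, percentage-point differences and relative changes are easy to conflate. The short sketch below computes both for the euploid rates in the table; the function names are hypothetical.

```python
def absolute_change(baseline, new):
    """Difference between two rates, in percentage points."""
    return new - baseline

def relative_change(baseline, new):
    """Change expressed as a percentage of the baseline rate."""
    return 100.0 * (new - baseline) / baseline

# Euploid rates from Table 1: subjective NGS vs. PGTai (AI 1.0).
euploid_abs = absolute_change(28.9, 36.6)   # about 7.7 percentage points
euploid_rel = relative_change(28.9, 36.6)   # about 26.6 percent of baseline
```

Reporting which of the two measures is used, alongside the baseline rate, makes results from different cohorts directly comparable.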

Table 2: Single Thawed Euploid Embryo Transfer (STEET) Outcomes [32]

| Clinical Outcome | Subjective NGS | PGTai 2.0 (AI 2.0) |

|---|---|---|

| Ongoing Pregnancy/Live Birth Rate (OP/LBR) | 61.7% | 70.3% |

| Biochemical Pregnancy Rate (BPR) | 11.8% | 4.6% |

| Implantation Rate (IR) | 66.1% | 73.4% |

Experimental Protocol: Validating an AI-Based PGT-A Platform

This protocol outlines the key steps for a research study comparing AI-driven PGT-A analysis to traditional methods.

1. Patient Selection and Ovarian Stimulation

- Design: Recruit patients undergoing IVF with PGT-A. A retrospective cohort design is common.

- Stimulation: Perform controlled ovarian hyperstimulation using recombinant FSH or a combination of FSH with human menopausal gonadotropins (hMG). Suppress luteinizing hormone using a GnRH antagonist or agonist protocol [32].

- Trigger: Induce final oocyte maturation with hCG or a GnRH agonist/hCG combination when lead follicles reach 18–20 mm [32].

2. Embryo Culture, Biopsy, and Preparation for PGT-A

- Culture: Fertilize oocytes via IVF or ICSI. Culture embryos in a single-step culture medium to the blastocyst stage (days 5, 6, or 7) [32].

- Assisted Hatching & Biopsy: Perform assisted hatching on day 4. Conduct trophectoderm biopsy on days 5, 6, or 7 [32] [33].

- Sample Prep: Lyse biopsied cells and amplify DNA using a commercial system (e.g., SurePlex DNA Amplification System). Prepare sequencing libraries using a validated kit (e.g., VeriSeq PGS or Nextera XT) [32].

3. Genetic Analysis and AI Interpretation

- Sequencing: Sequence libraries on an appropriate platform (e.g., Illumina MiSeq for subjective NGS; Illumina NextSeq for AI 2.0) [32].

- Control Group Analysis: Analyze sequencing data from the control group using subjective software (e.g., BlueFuse Multi). Competent laboratory staff should manually assess copy number plots using standardized thresholds (e.g., 1.8–2.2 copies for euploid) [32].

- AI Group Analysis: For the AI group, process sequencing data through the proprietary PGTai algorithm stack. The platform uses machine learning models (e.g., linear regression, hidden Markov models, convolutional neural networks) trained on known positive and negative samples to automatically classify embryos [31] [32].
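The threshold rule used in the control arm can be written as a small check. The sketch below applies only the 1.8 to 2.2 euploid window quoted above to per-chromosome copy-number estimates for autosomes; the profile values are invented, and how values outside the window are split into gains, losses, or mosaic calls is laboratory-specific and deliberately left out.

```python
def classify_copy_number(copies, low=1.8, high=2.2):
    """Label one autosome's copy-number estimate against the euploid window."""
    if low <= copies <= high:
        return "euploid-range"
    return "outside-range"   # needs review; further calls are lab-specific

def flag_chromosomes(profile):
    """Return the chromosomes whose estimate falls outside the euploid window."""
    return {
        chrom: copies
        for chrom, copies in profile.items()
        if classify_copy_number(copies) != "euploid-range"
    }

# Hypothetical copy-number estimates for a few autosomes of one biopsy.
profile = {"chr1": 2.02, "chr16": 2.95, "chr21": 1.97, "chr22": 1.10}
flagged = flag_chromosomes(profile)
```

This is a sketch of the manual thresholding logic only; it is not a description of the proprietary PGTai algorithm.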

4. Embryo Transfer and Outcome Measurement

- Transfer: Perform Single Thawed Euploid Embryo Transfers (STEET) in a subsequent cycle.

- Primary Outcomes: Measure rates of euploidy, aneuploidy, and mosaicism [32].

- Secondary Outcomes: Track key pregnancy indices:

- Implantation Rate (IR): Number of gestational sacs observed on ultrasound per embryo transferred.

- Biochemical Pregnancy Rate (BPR): Positive hCG tests followed by pregnancy loss before a gestational sac is visible on ultrasound.

- Ongoing Pregnancy/Live Birth Rate (OP/LBR): Pregnancy progressing beyond 20 weeks or resulting in a live birth [32].
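Each secondary outcome reduces to an event count divided by a denominator. The sketch below assumes every rate is expressed per embryo transferred, which for single-embryo transfers equals per transfer; the source does not state the denominators, and the counts are invented for illustration.

```python
def rate(events, denominator):
    """Return a percentage rounded to one decimal place."""
    if denominator <= 0:
        raise ValueError("denominator must be positive")
    return round(100.0 * events / denominator, 1)

# Hypothetical counts for one study arm of single-embryo transfers.
counts = {
    "transfers": 200,
    "implantations": 140,
    "biochemical_pregnancies": 12,
    "ongoing_or_live_birth": 124,
}

outcomes = {
    "IR": rate(counts["implantations"], counts["transfers"]),
    "BPR": rate(counts["biochemical_pregnancies"], counts["transfers"]),
    "OP/LBR": rate(counts["ongoing_or_live_birth"], counts["transfers"]),
}
```

Stating the denominator explicitly in any report avoids ambiguity when comparing rates across studies.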

Experimental Workflow and AI Analysis

Research Reagent Solutions

Table 3: Essential Research Materials for PGT-A Studies [32]

| Research Reagent / Equipment | Function in Experiment |

|---|---|

| Recombinant FSH / hMG | For controlled ovarian hyperstimulation to develop multiple follicles. |

| GnRH Antagonist/Agonist | Used for luteinizing hormone suppression during stimulation. |

| Single-Step Embryo Culture Medium | Supports embryo development from fertilization to the blastocyst stage. |

| Assisted Hatching Laser | Creates an opening in the zona pellucida prior to trophectoderm biopsy. |

| Trophectoderm Biopsy Pipettes | For the physical removal of a few cells from the blastocyst. |

| SurePlex DNA Amplification System | Whole Genome Amplification (WGA) of the limited DNA from the biopsy. |

| VeriSeq PGS / Nextera XT Kit | Prepares sequencing libraries from amplified DNA for NGS. |

| Illumina MiSeq/NextSeq | Next-Generation Sequencing platforms to generate the raw genetic data. |

| BlueFuse Multi Software | Bioinformatic software for manual, subjective analysis of NGS data (control arm). |

| PGTai Algorithm Platform | Proprietary AI stack for automated, standardized embryo classification. |

Frequently Asked Questions (FAQs)

Q: What are the primary challenges when integrating different types of biological data, such as imaging and clinical records?

A: The main challenges are data complexity and interoperability [34]. Each data type (e.g., genomic sequencing, imaging, EHRs) has its own formats, ontologies, and standards, which makes harmonization technically demanding. Additional hurdles include the high computational demand of processing large datasets and regulatory concerns over patient data privacy under laws such as HIPAA and GDPR [34].

Q: My high-dimensional data visualization seems to scramble the global structure. What alternatives are there to t-SNE or PCA?

A: Methods like t-SNE often scramble global structure, while PCA can fail to capture nonlinear relationships [35]. Consider visualization methods designed specifically for high-dimensional biological data, such as PHATE (Potential of Heat-diffusion for Affinity-based Transition Embedding). PHATE is designed to preserve both local and global nonlinear structure and can provide a denoised representation of your data [35].

Q: How can I make the graphs and charts in my research more accessible to colleagues with color vision deficiencies?

A: Do not rely on color alone to convey information [36] [37]. Use multiple visual cues such as different node shapes, patterns, line styles, or markers [36] [37]. Always choose color palettes with sufficient contrast and test them with a color vision deficiency simulator. Providing multiple color schemes, including a colorblind-friendly mode, can make a significant difference [36].

Q: What is a multimodal large language model (MLLM) and how is it relevant to biomedical research?

A: A Multimodal Large Language Model (MLLM) is an advanced AI system that can process and integrate information across multiple modalities, such as text, images, audio, and genomic data, within a single architecture [38]. In biomedical research, this allows for the holistic analysis of heterogeneous data streams. For example, an MLLM can simultaneously analyze genetic sequences, clinical notes, and medical images to identify robust therapeutic targets or improve patient stratification for clinical trials [39] [38].

Troubleshooting Guides

Issue 1: Incompatible Data Formats and Failed Integration

Problem: Data from various sources (e.g., sequencing, EHRs, microscopy images) cannot be aligned for analysis.

Solution:

- Employ a Unified Data Platform: Use database software like TileDB [34] or frameworks like MultiAssayExperiment in R [34] designed to consolidate multimodal data types into a unified architecture.

- Systematic Preprocessing Protocol:

  - Normalization: Independently normalize each data modality to make them comparable.

  - Metadata Alignment: Ensure rich, consistent metadata (e.g., sample IDs, timestamps) links all data points across modalities.

  - Dimensionality Reduction: Apply methods like PHATE [35] to reduce noise and aid integration.
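The normalization and metadata-alignment steps above can be sketched in a few lines. This is a minimal illustration only, assuming each modality is held in memory as a dictionary keyed by sample ID; the helper names `zscore_by_feature` and `align_modalities` and the toy values are hypothetical and are not part of TileDB or MultiAssayExperiment.

```python
from statistics import mean, pstdev


def zscore_by_feature(modality):
    """Z-score each feature column of one modality independently.

    `modality` maps sample ID -> list of feature values (assumed layout).
    """
    sample_ids = list(modality)
    n_features = len(modality[sample_ids[0]])
    normalized = {sid: [] for sid in sample_ids}
    for j in range(n_features):
        column = [modality[sid][j] for sid in sample_ids]
        mu, sigma = mean(column), pstdev(column)
        for sid in sample_ids:
            # A constant feature has zero spread; map it to 0 rather than divide by 0.
            value = 0.0 if sigma == 0 else (modality[sid][j] - mu) / sigma
            normalized[sid].append(value)
    return normalized


def align_modalities(**modalities):
    """Keep only samples present in every modality and concatenate their features."""
    shared = set.intersection(*(set(m) for m in modalities.values()))
    return {
        sid: [value for m in modalities.values() for value in m[sid]]
        for sid in sorted(shared)
    }


# Toy data (illustrative values only): two EHR features and one imaging feature.
ehr = {"S1": [30.0, 2.0], "S2": [36.0, 1.0], "S3": [41.0, 0.5]}
imaging = {"S2": [25.1], "S3": [27.9], "S4": [24.0]}

integrated = align_modalities(
    ehr=zscore_by_feature(ehr),
    imaging=zscore_by_feature(imaging),
)
print(integrated)  # only S2 and S3 appear in both modalities
```

Normalizing each modality before the join means the scaling statistics use every sample available for that modality; normalizing after the join is an equally defensible choice when the shared cohort is the population of interest.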

Issue 2: Poor Visualization of High-Dimensional Data

Problem: Standard tools like PCA lose fine-grained local structure, while t-SNE distorts global data relationships.

Solution:

- Adopt the PHATE Algorithm: This method is specifically designed for visualizing high-dimensional data while preserving both local and global structure [35].

- PHATE Experimental Protocol [35]:

  1. Compute pairwise distances from your data matrix.

  2. Transform distances to affinities using a kernel (like the α-decay kernel) to encode local information accurately.

  3. Learn global relationships via a diffusion process, which denoises data.

  4. Encode relationships with potential distance, an information-theoretic metric that compares the global context of each data point.

  5. Embed the potential distances into 2 or 3 dimensions using metric Multidimensional Scaling (MDS) for visualization.
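To make the five steps concrete, the following is a simplified NumPy sketch of a PHATE-style pipeline. It is not the reference implementation from [35]: it uses dense matrices, swaps in classical MDS for the metric MDS named in the protocol, and the function name `phate_like_embedding` and the default values of `k`, `alpha`, and `t` are illustrative assumptions. For real analyses, the authors' published software is the better choice.

```python
import numpy as np


def pairwise_euclidean(A):
    """Dense pairwise Euclidean distances between the rows of A."""
    sq = np.sum(A ** 2, axis=1)
    d2 = sq[:, None] + sq[None, :] - 2.0 * (A @ A.T)
    return np.sqrt(np.maximum(d2, 0.0))  # clip tiny negative rounding errors


def phate_like_embedding(X, k=5, alpha=10.0, t=10, n_components=2, eps=1e-7):
    # Step 1: pairwise distances from the data matrix.
    D = pairwise_euclidean(X)

    # Step 2: alpha-decay kernel with an adaptive bandwidth per point.
    # Column 0 of each sorted row is the point itself, so column k is the
    # distance to the k-th nearest neighbor.
    bandwidth = np.sort(D, axis=1)[:, k]
    K = np.exp(-((D / bandwidth[:, None]) ** alpha))
    K = 0.5 * (K + K.T)  # symmetrize the affinities

    # Step 3: row-normalize to a Markov matrix and diffuse for t steps.
    P = K / K.sum(axis=1, keepdims=True)
    P_t = np.linalg.matrix_power(P, t)

    # Step 4: potential representation and potential distances.
    U = -np.log(P_t + eps)
    D_pot = pairwise_euclidean(U)

    # Step 5 (assumption): classical MDS via double centering, used here
    # as a simple stand-in for metric MDS.
    n = D_pot.shape[0]
    J = np.eye(n) - np.ones((n, n)) / n
    B = -0.5 * J @ (D_pot ** 2) @ J
    eigvals, eigvecs = np.linalg.eigh(B)
    top = np.argsort(eigvals)[::-1][:n_components]
    return eigvecs[:, top] * np.sqrt(np.maximum(eigvals[top], 0.0))


# Toy data: two synthetic groups of 30 samples with 10 features each.
rng = np.random.default_rng(0)
X = np.vstack([rng.normal(0.0, 1.0, (30, 10)), rng.normal(4.0, 1.0, (30, 10))])
embedding = phate_like_embedding(X)
print(embedding.shape)
```

The dense n-by-n matrices used here limit the sketch to a few thousand samples.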

Issue 3: Managing Computational Cost and Scalability

Problem: Analysis of large, integrated datasets is slow and exceeds available computational resources.

Solution:

- Leverage Scalable Algorithms: Use efficient versions of algorithms that incorporate landmark subsampling and sparse matrices. For instance, a scalable version of PHATE can process 1.3 million cells in approximately 2.5 hours [35].

- Utilize Cloud-Native and High-Performance Tools: Implement analysis with scalable, cloud-native databases [34] and open-source frameworks like Scanpy and Seurat for single-cell multimodal analysis [34].

Experimental Protocols for Multimodal Integration

Protocol 1: Multimodal Analysis for Embryo Viability Prediction

This protocol is adapted from a study using multimodal learning to predict embryo viability in clinical In-Vitro Fertilization (IVF) [40].

1. Objective: To combine Time-Lapse Video data and Electronic Health Records (EHRs) to automatically predict embryo viability, overcoming the subjectivity of manual embryologist assessment [40].

2. Key Reagent Solutions:

| Research Reagent | Function in the Experiment |
|---|---|
| Time-Lapse Microscopy | Captures continuous imaging data of embryo development, providing dynamic morphological information [40]. |
| Electronic Health Records (EHRs) | Contains static clinical and patient information to provide context alongside imaging data [40]. |
| Multimodal Machine Learning Model | A custom model architecture designed to effectively combine and learn from the inherent differences in video and EHR data modalities [40]. |

3. Workflow Diagram:

Protocol 2: A Multimodal Data Analysis Approach for Targeted Drug Discovery

This protocol outlines a method for hit identification and lead generation in drug discovery by combining multiple computational techniques [41].

1. Objective: To leverage the benefits of virtual high-throughput screening (vHTS), high-throughput screening (HTS), and structural fingerprint analysis by integrating them using Topological Data Analysis (TDA) to identify structurally diverse drug leads [41].

2. Key Reagent Solutions:

| Research Reagent | Function in the Experiment |
|---|---|
| Compound Library | A diverse collection of chemical compounds screened for potential drug activity [41]. |
| Virtual High-Throughput Screening (vHTS) | A computational technique to predict compound activity against a target [41]. |
| High-Throughput Screening (HTS) | An experimental method to rapidly test thousands of compounds for biological activity [41]. |
| Structural Fingerprint Analysis | A computational method to encode a molecule's structure for similarity comparison [41]. |
| Topological Data Analysis (TDA) | A mathematical approach that transforms complex, high-dimensional data from multiple screens into a topological network to identify clusters of active compounds [41]. |

3. Workflow Diagram:

Essential Computational Tools for Multimodal Data

The following table summarizes key software tools for analyzing multimodal biological data.

| Tool Name | Primary Function | Application Context |
|---|---|---|
| PHATE [35] | Dimensionality reduction and visualization | Preserving local/global structure in high-dimensional data (e.g., single-cell RNA-sequencing, mass cytometry). |
| TileDB [34] | Data management and storage | Unifying multimodal data types (omics, imaging) in a cloud-native, scalable database. |
| Scanpy [34] | Single-cell data analysis | Analyzing and integrating single-cell multimodal data, such as RNA and protein expression. |
| Seurat [34] | Single-cell data analysis | A comprehensive R toolkit for the analysis and integration of single-cell multimodal datasets. |
| MOFA+ [34] | Multi-Omics Factor Analysis | Integrating data across multiple omics layers (e.g., genomics, proteomics, metabolomics). |

Frequently Asked Questions (FAQs)

Q1: What are the most common applications of AI in the IVF laboratory today?

AI is primarily applied to embryo selection, using images and time-lapse data to predict viability, with a pooled sensitivity of 0.69 and specificity of 0.62 for implantation success [11]. Other key applications include sperm selection, embryo annotation, and workflow optimization. Adoption is growing, with over half of surveyed fertility specialists reporting regular or occasional AI use in 2025, up from about a quarter in 2022 [9].
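For readers who want to compute the same metrics on their own validation data, the sketch below shows how sensitivity and specificity follow from per-embryo predictions. The function name and the toy labels are hypothetical; the pooled figures quoted from [11] come from a meta-analysis and are not reproduced by this example.

```python
def sensitivity_specificity(y_true, y_pred):
    """Return (sensitivity, specificity) for binary labels, 1 = implanted."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    sensitivity = tp / (tp + fn)  # share of implanting embryos the model flagged
    specificity = tn / (tn + fp)  # share of non-implanting embryos it rejected
    return sensitivity, specificity


# Toy example: 4 embryos that implanted and 6 that did not (illustrative only).
y_true = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
y_pred = [1, 1, 1, 0, 0, 0, 0, 0, 1, 1]

sens, spec = sensitivity_specificity(y_true, y_pred)
print(f"sensitivity={sens:.2f}, specificity={spec:.2f}")
```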

Q2: Our AI model performs well on internal data but generalizes poorly to external datasets. What strategies can we employ?

Poor generalization is a common challenge, often stemming from limited or non-diverse training data. To address this:

- Utilize Federated Learning: This approach allows multiple clinics to collaboratively train models without sharing sensitive patient data, increasing the diversity and volume of data the model learns from and improving its robustness [15].

- Implement Rigorous Validation: Ensure your model undergoes validation with larger, diverse, and multi-center datasets that are separate from the training data [15] [11].

- Standardize Input Data: Work towards standardizing imaging protocols and data formats across different sources to minimize technical variability that can impair model performance [15].
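The federated learning strategy above can be illustrated with a toy federated averaging loop, in which each clinic trains locally and only model parameters leave the site. This is a sketch under simplifying assumptions (a two-parameter linear model, plain gradient descent, synthetic data); the function names are hypothetical, and a production system would add secure aggregation and a real model architecture.

```python
def local_update(weights, data, lr=0.1, epochs=5):
    """One clinic's local training: gradient descent on mean squared error."""
    w = list(weights)
    for _ in range(epochs):
        grad = [0.0] * len(w)
        for x, y in data:
            err = sum(wi * xi for wi, xi in zip(w, x)) - y
            for j, xj in enumerate(x):
                grad[j] += 2.0 * err * xj / len(data)
        w = [wi - lr * g for wi, g in zip(w, grad)]
    return w


def federated_average(client_weights, client_sizes):
    """Average client parameters, weighted by each client's sample count."""
    total = sum(client_sizes)
    n_params = len(client_weights[0])
    return [
        sum(w[j] * n for w, n in zip(client_weights, client_sizes)) / total
        for j in range(n_params)
    ]


# Synthetic data held at two clinics; both follow y = 2*x1 - 1*x2.
clinic_a = [([1.0, 0.0], 2.0), ([0.0, 1.0], -1.0), ([1.0, 1.0], 1.0)]
clinic_b = [([2.0, 1.0], 3.0), ([1.0, 2.0], 0.0)]
clinics = [clinic_a, clinic_b]

global_w = [0.0, 0.0]
for _ in range(50):
    # Raw records never leave a clinic; only updated weights are shared.
    updates = [local_update(global_w, data) for data in clinics]
    global_w = federated_average(updates, [len(data) for data in clinics])

print(global_w)  # approaches [2.0, -1.0]
```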

Q3: What are the key barriers to clinical adoption of AI tools in IVF, and how can they be overcome?

The main barriers identified in a 2025 global survey are cost (38.01%) and a lack of training (33.92%) [9]. Ethical concerns and over-reliance on technology are also significant perceived risks. Overcoming these barriers requires:

- Demonstrating clear value through improved outcomes and workflow efficiency, such as tools that can reduce documentation time by up to 40% [42].

- Developing comprehensive training programs for embryologists and clinicians.

- Creating transparent and explainable AI systems that build trust rather than acting as "black boxes" [15].

Q4: How can we effectively integrate AI tools into existing Electronic Medical Record (EMR) systems?

Many AI tools currently operate as standalone platforms, creating workflow inefficiencies. For effective integration:

- Prioritize systems designed for embedded integration over those with manual data entry interfaces [42].

- Seek AI platforms that offer Application Programming Interfaces (APIs) to facilitate a seamless data exchange with your laboratory's EMR, ensuring that AI outputs directly populate patient records.

Troubleshooting Common AI Implementation Challenges

Issue: Inconsistent AI Performance Across Different Patient Subgroups

| Potential Cause | Diagnostic Check | Recommended Solution |
|---|---|---|
| Inherent Bias in Training Data | Audit the demographic and clinical characteristics of your training dataset. | Augment training data with underrepresented subgroups or employ algorithmic fairness techniques to mitigate bias. |
| Unaccounted Clinical Variables | Analyze if model performance drops for patients with specific prognoses (e.g., advanced maternal age). | Develop subgroup-specific models or integrate multi-modal data (e.g., clinical history, omics) to provide a more holistic assessment [15]. |
| Poor Quality or Non-Standard Input Images | Review the quality and consistency of images being fed into the AI system. | Implement and enforce standardized imaging protocols (e.g., focus, lighting) across all operators and equipment in the lab. |
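The diagnostic checks in this table can start from a simple per-subgroup performance audit. The sketch below is one possible approach, assuming predictions are available as (subgroup, true label, predicted label) tuples; the age bands, the 0.2 gap threshold, and the function names are illustrative assumptions rather than recommended clinical cut-offs.

```python
from collections import defaultdict


def accuracy_by_subgroup(records):
    """Compute accuracy per subgroup from (subgroup, y_true, y_pred) tuples."""
    hits = defaultdict(int)
    totals = defaultdict(int)
    for group, y_true, y_pred in records:
        totals[group] += 1
        hits[group] += int(y_true == y_pred)
    return {group: hits[group] / totals[group] for group in totals}


def flag_underperforming(records, max_gap=0.2):
    """Return subgroups whose accuracy is more than `max_gap` below overall accuracy."""
    overall = sum(int(t == p) for _, t, p in records) / len(records)
    per_group = accuracy_by_subgroup(records)
    return sorted(g for g, acc in per_group.items() if acc < overall - max_gap)


# Toy predictions grouped by maternal age band (illustrative only).
records = [
    ("<35", 1, 1), ("<35", 0, 0), ("<35", 1, 1), ("<35", 0, 1),
    ("35-39", 1, 1), ("35-39", 0, 0), ("35-39", 1, 0), ("35-39", 0, 0),
    (">=40", 1, 0), (">=40", 0, 1), (">=40", 0, 0), (">=40", 1, 0),
]

per_group = accuracy_by_subgroup(records)
flagged = flag_underperforming(records)
print(per_group, flagged)
```

In practice, small subgroups produce noisy estimates, so confidence intervals or a minimum sample size per subgroup would be sensible additions before acting on a flag.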

Issue: Resistance to AI Adoption among Clinical Staff

| Observed Behavior | Underlying Concern | Mitigation Strategy |
|---|---|---|
| Ignoring AI Recommendations | Lack of trust in the "black box" decision-making process. | Choose AI systems with explainability features (e.g., heatmaps, feature importance scores) to help clinicians understand the rationale behind predictions [10]. |
| Complaints of Increased Workload | Poor integration creates duplicate data entry tasks. | Integrate AI tools directly into the EMR and workflow to automate tasks, demonstrating time savings [42]. |
| Reluctance to Change Established Practices | Perception that traditional methods are sufficient or that AI is too complex. | Provide hands-on training and share evidence from validated studies showing improved outcomes, such as AI models that outperform traditional morphological assessments [9]. |

Experimental Protocols for AI Model Validation

Protocol: Validating an Embryo Selection Model for Clinical Use

This protocol outlines key steps for establishing the diagnostic accuracy and clinical utility of an AI model for embryo selection.

1. Define the Objective and Outcome: Clearly state the model's purpose (e.g., "to rank blastocysts based on their probability of leading to a clinical pregnancy") and the primary outcome measure (e.g., clinical pregnancy confirmed by ultrasound).

2. Dataset Curation and Partitioning

- Data Collection: Assemble a diverse dataset comprising embryo images (e.g., time-lapse videos), corresponding clinical data, and confirmed clinical outcomes.

- Data Partitioning: Randomly split the dataset into three distinct subsets:

  - Training Set (70%): Used to train the initial model.

  - Validation Set (15%): Used for hyperparameter tuning and model selection during development.

  - Test Set (15%): Used only once for the final evaluation of the model's performance. This set must be completely held out from the training process to provide an unbiased estimate of real-world performance.
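A 70/15/15 partition along these lines might be implemented as follows. The sketch adds one assumption that the protocol does not state: the split is made at the patient level, so that embryos from the same patient never appear in both the training and test sets. The record layout and the name `split_by_patient` are hypothetical.

```python
import random


def split_by_patient(embryo_records, fractions=(0.70, 0.15, 0.15), seed=42):
    """Assign whole patients to train/val/test so no patient spans two sets."""
    patients = sorted({r["patient_id"] for r in embryo_records})
    rng = random.Random(seed)  # fixed seed keeps the partition reproducible
    rng.shuffle(patients)

    n_train = round(fractions[0] * len(patients))
    n_val = round(fractions[1] * len(patients))
    assignment = {}
    for i, pid in enumerate(patients):
        if i < n_train:
            assignment[pid] = "train"
        elif i < n_train + n_val:
            assignment[pid] = "val"
        else:
            assignment[pid] = "test"  # remaining patients form the held-out test set

    splits = {"train": [], "val": [], "test": []}
    for record in embryo_records:
        splits[assignment[record["patient_id"]]].append(record)
    return splits


# Toy cohort: 20 patients with 3 embryos each (illustrative only).
records = [
    {"patient_id": f"P{p:02d}", "embryo_id": f"P{p:02d}-E{e}"}
    for p in range(20)
    for e in range(3)
]

splits = split_by_patient(records)
print({name: len(rows) for name, rows in splits.items()})
```

Because the fractions apply to patients rather than embryos, the embryo-level proportions are only approximately 70/15/15 when patients contribute different numbers of embryos.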