Proximity Search Mechanisms: A New Paradigm for Interpretable Clinical AI and Drug Discovery

Abstract

This article explores the transformative potential of proximity-based mechanisms in enhancing the interpretability and trustworthiness of artificial intelligence for clinical decision-making and drug development. It provides a comprehensive examination of the foundational principles, drawing parallels from biologically inspired induced proximity in therapeutics. The scope covers methodological applications, including uncertainty-aware evidence retrieval and explainable AI (XAI) frameworks, that leverage proximity to create transparent, auditable models. The article further addresses critical troubleshooting and optimization strategies to overcome implementation challenges, and concludes with rigorous validation and comparative analysis frameworks essential for clinical adoption. Aimed at researchers, scientists, and drug development professionals, this work synthesizes cutting-edge research to outline a roadmap for building reliable, interpretable, and clinically actionable AI systems.

The Foundations of Proximity: From Biological Principles to Computational Interpretability

The concept of induced proximity represents a paradigm shift across multiple scientific disciplines, from fundamental molecular biology to advanced computational clinical research. In molecular biology, it describes the deliberate bringing together of cellular components to trigger specific biological outcomes [1]. In computational research, proximity searching provides a methodological framework for finding conceptually related terms within a body of text, enhancing data interpretability [2]. This article explores this unifying principle through application notes and detailed experimental protocols, framing them within the context of clinical interpretability research. By examining proximity-based mechanisms across these domains, researchers can identify transferable strategies for enhancing the precision, efficacy, and explainability of both therapeutic interventions and clinical risk prediction models.

Molecular Proximity: Mechanisms and Applications

Fundamental Mechanisms of Induced Proximity

Molecular proximity technologies function as "matchmakers" within the cellular environment, creating transient but productive interactions between disease-causing proteins and cellular machinery that can neutralize them [1] [3]. These systems typically consist of a heterobifunctional design where one domain binds to a target protein, another domain recruits an effector protein, and a linker connects these domains to facilitate new protein-protein interactions [1]. The matchmaker component subsequently dissociates, allowing for catalytic reuse and enabling a single molecule to eliminate multiple target proteins sequentially [1] [3].

The table below summarizes the primary classes of molecular proximity inducers and their mechanisms of action:

Table 1: Classes of Molecular Proximity Inducers and Their Mechanisms

| Class | Mechanism of Action | Cellular Location | Key Components | Outcome |

|---|---|---|---|---|

| PROTACs (Proteolysis Targeting Chimeras) [1] | Recruit E3 ubiquitin ligase to target protein | Intracellular | Target binder, E3 ligase recruiter, linker | Ubiquitination and proteasomal degradation |

| BiTE Molecules (Bispecific T-cell Engagers) [1] | Connect tumor cells with T cells | Extracellular, cell surface | CD3 binder, tumor antigen binder | T-cell mediated cytotoxicity |

| Molecular Glues (e.g., LOCKTAC) [1] | Stabilize existing protein interactions | Intracellular/Extracellular | Monovalent small molecule | Target stabilization or inhibition |

| LYTACs (Lysosome Targeting Chimeras) [1] | Link extracellular proteins to lysosomal receptors | Extracellular, cell surface | Target binder, lysosomal receptor binder | Lysosomal degradation |

| RNATACs (RNA-Targeting Chimeras) [1] | Target faulty RNA for degradation | Intracellular | RNA binder, nuclease recruiter | RNA degradation and reduced protein translation |

Experimental Protocol: DNA-Encoded Library Screening for Proximity Inducers

Purpose: To identify novel proximity-inducing molecules from vast chemical libraries using DNA-encoded library (DEL) technology [1].

Materials and Reagents:

- DNA-encoded chemical library (contains billions of unique small molecules tagged with DNA barcodes)

- Target protein of interest (purified, with known involvement in disease pathology)

- Effector protein (appropriate for desired outcome, e.g., E3 ubiquitin ligase for degradation)

- Solid support with immobilized binding partner

- PCR reagents for amplification of recovered DNA barcodes

- Next-generation sequencing platform for barcode identification

- Buffer systems (appropriate for maintaining protein stability and interactions)

Procedure:

- Library Preparation: Dilute the DNA-encoded library in appropriate binding buffer to ensure optimal diversity representation [1].

- Incubation with Target: Combine the library with immobilized target protein and incubate for 2-4 hours at 4°C with gentle agitation to facilitate binding.

- Wash Steps: Perform sequential wash steps (5-10 cycles) with buffer containing mild detergents to remove non-specifically bound molecules.

- Elution of Binders: Release specifically bound molecules using mild denaturing conditions (e.g., low pH or high salt) that preserve DNA barcode integrity.

- PCR Amplification: Amplify recovered DNA barcodes using primers compatible with subsequent sequencing platforms.

- Next-Generation Sequencing: Sequence the amplified DNA barcodes to identify molecules that bound to the target protein.

- Hit Validation: Resynthesize identified hit compounds without DNA tags and validate their binding and functional activity in secondary assays.

Troubleshooting Notes:

- Low library diversity can lead to limited hit identification; ensure proper library storage and handling.

- High background noise may require optimization of wash stringency.

- False positives may occur due to promiscuous binders; include appropriate counter-screens.
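The sequencing and counter-screen steps above can be illustrated with a minimal sketch that ranks barcodes by how much more often they are read in the target selection than in a no-target control. The function name `barcode_enrichment`, the pseudocount, and the toy barcodes are assumptions made for illustration; they are not part of the cited protocol, and production DEL analysis pipelines typically apply more careful statistics.

```python
from collections import Counter


def barcode_enrichment(selected_reads, control_reads, pseudocount=1.0):
    """Rank barcodes by read frequency in the target selection relative
    to a no-target control selection (hypothetical scoring scheme)."""
    selected = Counter(selected_reads)
    control = Counter(control_reads)
    barcodes = set(selected) | set(control)
    n_selected = sum(selected.values())
    n_control = sum(control.values())
    k = len(barcodes)
    scores = {}
    for barcode in barcodes:
        # Pseudocounts keep the ratio finite for barcodes missing from one pool.
        f_sel = (selected[barcode] + pseudocount) / (n_selected + pseudocount * k)
        f_ctl = (control[barcode] + pseudocount) / (n_control + pseudocount * k)
        scores[barcode] = f_sel / f_ctl
    # Highest enrichment first.
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)


# Toy reads with made-up barcodes, not real screening data.
selected_reads = ["ACGT"] * 40 + ["TTGA"] * 5 + ["GGCC"] * 5
control_reads = ["ACGT"] * 5 + ["TTGA"] * 5 + ["GGCC"] * 40
ranking = barcode_enrichment(selected_reads, control_reads)
for barcode, score in ranking:
    print(barcode, round(score, 2))
```

Barcodes that are equally abundant in both pools score close to 1 and are candidates for promiscuous or matrix binders, which is why a control selection is worth sequencing alongside the target selection.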

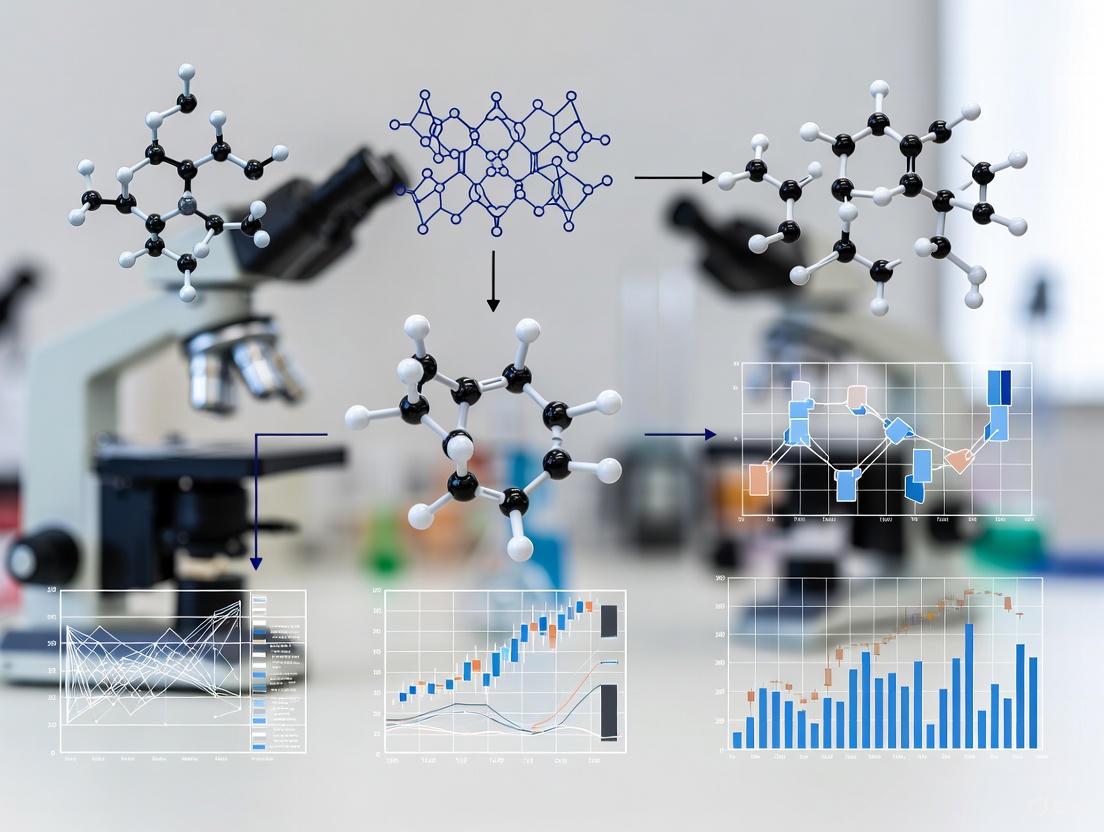

Diagram 1: DEL Screening Workflow for identifying proximity-inducing molecules.

Computational Proximity: Enhancing Clinical Interpretability

Proximity Search Mechanisms for Clinical Data Mining

In computational research, proximity searching enables researchers to locate conceptually related terms that appear near each other in text, regardless of the exact phrasing [2]. This methodology is particularly valuable for clinical interpretability research, where understanding relationships between clinical concepts, symptoms, and outcomes is essential. Different database systems implement proximity operators with varying syntax but consistent underlying principles.

The table below compares proximity search operators across different research database platforms:

Table 2: Proximity Search Operators Across Research Platforms

| Database Platform | Near Operator (Unordered) | Within Operator (Ordered) | Maximum Word Separation |

|---|---|---|---|

| EBSCO Databases [2] | N5 (finds terms within 5 words, any order) | W5 (finds terms within 5 words, specified order) | Varies (typically 10-255 words) |

| ProQuest [2] | N/5 or NEAR/5 | W/5 | Varies by implementation |

| Web of Science [2] | NEAR/5 (must spell out NEAR) | Not typically available | Varies by implementation |

| Google [2] | AROUND(5) | Not available | Limited contextual proximity |
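To make the difference between the unordered and ordered operators concrete, the following sketch reimplements both over a tokenized string. It is an illustration only: the functions `near` and `within` are hypothetical names, and the convention that "within n words" means token positions differ by at most n is an assumption, since each platform counts separation in its own way.

```python
import re


def tokenize(text):
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def positions(tokens, term):
    """Return every index at which the term occurs."""
    term = term.lower()
    return [i for i, token in enumerate(tokens) if token == term]


def near(text, term_a, term_b, n):
    """Unordered proximity (N-style): terms at most n positions apart, any order."""
    tokens = tokenize(text)
    return any(
        0 < abs(i - j) <= n
        for i in positions(tokens, term_a)
        for j in positions(tokens, term_b)
    )


def within(text, first, second, n):
    """Ordered proximity (W-style): `second` follows `first` by at most n positions."""
    tokens = tokenize(text)
    return any(
        0 < j - i <= n
        for i in positions(tokens, first)
        for j in positions(tokens, second)
    )


sentence = "Elevated troponin was associated with higher mortality in acute coronary syndrome"
print(near(sentence, "mortality", "troponin", 5))    # unordered match
print(within(sentence, "mortality", "troponin", 5))  # ordered: wrong direction
```

The same sentence satisfies the unordered query in either term order but satisfies the ordered query only when the terms are given in their textual order, which is the practical distinction between the N and W columns of the table.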

Experimental Protocol: Proximity-Enhanced Clinical Risk Prediction

Purpose: To develop an interpretable clinical risk prediction model using proximity-based rule mining for acute coronary syndrome (ACS) mortality prediction [4].

Materials and Software:

- Clinical dataset (de-identified patient records including demographics, clinical measurements, and outcomes)

- Data preprocessing tools (for handling missing values and normalization)

- Rule mining algorithm (for creating dichotomized risk factors)

- Machine learning classifier (e.g., logistic regression, neural networks)

- Model evaluation framework (discrimination and calibration metrics)

- Statistical software (R, Python with appropriate libraries)

Procedure:

- Data Preparation:

  - Collect and de-identify patient data including clinical parameters, lab values, and 30-day mortality outcomes [4].

  - Handle missing data using appropriate imputation methods.

  - Split data into training (70%), validation (15%), and test (15%) sets.

- Rule Generation through Conceptual Proximity:

  - Identify key clinical concepts related to ACS mortality through literature review.

  - Create dichotomized rules by applying thresholds to continuous variables (e.g., "age > 65", "systolic BP < 100") [4].

  - Use proximity searching in clinical literature databases to validate and expand rule concepts.

- Model Training:

  - Train a machine learning classifier to predict the "acceptance degree" (probability of correctness) for each rule for individual patients [4].

  - Combine rule acceptance degrees to compute personalized mortality risk scores.

  - Incorporate reliability estimates for each prediction.

- Model Evaluation:

  - Assess discrimination using Area Under the ROC Curve (AUC).

  - Evaluate calibration using calibration curves and metrics.

  - Compare performance against established clinical risk scores (e.g., GRACE score) [4].
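The rule-generation and combination steps can be sketched as follows. The two rules mirror the examples given in the procedure; everything else is an assumption made for illustration: the `Rule` class, the feature names, the acceptance degrees (which in the protocol would come from a trained classifier), and in particular the unweighted mean used to combine them, which is a placeholder rather than the combination scheme of the cited work.

```python
import operator
from dataclasses import dataclass
from typing import Callable, Dict


@dataclass(frozen=True)
class Rule:
    label: str                                  # human-readable rule text
    feature: str                                # key in the patient record
    compare: Callable[[float, float], bool]     # e.g. operator.gt
    threshold: float

    def fires(self, patient: Dict[str, float]) -> bool:
        """Dichotomize a continuous variable against the threshold."""
        return self.compare(patient[self.feature], self.threshold)


RULES = [
    Rule("age > 65", "age", operator.gt, 65),
    Rule("systolic BP < 100", "systolic_bp", operator.lt, 100),
]


def risk_score(patient, acceptance, rules=RULES):
    """Combine per-rule acceptance degrees into one score.

    Placeholder combination: a rule contributes its acceptance degree
    if it fires and 0 otherwise; the score is the mean over all rules.
    """
    contributions = [
        (rule.label, acceptance[rule.label] if rule.fires(patient) else 0.0)
        for rule in rules
    ]
    score = sum(value for _, value in contributions) / len(rules)
    return score, contributions


# Hypothetical acceptance degrees standing in for classifier output.
acceptance = {"age > 65": 0.8, "systolic BP < 100": 0.6}
high_risk_patient = {"age": 72, "systolic_bp": 95}
score, contributions = risk_score(high_risk_patient, acceptance)
print(score, contributions)
```

Returning the per-rule contributions alongside the score is what keeps the prediction auditable: a clinician can see which rules fired and how much each one added.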

Validation Metrics:

- Area Under ROC Curve (AUC): Target >0.80 for good discrimination [4].

- Geometric Mean (GM): Combined measure of sensitivity and specificity.

- Positive Predictive Value (PPV) and Negative Predictive Value (NPV): Clinical relevance of predictions.

- Calibration Slope: Target close to 1.0 (e.g., 0.96); a slope of 1.0 indicates ideal calibration [4].
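As a rough guide to how the discrimination metrics above are computed, the sketch below implements AUC, geometric mean, PPV, and NPV in plain Python. The function names, the 0.5 decision threshold, and the toy labels and scores are assumptions for illustration; the calibration slope is not covered because it requires fitting a logistic recalibration model.

```python
import math


def auc(y_true, y_score):
    """AUC as the probability that a random positive outranks a random
    negative (ties count as half), computed over all pairs."""
    pos = [s for y, s in zip(y_true, y_score) if y == 1]
    neg = [s for y, s in zip(y_true, y_score) if y == 0]
    if not pos or not neg:
        raise ValueError("AUC needs at least one positive and one negative case")
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def _ratio(numerator, denominator):
    return numerator / denominator if denominator else float("nan")


def threshold_metrics(y_true, y_score, threshold=0.5):
    """Sensitivity, specificity, geometric mean, PPV and NPV at one threshold."""
    tp = fp = tn = fn = 0
    for y, s in zip(y_true, y_score):
        predicted = 1 if s >= threshold else 0
        if predicted == 1 and y == 1:
            tp += 1
        elif predicted == 1 and y == 0:
            fp += 1
        elif predicted == 0 and y == 0:
            tn += 1
        else:
            fn += 1
    sensitivity = _ratio(tp, tp + fn)
    specificity = _ratio(tn, tn + fp)
    return {
        "sensitivity": sensitivity,
        "specificity": specificity,
        "geometric_mean": math.sqrt(sensitivity * specificity),
        "ppv": _ratio(tp, tp + fp),
        "npv": _ratio(tn, tn + fn),
    }


# Toy outcomes (1 = died within 30 days) and predicted risks, not real data.
y_true = [1, 1, 1, 0, 0, 0, 0, 0]
y_score = [0.9, 0.7, 0.4, 0.6, 0.3, 0.2, 0.2, 0.1]
print(auc(y_true, y_score))
print(threshold_metrics(y_true, y_score))
```

For a real study, an established implementation such as scikit-learn's `roc_auc_score` is preferable to the quadratic pairwise loop used here.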

Diagram 2: Clinical Risk Prediction Workflow using proximity-based rules.

Integrated Application: Bridging Molecular and Computational Proximity

The table below details key research reagents and computational resources essential for proximity-based research across biological and computational domains:

Table 3: Research Reagent Solutions for Proximity Studies

| Category | Item | Specifications | Application/Function |

|---|---|---|---|

| Molecular Biology | DNA-encoded Libraries [1] | Billions of unique small molecules with DNA barcodes | High-throughput screening for proximity inducers |

| Molecular Biology | E3 Ubiquitin Ligase Recruiters [1] | CRBN, VHL, or IAP-based ligands | Targeted protein degradation via PROTACs |

| Molecular Biology | Bispecific Scaffolds [1] | Anti-CD3 x anti-tumor antigen formats | T-cell engagement via BiTE technology |

| Cell-Based Assays | Reporter Cell Lines | Engineered with pathway-specific response elements | Functional validation of proximity inducers |

| Cell-Based Assays | Primary Immune Cells | T-cells, macrophages from human donors | Ex vivo efficacy testing of immunomodulators |

| Computational Resources | Research Databases [2] | EBSCO, ProQuest, Web of Science | Proximity searching for literature mining |

| Computational Resources | Clinical Data Repositories | De-identified patient records with outcomes | Training and validation of risk prediction models |

| Computational Resources | Machine Learning Frameworks | Python/R with scikit-learn, TensorFlow | Implementation of interpretable AI models |

Advanced Protocol: Integrating Molecular and Computational Proximity for Target Validation

Purpose: To create an integrated workflow combining computational proximity searching with molecular proximity technologies for novel target validation.

Procedure:

- Target Identification via Literature Proximity Mining:

  - Use proximity search operators (e.g., "disease N5 pathway N5 mechanism") to identify novel disease mechanisms [2].

  - Apply natural language processing to extract protein-protein interaction networks from scientific literature.

  - Prioritize targets based on network connectivity and druggability predictions.

- Molecular Proximity Probe Design:

  - Design PROTAC molecules or other proximity inducers for prioritized targets using structural informatics.

  - Synthesize and validate target binding and degradation efficacy in cellular models.

- Clinical Correlate Analysis:

  - Apply proximity-based clinical rule mining to electronic health records.

  - Identify patient subgroups most likely to respond to target modulation based on clinical characteristics.

- Iterative Refinement:

  - Use clinical insights to refine molecular design.

  - Apply molecular insights to improve clinical risk stratification.

Validation Metrics:

- Computational: Precision and recall of target-disease association mining.

- Molecular: Degradation efficiency (DC50), maximum degradation (Dmax), and selectivity.

- Clinical: Model interpretability, reliability estimation, and clinical utility measures.
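For the computational metric, precision and recall of mined target-disease associations can be computed against a curated reference set. The sketch below assumes associations are represented as (target, disease) pairs; the function name and all pair labels are hypothetical placeholders.

```python
def precision_recall(mined, reference):
    """Precision and recall of mined associations against a reference set."""
    mined, reference = set(mined), set(reference)
    true_positives = len(mined & reference)
    precision = true_positives / len(mined) if mined else 0.0
    recall = true_positives / len(reference) if reference else 0.0
    return precision, recall


# Placeholder associations, not real biology.
mined_pairs = {
    ("TARGET_A", "disease_x"),
    ("TARGET_B", "disease_x"),
    ("TARGET_C", "disease_y"),
}
reference_pairs = {
    ("TARGET_A", "disease_x"),
    ("TARGET_C", "disease_y"),
    ("TARGET_D", "disease_y"),
    ("TARGET_E", "disease_x"),
}
precision, recall = precision_recall(mined_pairs, reference_pairs)
print(precision, recall)
```

Widening the proximity window in the literature query generally trades precision for recall, so reporting both values for each window size makes that trade-off visible.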

The principle of proximity—whether molecular or computational—provides a powerful framework for enhancing precision and interpretability in biomedical research. Molecular proximity technologies enable targeted manipulation of previously "undruggable" cellular processes, while computational proximity methods enhance our ability to extract meaningful patterns from complex clinical data. The integrated application of both approaches, as demonstrated in these application notes and protocols, offers a promising path toward more interpretable, reliable, and effective strategies for drug development and clinical decision support. As both fields continue to evolve, their convergence will likely yield novel insights and methodologies that further advance the precision medicine paradigm.

Chemically Induced Proximity (CIP) represents a transformative approach in biological research and therapeutic development, centered on using small molecules to control protein interactions with precise temporal resolution. Proximity, or the physical closeness of molecules, is a pervasive regulatory mechanism in biology that governs cellular processes including signaling cascades, chromatin regulation, and protein degradation [5]. CIP strategies utilize chemical inducers of proximity (CIPs)—synthetic, drug-like molecules that bring specific cellular proteins into close contact, thereby activating or modifying their function. This technology has evolved from a basic research tool to a promising therapeutic modality, enabling scientists to manipulate biological pathways in ways that were previously impossible. The lessons learned from applying CIP principles to targeted protein degradation platforms, particularly PROTACs and Molecular Glues, are now reshaping drug discovery and expanding the druggable proteome.

Fundamental Mechanisms of CIP, PROTACs, and Molecular Glues

Core Principles of Chemically Induced Proximity

At its foundation, CIP relies on creating physical proximity between proteins that may not naturally interact. This induced proximity can trigger downstream biological events such as signal transduction, protein translocation, or targeted degradation. The core mechanism involves a CIP molecule acting as a bridge between two protein domains—typically a "receptor" and a "receiver" [6]. This ternary complex formation can occur within seconds to minutes after CIP addition, allowing for precise experimental control over cellular processes. Unlike genetic approaches, CIP offers acute temporal control, enabling researchers to study rapid biological responses and avoid compensatory adaptations that may occur with chronic genetic manipulations.

PROTACs: Heterobifunctional Inducers of Degradation

PROteolysis TArgeting Chimeras (PROTACs) represent a sophisticated application of CIP principles for targeted protein degradation. These heterobifunctional molecules consist of three key elements: a ligand that binds to a Protein of Interest (POI), a second ligand that recruits an E3 ubiquitin ligase, and a chemical linker that connects these two moieties [7] [8] [9]. The PROTAC molecule simultaneously engages both the target protein and an E3 ubiquitin ligase, forming a ternary complex that brings the POI into proximity with the cellular degradation machinery. This induced proximity results in the ubiquitination of the target protein, marking it for destruction by the proteasome [9]. A significant advantage of the PROTAC mechanism is its catalytic nature—after ubiquitination, the PROTAC molecule is released and can cycle to degrade additional target proteins, enabling efficacy even at low concentrations [9].

Molecular Glues: Monovalent Stabilizers of Interaction

Molecular Glues represent a distinct class of proximity inducers that function through a monovalent mechanism. Unlike the heterobifunctional structure of PROTACs, molecular glues are typically smaller, single-pharmacophore molecules that induce proximity by stabilizing interactions between proteins [8] [9]. These compounds often work by binding to an E3 ubiquitin ligase and altering its surface, creating a new interface that can recognize and engage target proteins that would not normally interact with the ligase [9]. This induced interaction leads to ubiquitination and degradation of the target protein, similar to the outcome of PROTAC activity but through a different structural approach. Classic examples include thalidomide and its analogs, which bind to the E3 ligase cereblon (CRBN) and redirect it toward novel protein substrates [8].

Comparative Mechanisms Visualization

The diagram below illustrates the fundamental mechanistic differences between Molecular Glues and PROTACs in targeted protein degradation:

Figure 1: Molecular Glues vs. PROTACs - Comparative Mechanisms in Targeted Protein Degradation

Quantitative Comparison and Characteristics

Structural and Functional Properties

Table 1: Comparative Analysis of Molecular Glues vs. PROTACs

| Characteristic | Molecular Glues | PROTACs |

|---|---|---|

| Molecular Structure | Monovalent, single pharmacophore | Heterobifunctional, two ligands connected by linker |

| Molecular Weight | Typically lower (<500 Da) | Typically higher (>700 Da) [9] |

| Rule of Five Compliance | Usually compliant | Often non-compliant due to size [9] |

| Mechanism of Action | Binds to E3 ligase or target, creating novel interaction surface | Simultaneously binds E3 ligase and target protein, inducing proximity [8] [9] |

| Degradation Specificity | Can degrade proteins without classical binding pockets | Requires accessible binding pocket on target protein [7] [9] |

| Design Approach | Often discovered serendipitously; rational design challenging | Rational design based on known ligands and linkers [9] |

| Cell Permeability | Generally good due to smaller size | Can be challenging due to larger molecular weight [9] |

| Catalytic Activity | Yes, can induce multiple degradation events | Yes, recycled after each degradation event [9] |
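The Rule of Five row can be made concrete with a small checker. The four thresholds are Lipinski's standard criteria (molecular weight at most 500 Da, logP at most 5, at most 5 hydrogen-bond donors, at most 10 hydrogen-bond acceptors); the `Descriptors` class and both example descriptor sets are invented for illustration and do not describe any specific compound.

```python
from dataclasses import dataclass


@dataclass
class Descriptors:
    mol_weight: float        # Da
    log_p: float             # octanol-water partition coefficient
    h_bond_donors: int
    h_bond_acceptors: int


def rule_of_five_violations(d):
    """List which of Lipinski's four criteria a molecule violates."""
    violations = []
    if d.mol_weight > 500:
        violations.append("molecular weight > 500 Da")
    if d.log_p > 5:
        violations.append("logP > 5")
    if d.h_bond_donors > 5:
        violations.append("more than 5 H-bond donors")
    if d.h_bond_acceptors > 10:
        violations.append("more than 10 H-bond acceptors")
    return violations


# Hypothetical descriptor values chosen to resemble each class.
glue_like = Descriptors(mol_weight=260, log_p=0.5, h_bond_donors=2, h_bond_acceptors=5)
protac_like = Descriptors(mol_weight=850, log_p=5.5, h_bond_donors=4, h_bond_acceptors=14)

print(rule_of_five_violations(glue_like))
print(rule_of_five_violations(protac_like))
```

A compound is conventionally treated as compliant when it has no more than one violation, so the size of a typical heterobifunctional degrader already uses up that allowance before lipophilicity or hydrogen bonding are considered.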

Performance Metrics of CIP Systems

Table 2: Quantitative Comparison of CIP Systems in Experimental Models

| CIP System | Ligand Structure | Time to Effect (t~0.75~) | Effective Concentration (EC~50~) | Interacting Fraction | Key Applications |

|---|---|---|---|---|---|

| Mandi System | Synthetic agrochemical | 10.1 ± 1.7 s (500 nM) [6] | 0.43 ± 0.17 µM [6] | 77 ± 12% [6] | Protein translocation, network shuttling, zebrafish embryos |

| Rapamycin System | Natural product with synthetic analogs | 107.9 ± 16.4 s (500 nM) [6] | Varies by analog | 71 ± 3% [6] | Signal transduction, transcription control, immunology |

| ABA System | Phytohormone (ABA-AM) | 3.5 ± 0.1 min (5 µM) [6] | 30.8 ± 15.5 µM [6] | 41 ± 6% [6] | Gene expression, stress response pathways |

| GA3 System | Phytohormone (GA3-AM) | 2.4 ± 0.5 min (5 µM) [6] | Not specified | Not specified | Plant biology, developmental studies |

Experimental Protocols and Applications

Protocol: Mandi-Induced Protein Translocation Assay

Purpose: To quantitatively measure Mandi-induced protein translocation kinetics in mammalian cells [6].

Materials:

- Mandi compound (commercially available synthetic agrochemical) [6]

- Plasmids: pPYR~Mandi~-TOM20 (receptor fused to mitochondrial outer membrane protein) and pABI-EGFP (cytosolic receiver fused to EGFP) [6]

- Cell line: HEK293T or other mammalian cell lines

- Imaging system: Automated epifluorescence microscope with environmental control and integrated liquid handling

- Analysis software: Image analysis platform with machine learning cell segmentation capabilities

Procedure:

- Cell preparation and transfection: Plate HEK293T cells in 96-well imaging plates at 50,000 cells/well. Transfect with both pPYR~Mandi~-TOM20 and pABI-EGFP using standard transfection reagents. Incubate for 24-48 hours to allow protein expression.

- Microscope setup: Configure automated microscope with temperature (37°C) and CO~2~ (5%) control. Set up time-lapse imaging with appropriate filter sets for EGFP and mitochondrial markers. Program liquid handling system for Mandi addition during imaging.

- Baseline imaging: Acquire images for 2 minutes to establish baseline localization of ABI-EGFP.

- Mandi addition: Add Mandi to final concentrations ranging from 10 nM to 5 µM while continuing time-lapse imaging. For kinetic comparisons with other CIPs, use 500 nM concentration.

- Image acquisition: Continue imaging for 15-30 minutes post-Mandi addition, capturing images every 5-10 seconds.

- Quantitative analysis: Use machine learning algorithms for automated cell segmentation and intensity measurement. Calculate translocation ratio as the fraction of ABI-EGFP signal colocalized with mitochondrial markers over time.

- Kinetic parameter extraction: Determine t~0.75~ values (time at which translocation reaches 75% of maximum) from translocation curves. Compare across different CIP systems and concentrations.

Troubleshooting: Optimize transfection efficiency if basal interaction is observed. Adjust Mandi concentration if translocation is too fast to resolve. Include controls with empty vector transfection to account for non-specific effects.
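The kinetic parameter extraction step can be sketched as a linear interpolation on the translocation curve. This is a simplified illustration under stated assumptions: the first sample is taken as the moment of Mandi addition, the curve is baseline-subtracted before the 75% level is computed, and the function name and toy values are hypothetical. Published analyses may fit a kinetic model instead.

```python
def time_to_fraction(times, signal, fraction=0.75):
    """Time at which the signal first reaches `fraction` of its maximal
    rise above baseline, found by linear interpolation between samples."""
    baseline = signal[0]
    plateau = max(signal)
    target = baseline + fraction * (plateau - baseline)
    for k in range(1, len(signal)):
        lower, upper = signal[k - 1], signal[k]
        if lower < target <= upper:
            step = (target - lower) / (upper - lower)
            return times[k - 1] + step * (times[k] - times[k - 1])
    return None  # flat curve or target never crossed


# Toy translocation ratios sampled every 5 s after addition, not real data.
times_s = [0, 5, 10, 15, 20, 25]
translocation = [0.10, 0.30, 0.55, 0.70, 0.74, 0.74]
t75 = time_to_fraction(times_s, translocation)
print(t75)
```

Because the estimate is interpolated between frames, its resolution is bounded by the imaging interval, which is one reason the protocol recommends 5-10 second acquisition for fast systems.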

Protocol: PROTAC-Induced Protein Degradation Assessment

Purpose: To evaluate efficiency of PROTAC-mediated protein degradation in cellular models.

Materials:

- PROTAC molecules (heterobifunctional compounds with target protein ligand and E3 ligase recruiter)

- Cell lines expressing target protein of interest

- Western blot equipment or target-specific immunoassays

- Proteasome inhibitor (e.g., MG132) as control

- E3 ligase ligands (e.g., CRBN or VHL ligands depending on PROTAC design)

Procedure:

- Cell treatment: Seed appropriate cell lines in 6-well plates and allow to adhere overnight. Treat cells with varying concentrations of PROTAC (typically 1 nM to 10 µM) for different time points (4-24 hours).

- Control conditions: Include vehicle control, proteasome inhibitor control (MG132, 10 µM), and competition control with excess E3 ligase ligand.

- Sample collection: Harvest cells at designated time points and prepare lysates for protein quantification.

- Target protein detection: Perform Western blotting or specific immunoassays to quantify target protein levels. Normalize to loading controls (e.g., GAPDH, actin).

- Dose-response analysis: Calculate percentage degradation relative to vehicle control across different PROTAC concentrations.

- Ternary complex assessment: For mechanistic studies, employ techniques like cellular thermal shift assays or proximity ligation assays to confirm ternary complex formation.

- Functional consequences: Assess downstream biological effects of target degradation, such as pathway modulation or cell viability.

Applications: This protocol enables characterization of PROTAC efficiency, specificity, and kinetics, supporting optimization of degrader molecules for therapeutic development [7] [9].
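The dose-response analysis step can be illustrated with a short calculation of percentage degradation, Dmax, and DC50. Several assumptions apply: band intensities are already normalized to the loading control, DC50 is taken here as the concentration giving half of the observed Dmax (some groups instead define it at 50% absolute degradation), and the value is estimated by log-linear interpolation rather than a fitted curve. Function names and data are hypothetical.

```python
import math


def percent_degradation(treated, vehicle):
    """Loss of normalized target signal relative to the vehicle control."""
    return 100.0 * (1.0 - treated / vehicle)


def dmax_and_dc50(concentrations_nm, degradation_pct):
    """Return (Dmax, DC50). Dmax is the largest observed degradation;
    DC50 is interpolated on a log10 concentration axis at Dmax / 2."""
    dmax = max(degradation_pct)
    half = dmax / 2.0
    points = list(zip(concentrations_nm, degradation_pct))
    for (c_lo, d_lo), (c_hi, d_hi) in zip(points, points[1:]):
        if d_lo < half <= d_hi:
            step = (half - d_lo) / (d_hi - d_lo)
            log_c = math.log10(c_lo) + step * (math.log10(c_hi) - math.log10(c_lo))
            return dmax, 10 ** log_c
    return dmax, None  # half-maximal level not bracketed by the tested doses


# Toy normalized band intensities, not real data.
concentrations_nm = [1, 10, 100, 1000, 10000]
vehicle_signal = 1.0
treated_signal = [0.95, 0.70, 0.30, 0.10, 0.20]
degradation = [percent_degradation(t, vehicle_signal) for t in treated_signal]
dmax, dc50 = dmax_and_dc50(concentrations_nm, degradation)
print(round(dmax, 1), round(dc50, 1))
```

With only a handful of concentrations the interpolated DC50 is coarse; a denser dilution series and a fitted dose-response model give a more reliable estimate.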

Experimental Workflow Visualization

The following diagram illustrates a generalized experimental workflow for evaluating CIP systems:

Figure 2: Generalized Experimental Workflow for CIP System Evaluation

Research Reagent Solutions

Essential Research Tools for CIP Studies

Table 3: Key Research Reagents for CIP and Targeted Protein Degradation Studies

| Reagent Category | Specific Examples | Function/Application | Commercial Sources |

|---|---|---|---|

| CIP Molecules | Mandipropamid, Rapamycin, Abscisic Acid (ABA), Gibberellic Acid (GA3) | Induce proximity between engineered protein pairs; study kinetics of induced interactions [6] | Commercial chemical suppliers (e.g., Sigma-Aldrich, Tocris) |

| E3 Ubiquitin Ligases | Cereblon (CRBN), Von Hippel-Lindau (VHL), BIRC3, BIRC7, HERC4, WWP2 | Key components of ubiquitination machinery; recruited by PROTACs and molecular glues [9] | SignalChem Biotech, Sino Biological |

| PROTAC Components | Target protein ligands (e.g., AR binders, ER binders), E3 ligase ligands, Chemical linkers | Building blocks for PROTAC design and optimization; enable targeted degradation of specific proteins [9] | Custom synthesis, specialized chemical suppliers |

| Molecular Glue Compounds | Thalidomide, Lenalidomide, Pomalidomide, CC-90009, E7820 | Induce novel protein-protein interactions; redirect E3 ligase activity to non-native substrates [8] [9] | Pharmaceutical suppliers, chemical manufacturers |

| Detection Tools | Ubiquitination assays, Proteasome activity probes, Protein-protein interaction assays | Validate mechanism of action; confirm ternary complex formation and degradation efficiency | Life science suppliers (e.g., Promega, Abcam, Thermo Fisher) |

| Cell-Based Assays | Luciferase reporter systems, Split-TEV protease assays, Colocalization markers | Quantitative assessment of CIP efficiency; dose-response characterization [6] | Academic repositories, commercial assay developers |

Clinical Applications and Therapeutic Impact

Clinical Translation of CIP Technologies

The transition of CIP technologies from basic research to clinical applications represents a significant milestone in chemical biology and drug discovery. PROTACs have demonstrated remarkable progress in clinical development, with multiple candidates advancing through Phase I-III trials [9]. Bavdegalutamide (ARV-110), an androgen receptor-targeting PROTAC, has completed Phase II studies for prostate cancer, while Vepdegestrant (ARV-471), targeting the estrogen receptor for breast cancer, has advanced to NDA/BLA submission [9]. These clinical successes validate the CIP approach for targeting historically challenging proteins, including transcription factors that lack conventional enzymatic activity.

Molecular glue degraders have an established clinical track record, with drugs like thalidomide, lenalidomide, and pomalidomide approved for various hematological malignancies [9]. These immunomodulatory drugs (IMiDs), which were serendipitously discovered to function as molecular glues, have paved the way for deliberate development of glue-based therapeutics. Newer clinical-stage candidates include CC-90009 targeting GSPT1 and E7820 targeting RBM39, demonstrating expansion to novel target classes [9].

Clinical-Stage PROTACs

Table 4: Representative Clinical-Stage PROTACs in Development

| Molecule Name | Target Protein | E3 Ligase | Clinical Phase | Indication |

|---|---|---|---|---|

| ARV-471 (Vepdegestrant) | Estrogen Receptor (ER) | CRBN | NDA/BLA | ER+/HER2− breast cancer [9] |

| ARV-766 | Androgen Receptor (AR) | CRBN | Phase II | Prostate cancer [9] |

| ARV-110 (Bavdegalutamide) | Androgen Receptor (AR) | CRBN | Phase II | Prostate cancer [9] |

| DT-2216 | Bcl-XL | VHL | Phase I/II | Hematological malignancies [9] |

| NX-2127 | BTK | CRBN | Phase I | B-cell malignancies [9] |

| NX-5948 | BTK | CRBN | Phase I | B-cell malignancies [9] |

| CFT1946 | BRAF V600 | CRBN | Phase I | Melanoma with BRAF mutations [9] |

| KT-474 | IRAK4 | CRBN | Phase II | Auto-inflammatory diseases [9] |

Technical Challenges and Optimization Strategies

Addressing Limitations in CIP Implementation

Despite the considerable promise of CIP technologies, several technical challenges require careful consideration in experimental design and therapeutic development:

PROTAC-Specific Challenges: The relatively large molecular weight (>700 Da) of many PROTACs often places them outside the "Rule of Five" guidelines for drug-likeness, potentially leading to poor membrane permeability and suboptimal pharmacokinetic properties [9]. Optimization strategies include rational linker design incorporating rigid structures such as spirocycles or piperidines, which can significantly improve degradation potency and oral bioavailability [9]. Additionally, expanding the repertoire of E3 ligase ligands beyond the commonly used CRBN and VHL recruiters may enhance tissue specificity and reduce potential resistance mechanisms.

Molecular Glue Challenges: The discovery and rational design of molecular glues remain challenging due to the unpredictable nature of the protein-protein interactions they stabilize [9]. While serendipitous discovery has historically driven the field, emerging approaches include systematic screening of compound libraries and structure-based design leveraging structural biology insights. Recent strategies have shown promise in converting conventional inhibitors into degraders by adding covalent handles that promote interaction with E3 ligases [9].

General CIP Considerations: For all CIP systems, achieving optimal specificity and minimal off-target effects requires careful validation. Control experiments should include catalytically inactive versions, competition with excess ligand, and assessment of pathway modulation beyond the intended targets. The temporal control offered by CIP systems is a distinct advantage, but researchers must optimize timing and duration of induction to match biological contexts.

The field of Chemically Induced Proximity has revolutionized our approach to biological research and therapeutic development, providing unprecedented control over protein interactions and cellular processes. The lessons learned from PROTACs and Molecular Glues highlight both the immense potential and ongoing challenges in proximity-based technologies. As these approaches continue to evolve, several exciting directions are emerging: the integration of artificial intelligence and computational methods for rational degrader design; the expansion of the E3 ligase toolbox beyond the commonly used CRBN and VHL recruiters; and the development of conditional and tissue-specific CIP systems for enhanced precision. The continuing translation of CIP technologies into clinical applications promises to expand the druggable proteome and create new therapeutic options for diseases previously considered untreatable. By applying the principles, protocols, and considerations outlined in these application notes, researchers can leverage the full potential of proximity-based approaches in their scientific and therapeutic endeavors.

The Core Concept of Proximity Search in Information Retrieval and Machine Learning

Proximity search refers to computational methods for quantifying the similarity, dissimilarity, or spatial relationship between entities within a dataset. In clinical interpretability research, these mechanisms enable researchers to identify patterns, cluster similar patient profiles, and elucidate decision-making processes of complex machine learning (ML) models. By measuring how "close" or "distant" data points are from one another in a defined feature space, proximity analysis provides a foundational framework for interpreting model behavior, validating clinical relevance, and ensuring that automated decisions align with established medical knowledge [10]. The translation of these technical proximity measures into clinically actionable insights remains a significant challenge, necessitating specialized application notes and protocols for drug development professionals and clinical researchers [11].

Foundational Concepts and Measures

Proximity measures vary significantly depending on data type and clinical application. The core principle involves converting clinical data into a representational space where distance metrics can quantify similarity.

Proximity Measures for Different Data Types

Table: Proximity Measures for Clinical Data Types

| Data Type | Common Proximity Measures | Clinical Application Examples | Key Considerations |

|---|---|---|---|

| Binary Attributes | Jaccard Similarity, Hamming Distance | Patient stratification based on symptom presence/absence; treatment outcome classification | Differentiate between symmetric and asymmetric attributes; pass/fail outcomes are typically asymmetric [12]. |

| Nominal Attributes | Simple Matching, Hamming Distance | Demographic pattern analysis; disease subtype categorization | Useful for categorical data without inherent order (e.g., race, blood type) [12]. |

| Ordinal Attributes | Manhattan Distance, Euclidean Distance | Severity staging (e.g., cancer stages); priority scoring | Requires rank-based distance calculation to preserve order relationships [12]. |

| Text Data | Cosine Similarity, Doc2Vec Embeddings | Patent text analysis for drug discovery; clinical note similarity | Captures semantic relationships beyond keyword matching; Doc2Vec outperforms frequency-based methods for document similarity [13]. |

| Geospatial Data | Haversine Formula, Euclidean Distance | Healthcare access studies; epidemic outbreak tracking | Requires specialized formulas for earth's curvature; often optimized with spatial indexing [14]. |
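To make the geospatial row of the table concrete, the following is a minimal sketch of the Haversine formula for great-circle distance. The function name `haversine_km` and the mean Earth radius constant are illustrative choices, and the spherical-Earth approximation is an assumption that may be too coarse for some studies.

```python
import math

EARTH_RADIUS_KM = 6371.0  # mean Earth radius; a spherical approximation


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance in kilometres between two (lat, lon) points in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    d_phi = phi2 - phi1
    d_lambda = math.radians(lon2 - lon1)
    # Haversine of the central angle between the two points
    h = math.sin(d_phi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(d_lambda / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(h))


# Hypothetical example: one degree of longitude along the equator
one_degree_km = haversine_km(0.0, 0.0, 0.0, 1.0)
```

For large patient or facility tables, a spatial index of the kind listed in the toolkit table would normally narrow the candidate pairs before a distance like this is evaluated.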

Technical Implementation of Binary Proximity Measures

For binary data commonly encountered in clinical applications (e.g., presence/absence of symptoms, positive/negative test results), asymmetric proximity calculations are particularly relevant. The dissimilarity between two patients m and n can be calculated using the following approach for asymmetric binary attributes:

Step 1: Construct a contingency table where:

- a = number of attributes where both patients m and n have value 1 (e.g., both have the symptom)

- b = number of attributes where m = 1 and n = 0

- c = number of attributes where m = 0 and n = 1

- e = number of attributes where both m and n have value 0 (e.g., both lack the symptom)

Step 2: Apply the asymmetric dissimilarity formula:

dissimilarity = (b + c) / (a + b + c)

This approach excludes e (joint absences) from consideration, which is appropriate for many clinical contexts where mutual absence of a symptom may not indicate similarity [12].
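The two steps above can be sketched in a few lines of Python. The helper names and the two example patients are hypothetical, and the choice to return 0.0 when neither patient has any positive attribute is an assumption, since the formula is undefined in that case.

```python
def binary_counts(m, n):
    """Return the contingency counts (a, b, c, e) for two equal-length 0/1 vectors."""
    if len(m) != len(n):
        raise ValueError("patients must have the same number of attributes")
    a = sum(1 for x, y in zip(m, n) if x == 1 and y == 1)  # both present
    b = sum(1 for x, y in zip(m, n) if x == 1 and y == 0)  # only m present
    c = sum(1 for x, y in zip(m, n) if x == 0 and y == 1)  # only n present
    e = sum(1 for x, y in zip(m, n) if x == 0 and y == 0)  # both absent
    return a, b, c, e


def asymmetric_dissimilarity(m, n):
    """(b + c) / (a + b + c); joint absences (e) are ignored."""
    a, b, c, _ = binary_counts(m, n)
    denom = a + b + c
    # Assumption: with no positive attribute in either patient, report 0.0
    return 0.0 if denom == 0 else (b + c) / denom


# Hypothetical symptom vectors for two patients
patient_m = [1, 1, 0, 0, 1, 0]
patient_n = [1, 0, 0, 0, 1, 1]
d_mn = asymmetric_dissimilarity(patient_m, patient_n)
```

Here a = 2, b = 1, c = 1 and e = 2, so the dissimilarity is (1 + 1) / (2 + 1 + 1) = 0.5; the two shared absences do not enter the result.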

Applications in Clinical Interpretability Research

Interpretable Machine Learning for Clinical Prediction

Recent research demonstrates how proximity-based interpretability methods can bridge the gap between complex ML models and clinical decision-making. In a comprehensive study on ICU mortality prediction, researchers developed and rigorously evaluated two ML models (Random Forest and XGBoost) using data from 131,051 ICU admissions across 208 hospitals. The random forest model demonstrated an AUROC of 0.912 with a complete dataset (130,810 patients, 5.58% ICU mortality) and 0.839 with a restricted dataset excluding patients with missing data (5,661 patients, 23.65% ICU mortality). The XGBoost model achieved an AUROC of 0.924 with the first dataset and 0.834 with the second. Through multiple interpretation mechanisms, the study consistently identified lactate levels, arterial pH, and body temperature as critical predictors of ICU mortality across datasets, cross-validation folds, and models. This alignment with routinely collected clinical variables enhances model interpretability for clinical use and promotes greater understanding and adoption among clinicians [11].

Evaluating Model Consistency with Clinical Protocols

A critical challenge in clinical ML is ensuring model predictions align with established medical protocols. Researchers have proposed specific metrics to assess both the accuracy of ML models relative to established protocols and the similarity between explanations provided by clinical rule-based systems and rules extracted from ML models. In one approach, researchers trained two neural networks—one exclusively on data, and another integrating a clinical protocol—on the Pima Indians Diabetes dataset. Results demonstrated that the integrated ML model achieved comparable performance to the fully data-driven model while exhibiting superior accuracy relative to the clinical protocol alone. Furthermore, the integrated model provided explanations for predictions that aligned more closely with the clinical protocol compared to the data-driven model, ensuring enhanced continuity of care [10].

Advanced Proximity Applications in Drug Development

Proximity-based methods are revolutionizing multiple aspects of drug development:

Patent Analysis and Innovation Tracking: Researchers have applied document vector representations (Doc2Vec) to patent abstracts followed by cosine similarity measurements to quantify proximity in "idea space." This approach revealed that patents within the same city show 0.02-0.05 standard deviations higher text similarity compared to patents from different cities, suggesting geographically constrained knowledge flows. This method provides an alternative to citation-based analysis of knowledge transfer in pharmaceutical innovation [13].

Genetic Disorder Classification: For complex genetic disorders like thalassemia, probabilistic state space models leverage the spatial ordering of genes along chromosomes to classify disease profiles from targeted next-generation sequencing data. One approach achieved a sensitivity of 0.99 and specificity of 0.93 for thalassemia detection, with 91.5% accuracy for characterizing subtypes. This spatial proximity-based method outperforms alternatives, particularly in specificity, and is broadly applicable to other genetic disorders [15].

Protein Representation Learning: Multimodal bidirectional hierarchical fusion frameworks effectively merge sequence representations from protein language models with structural features from graph neural networks. This approach employs attention and gating mechanisms to enable interaction between sequential and structural modalities, establishing new state-of-the-art performance on tasks including enzyme classification, model quality assessment, and protein-ligand binding affinity prediction [15].

Experimental Protocols

Protocol: Measuring Proximity in Clinical Text Data for Knowledge Discovery

Objective: Quantify similarity between clinical text documents (e.g., patent abstracts, clinical notes) to map knowledge relationships and innovation pathways.

Materials:

- Collection of text documents (e.g., patent abstracts from USPTO Bulk Data Products)

- Computational environment with Python and libraries (gensim, scikit-learn, numpy)

- Document metadata (e.g., location, time, technology classification)

Methodology:

Text Preprocessing:

- Extract and clean relevant text (e.g., patent abstracts)

- Perform standard NLP preprocessing: tokenization, lowercasing, removal of stop words and punctuation

- Optionally apply stemming or lemmatization

Document Vectorization:

- Implement Document Vectors (Doc2Vec) with the following parameters:

- Vector size: 300 dimensions

- Window size: 5-10 words

- Minimum word count: 5-10

- Training epochs: 20-40

- Negative sampling: 5-25

- Train model on entire document corpus

Similarity Calculation:

- Extract document vectors for all documents of interest

- Calculate pairwise cosine similarities between document vectors:

similarity = (A · B) / (||A|| ||B||)

- For localization studies, compare similarity distributions for:

- Documents from same geographic region (e.g., same city)

- Documents from different geographic regions

Statistical Analysis:

- Normalize similarity scores using z-score transformation

- Perform t-tests or ANOVA to compare similarity between groups

- Calculate effect sizes for significant differences

Validation:

- Confirm known relationships: documents sharing classes, citations, or inventors should have higher similarity

- Compare with alternative measures (e.g., term frequency-inverse document frequency)

- Assess robustness through cross-validation [13]
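The similarity and normalization steps of this protocol can be sketched without any third-party library. The vectors below are hypothetical three-dimensional placeholders standing in for trained Doc2Vec embeddings, the city labels are invented, and the sketch stops at a difference in mean z-scores rather than running the t-test or ANOVA described above.

```python
import math
from itertools import combinations
from statistics import mean, pstdev


def cosine_similarity(a, b):
    """(A . B) / (||A|| ||B||) for two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("cosine similarity is undefined for a zero vector")
    return dot / (norm_a * norm_b)


# Hypothetical document vectors and metadata (placeholders for Doc2Vec output)
docs = {
    "doc_a": {"city": "Boston", "vec": [0.9, 0.1, 0.0]},
    "doc_b": {"city": "Boston", "vec": [0.8, 0.2, 0.1]},
    "doc_c": {"city": "Basel", "vec": [0.1, 0.9, 0.2]},
    "doc_d": {"city": "Basel", "vec": [0.0, 0.8, 0.3]},
}

# Pairwise similarities, each tagged with whether the pair shares a city
pairs = []
for (_, d1), (_, d2) in combinations(docs.items(), 2):
    pairs.append((cosine_similarity(d1["vec"], d2["vec"]), d1["city"] == d2["city"]))

# z-score normalization across all pairs
sims = [s for s, _ in pairs]
mu, sigma = mean(sims), pstdev(sims)
z_scores = [((s - mu) / sigma, same) for s, same in pairs]

# Difference in mean standardized similarity: same-city minus different-city
same_city_z = mean(z for z, same in z_scores if same)
diff_city_z = mean(z for z, same in z_scores if not same)
gap = same_city_z - diff_city_z
```

Expressing the gap in standard deviation units mirrors how the localization result is reported earlier in this section; a real analysis would add the significance test and effect size on the full corpus.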

Protocol: Binary Proximity Analysis for Patient Stratification

Objective: Identify similar patient profiles based on binary clinical attributes (e.g., symptom presence, test results) for cohort identification and comparative effectiveness research.

Materials:

- Binary patient dataset (patients × attributes)

- Computational environment with standard statistical software

- Clinical expertise for attribute interpretation

Methodology:

Data Preparation:

- Code all attributes as binary (0/1) values

- Determine attribute symmetry:

- Symmetric: Both presences (1,1) and absences (0,0) contribute equally to similarity

- Asymmetric: Only co-presences (1,1) indicate similarity; co-absences (0,0) are uninformative or excluded

Dissimilarity Matrix Calculation:

- For asymmetric binary attributes (most common in clinical applications):

- For each patient pair (i, j), calculate:

- a = number of attributes where both patients have 1

- b = number of attributes where i=1 and j=0

- c = number of attributes where i=0 and j=1

- e = number of attributes where both have 0

- Compute dissimilarity:

d(i,j) = (b + c) / (a + b + c)

- For symmetric binary attributes:

- Use:

d(i,j) = (b + c) / (a + b + c + e)

Analysis and Interpretation:

- Construct dissimilarity matrix across all patient pairs

- Identify patient clusters using hierarchical clustering or similar methods

- Validate clusters with clinical outcomes or expert assessment

- Characterize clusters by their defining clinical features
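As a rough illustration of the matrix and clustering steps, the sketch below builds the pairwise dissimilarity matrix and groups patients by linking every pair whose dissimilarity falls at or below a threshold, which corresponds to cutting a single-linkage dendrogram at that height. The patient vectors, the 0.5 threshold, and all function names are hypothetical; a production analysis would more likely rely on an established clustering library.

```python
def dissimilarity(p, q, symmetric=False):
    """Binary dissimilarity; joint absences count only when symmetric=True."""
    a = sum(1 for x, y in zip(p, q) if x == 1 and y == 1)
    e = sum(1 for x, y in zip(p, q) if x == 0 and y == 0)
    mismatches = len(p) - a - e  # equals b + c
    denom = a + mismatches + (e if symmetric else 0)
    return 0.0 if denom == 0 else mismatches / denom


def dissimilarity_matrix(patients, symmetric=False):
    n = len(patients)
    return [[dissimilarity(patients[i], patients[j], symmetric) for j in range(n)]
            for i in range(n)]


def single_linkage_clusters(matrix, threshold):
    """Connected components of the graph joining pairs with d <= threshold."""
    n = len(matrix)
    parent = list(range(n))

    def find(i):
        while parent[i] != i:
            parent[i] = parent[parent[i]]  # path compression
            i = parent[i]
        return i

    for i in range(n):
        for j in range(i + 1, n):
            if matrix[i][j] <= threshold:
                parent[find(i)] = find(j)

    groups = {}
    for i in range(n):
        groups.setdefault(find(i), []).append(i)
    return sorted(groups.values())


# Hypothetical patients x attributes matrix (rows are patients)
patients = [
    [1, 1, 0, 0, 0],
    [1, 1, 1, 0, 0],
    [0, 0, 0, 1, 1],
    [0, 0, 1, 1, 1],
]
matrix = dissimilarity_matrix(patients)
clusters = single_linkage_clusters(matrix, threshold=0.5)
```

With these toy data, patients 0 and 1 form one cluster and patients 2 and 3 another; the clinical validation and characterization steps still have to be carried out by domain experts.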

Application Example: In a study of 57 individuals with thalassemia profiles, a probabilistic state space model leveraging spatial proximity along chromosomes achieved 91.5% accuracy for characterizing subtypes, rising to 93.9% when low-quality samples were excluded using automated quality control [15].

Protocol: Integrating Proximity Measures with Clinical Rules for Model Interpretability

Objective: Ensure ML model predictions align with clinical protocols and provide interpretable explanations consistent with medical knowledge.

Materials:

- Clinical dataset with outcomes

- Established clinical protocol or decision rules

- ML modeling environment (Python with scikit-learn, tensorflow/pytorch)

Methodology:

Baseline Model Development:

- Train a standard data-driven ML model (e.g., neural network) using only patient data

- Evaluate performance using standard metrics (accuracy, AUROC)

Protocol-Integrated Model Development:

- Formalize clinical protocol as computable rules or constraints

- Integrate protocol knowledge during model training through:

- Custom loss functions that penalize protocol deviations

- Structured model architectures that encode protocol logic

- Multi-task learning that jointly predicts outcomes and protocol adherence

Explanation Similarity Assessment:

- Extract explanatory rules from both models (e.g., via rule extraction techniques)

- Extract rules from the clinical protocol

- Calculate explanation distance using:

- Rule syntax similarity (e.g., Jaccard similarity between condition sets)

- Semantic similarity (e.g., overlap in clinical concepts referenced)

- Outcome alignment (agreement in recommended actions)

Comprehensive Evaluation:

- Compare model performance metrics

- Assess protocol adherence on critical cases

- Measure explanation similarity between models and clinical protocol

- Evaluate clinical utility through expert review or simulated cases
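The explanation similarity step can be illustrated with two of the listed measures: Jaccard similarity between rule condition sets and simple agreement between recommended outcomes. All rule conditions and predictions below are invented for illustration and carry no clinical meaning; they are not taken from the cited study.

```python
def jaccard_similarity(set_a, set_b):
    """|A intersect B| / |A union B|; two empty sets are treated as identical."""
    union = set_a | set_b
    if not union:
        return 1.0
    return len(set_a & set_b) / len(union)


def outcome_agreement(preds_a, preds_b):
    """Fraction of cases on which two rule sets recommend the same outcome."""
    if len(preds_a) != len(preds_b) or not preds_a:
        raise ValueError("prediction lists must be non-empty and of equal length")
    return sum(x == y for x, y in zip(preds_a, preds_b)) / len(preds_a)


# Hypothetical condition sets (illustrative strings, not clinical guidance)
protocol_rule = {"glucose_high", "bmi_high"}
integrated_rule = {"glucose_high", "bmi_high", "age_over_45"}
data_driven_rule = {"glucose_very_high", "pregnancies_many", "age_over_45"}

sim_integrated = jaccard_similarity(protocol_rule, integrated_rule)
sim_data_driven = jaccard_similarity(protocol_rule, data_driven_rule)

# Hypothetical recommendations on four cases
agreement = outcome_agreement([1, 0, 1, 1], [1, 0, 0, 1])
```

In this toy case the protocol-integrated rule shares two of three conditions with the protocol, while the data-driven rule shares none, which is the kind of contrast the explanation distance is meant to expose.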

Application: This approach has demonstrated that integrated models can achieve comparable performance to data-driven models while providing explanations that align more closely with clinical protocols, enhancing continuity of care and interpretability [10].

The Scientist's Toolkit: Research Reagent Solutions

Table: Essential Computational Tools for Proximity Search in Clinical Research

| Tool/Category | Specific Examples | Function in Proximity Analysis | Implementation Considerations |

|---|---|---|---|

| Spatial Indexing Structures | R-trees, kd-trees, Geohashing | Enables efficient proximity search in large clinical datasets; essential for geospatial health studies | R-trees effective for multi-dimensional data; kd-trees suitable for fixed datasets; geohashing provides compact representation [14]. |

| Similarity Measurement Libraries | scikit-learn, gensim, NumPy | Provides implemented proximity measures (cosine, Jaccard, Euclidean) and embedding methods (Doc2Vec, Word2Vec) | Pre-optimized implementations ensure computational efficiency; gensim specializes in document embedding methods [13]. |

| Clinical Rule Formalization Tools | Clinical Quality Language (CQL), Rule-based ML frameworks | Encodes clinical protocols as computable rules for integration with ML models and explanation comparison | Requires collaboration between clinicians and data scientists; CQL provides standardized approach [10]. |

| Visualization Platforms | TensorBoard Projector, matplotlib, Plotly | Creates low-dimensional embeddings of high-dimensional clinical data for visual proximity assessment | Enables intuitive validation of proximity relationships; critical for interdisciplinary communication. |

| Optimization Services | Database spatial extensions (PostGIS), Search optimization services | Accelerates proximity queries in large clinical databases; essential for real-time applications | Reduces computational burden; PostgreSQL with PostGIS provides robust open-source solution [14]. |

Proximity search mechanisms provide fundamental methodologies for enhancing interpretability in clinical machine learning applications. By quantifying similarities between patient profiles, clinical texts, and molecular structures, these approaches enable more transparent and clinically aligned AI systems. The experimental protocols and application notes presented here offer researchers and drug development professionals practical frameworks for implementing these techniques across diverse healthcare contexts. As the field advances, further integration of proximity-based interpretability methods into clinical workflow

Why Interpretability is Non-Negotiable in Clinical Decision-Making and Drug Development

In clinical decision-making and drug development, machine learning (ML) and artificial intelligence (AI) models are being deployed for high-stakes predictions including disease diagnosis, treatment selection, and patient risk stratification [16] [17]. While these models can outperform traditional statistical approaches by characterizing complex, nonlinear relationships, their adoption is critically dependent on interpretability—the ability to understand the reasoning behind a model's predictions [16] [18]. In contrast to "black box" models whose internal workings are opaque, interpretable models provide insights that are essential for building trust, ensuring safety, facilitating regulatory compliance, and ultimately, improving human decision-making [16] [19] [18].

The U.S. Government's Blueprint for an AI Bill of Rights and guidelines from the U.S. Food and Drug Administration (FDA) explicitly emphasize the principle of "Notice and Explanation," making interpretability a regulatory expectation and a prerequisite for the ethical deployment of AI in healthcare [16]. This document outlines the application of interpretable ML frameworks, provides experimental protocols for model interpretation, and situates these advancements within a novel research context: the use of proximity search mechanisms to enhance clinical interpretability.

Core Concepts and Definitions

Within the AI in healthcare landscape, key terms are defined with specific nuances [19] [18]:

- Transparency involves the disclosure of an AI system's data sources, development processes, limitations, and operational use. It answers the question, "What happened?" by making appropriate information available to relevant stakeholders [19].

- Explainability is a representation of the mechanisms underlying an AI system's operation. It answers the question, "How was the decision made?" by expressing the important factors that influenced the results in a way humans can understand [19] [18].

- Interpretability goes a step further, enabling humans to grasp the causal connections within a model and its outputs. It answers the question, "Why should I trust this prediction?" allowing a user to consistently predict the model's behavior [16] [18].

Applications and Quantitative Evidence

Interpretability is not a theoretical concern but a practical necessity across the clinical and pharmaceutical R&D spectrum. The table below summarizes evidence of its application and impact.

Table 1: Documented Applications and Performance of Interpretable ML in Healthcare

| Application Domain | Interpretability Method | Quantitative Performance / Impact | Key Interpretability Insight |

|---|---|---|---|

| Disease Prediction (Cardiovascular, Cancer) | Random Forest, Support Vector Machines [17] | AUC of 0.85 (95% CI 0.81-0.89) for cardiovascular prediction; 83% accuracy for cancer prognosis [17] | Identifies key risk factors (e.g., blood pressure, genetic markers) from real-world data [17]. |

| Medical Visual Question Answering (GI Endoscopy) | Multimodal Explanations (Heatmaps, Text) [20] | Evaluated via BLEU, ROUGE, METEOR scores and expert-rated clinical relevance [20] | Heatmaps localize pathological features; textual reasoning aligns with clinical logic, building radiologist trust [20]. |

| Psychosomatic Disease Analysis | Knowledge Graph with Proximity Metrics [21] | Graph constructed with 9668 triples; closer network distances predicted similarity in clinical manifestations [21] | Proximity between diseases and symptoms reveals potential comorbidity and shared treatment pathways [21]. |

| Drug Discovery: Hit-to-Lead | AI-Guided Retrosynthesis & Scaffold Enumeration [22] | Generated >26,000 virtual analogs, yielding sub-nanomolar inhibitors with 4,500-fold potency improvement [22] | Interpretation of structure-activity relationships (SAR) guides rational chemical optimization [22]. |

| Target Engagement Validation | Cellular Thermal Shift Assay (CETSA) [22] | Quantified dose-dependent target (DPP9) engagement in rat tissue, confirming cellular efficacy [22] | Provides direct, empirical evidence of mechanistic drug action beyond in-silico prediction. |

Experimental Protocols for Model Interpretation

Protocol: Global Feature Importance using Permutation

Objective: To rank all input variables (features) by their average importance to a model's predictive accuracy across an entire population or dataset [16].

Materials: A trained ML model, a held-out test dataset.

Procedure:

- Calculate the model's baseline performance (e.g., accuracy, AUC) on the test set.

- For each feature (e.g., blood_pressure, genetic_marker_X):

a. Randomly shuffle the values of that feature across the test set, breaking its relationship with the outcome.

b. Recalculate the model's performance using this permuted dataset.

c. Record the decrease in performance (e.g., baseline AUC - permuted AUC).

- The average performance decrease for each feature, normalized across all features, represents its global importance.

- Features causing the largest performance drop when shuffled are deemed most critical.

Interpretation: This model-agnostic method reveals which factors the model relies on most for its average prediction, useful for hypothesis generation and model auditing [16].
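A minimal, dependency-free sketch of the permutation procedure follows. The toy model, the two-feature test set, and the use of accuracy as the metric are all assumptions for illustration; the sketch reports raw mean drops and leaves out the normalization step.

```python
import random


def accuracy(model, X, y):
    return sum(model(row) == label for row, label in zip(X, y)) / len(y)


def permutation_importance(model, X, y, n_repeats=20, seed=0):
    """Mean drop in accuracy when each feature column is shuffled in turn."""
    rng = random.Random(seed)
    baseline = accuracy(model, X, y)
    importances = []
    for j in range(len(X[0])):
        drops = []
        for _ in range(n_repeats):
            column = [row[j] for row in X]
            rng.shuffle(column)  # break the feature-outcome relationship
            X_perm = [row[:j] + [v] + row[j + 1:] for row, v in zip(X, column)]
            drops.append(baseline - accuracy(model, X_perm, y))
        importances.append(sum(drops) / n_repeats)
    return baseline, importances


# Hypothetical model: flags high risk when feature 0 exceeds 2.0, ignores feature 1
def toy_model(row):
    return int(row[0] > 2.0)


X_test = [[1.0, 5.0], [3.0, 5.5], [1.5, 6.0], [4.0, 4.5], [0.5, 5.2], [2.5, 6.1]]
y_test = [0, 1, 0, 1, 0, 1]
baseline, importances = permutation_importance(toy_model, X_test, y_test)
```

Because the toy model never reads feature 1, shuffling it cannot change any prediction and its importance is exactly zero, whereas shuffling feature 0 lowers accuracy.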

Protocol: Local Explanation using LIME (Local Interpretable Model-agnostic Explanations)

Objective: To explain the prediction for a single, specific instance (e.g., one patient) by approximating the complex model locally with an interpretable one [18].

Materials: A trained "black box" model, a single data instance to explain.

Procedure:

- Select the patient of interest and obtain the model's prediction for them.

- Generate a perturbed dataset by creating slight variations of this patient's data.

- Get predictions from the complex model for each of these perturbed instances.

- Fit a simple, interpretable model (e.g., linear regression with Lasso) to this new dataset, weighting instances by their proximity to the original patient.

- The coefficients of the simple model serve as the local explanation.

Interpretation: LIME answers, "For this specific patient, which factors were the primary drivers of their high-risk prediction?" This aligns with clinical reasoning for individual cases [18].
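The local surrogate idea can be sketched from scratch as follows. This is not the `lime` package: it uses Gaussian perturbations, an exponential proximity kernel, and plain weighted least squares in place of the Lasso fit mentioned above, and the risk model, feature meanings, and parameter values are all hypothetical.

```python
import math
import random


def solve_linear_system(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [row[:] + [b[i]] for i, row in enumerate(A)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for k in range(col, n + 1):
                M[r][k] -= factor * M[col][k]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        x[i] = (M[i][n] - sum(M[i][k] * x[k] for k in range(i + 1, n))) / M[i][i]
    return x


def local_surrogate(black_box, instance, n_samples=200, scale=0.5,
                    kernel_width=1.0, seed=0):
    """Fit a proximity-weighted linear model around one instance."""
    rng = random.Random(seed)
    rows, targets, weights = [], [], []
    for _ in range(n_samples):
        z = [v + rng.gauss(0.0, scale) for v in instance]  # perturbed copy
        dist2 = sum((a - b) ** 2 for a, b in zip(z, instance))
        rows.append([1.0] + z)  # leading 1.0 is the intercept column
        targets.append(black_box(z))
        weights.append(math.exp(-dist2 / kernel_width ** 2))  # closer = heavier
    p = len(instance) + 1
    # Weighted normal equations: (X^T W X) beta = X^T W y
    xtwx = [[sum(w * r[i] * r[j] for r, w in zip(rows, weights)) for j in range(p)]
            for i in range(p)]
    xtwy = [sum(w * r[i] * t for r, t, w in zip(rows, targets, weights))
            for i in range(p)]
    beta = solve_linear_system(xtwx, xtwy)
    return beta[0], beta[1:]


# Hypothetical black box: x[0] is a lactate-like value, x[1] a pH-like value
def toy_risk_model(x):
    return 1.0 / (1.0 + math.exp(-(1.2 * x[0] - 4.0 * (x[1] - 7.4) - 3.0)))


intercept, coefs = local_surrogate(toy_risk_model, [3.0, 7.2])
```

The sign and size of each entry in `coefs` indicate how that feature pushes the prediction for this one patient; the kernel width controls how local the explanation is and would need tuning in practice.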

Protocol: Knowledge Graph Construction and Proximity Analysis

Objective: To structure clinical entities and their relationships into a network, and use proximity metrics to uncover novel connections for diagnosis and treatment [21].

Materials: Unstructured clinical text, medical ontologies, LLMs (e.g., BERT), graph database.

Procedure:

- Entity Recognition: Use a fine-tuned LLM to perform Named Entity Recognition (NER) on clinical text to extract entities (e.g., diseases, symptoms, drugs) [21].

- Relationship Extraction: Identify and define relationships between entities (e.g., Disease-A manifests_with Symptom-B, Drug-C treats Disease-A) to form subject-predicate-object triples [21].

- Graph Construction: Assemble the triples into a knowledge graph where nodes are entities and edges are relationships.

- Proximity Search: Calculate network-based proximity scores (e.g., shortest path distance, random walk with restart) between any two nodes (e.g., two diseases, a drug and a symptom) [21].

Interpretation: Closer proximity between diseases can predict similarities in their clinical manifestations and treatment approaches, providing a novel, interpretable perspective on disease relationships [21].
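The graph construction and proximity search steps can be sketched with a plain adjacency dictionary and breadth-first search. The triples below are hypothetical placeholders in the style of the examples above, edges are treated as undirected (an assumption), and only shortest path distance is shown, not random walk with restart.

```python
from collections import deque

# Hypothetical subject-predicate-object triples
triples = [
    ("Disease-A", "manifests_with", "Symptom-B"),
    ("Disease-D", "manifests_with", "Symptom-B"),
    ("Drug-C", "treats", "Disease-A"),
    ("Disease-E", "manifests_with", "Symptom-F"),
]


def build_graph(triples):
    """Undirected adjacency sets; the predicate is ignored for distance purposes."""
    graph = {}
    for subject, _predicate, obj in triples:
        graph.setdefault(subject, set()).add(obj)
        graph.setdefault(obj, set()).add(subject)
    return graph


def shortest_path_length(graph, source, target):
    """Number of edges on the shortest path, or None if the nodes are not connected."""
    if source not in graph or target not in graph:
        return None
    if source == target:
        return 0
    seen = {source}
    queue = deque([(source, 0)])
    while queue:
        node, dist = queue.popleft()
        for neighbour in graph[node]:
            if neighbour == target:
                return dist + 1
            if neighbour not in seen:
                seen.add(neighbour)
                queue.append((neighbour, dist + 1))
    return None


graph = build_graph(triples)
d_shared_symptom = shortest_path_length(graph, "Disease-A", "Disease-D")
d_drug_to_disease = shortest_path_length(graph, "Drug-C", "Disease-D")
d_unconnected = shortest_path_length(graph, "Disease-A", "Disease-E")
```

Two diseases that share a symptom sit two edges apart, so a short distance here points to overlapping manifestations, while `None` marks pairs with no connecting path in the graph.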

The Scientist's Toolkit: Essential Research Reagents & Platforms

Table 2: Key Tools and Platforms for Interpretable AI Research

| Item / Platform | Primary Function | Relevance to Interpretability |

|---|---|---|

| SHAP (Shapley Additive exPlanations) | Unified framework for feature attribution | Quantifies the marginal contribution of each feature to an individual prediction, based on game theory [18]. |

| LIME (Local Interpretable Model-agnostic Explanations) | Local surrogate model explanation | Approximates a complex model locally to provide instance-specific feature importance [18]. |

| Grad-CAM | Visual explanation for convolutional networks | Generates heatmaps on images (e.g., X-rays) to highlight regions most influential to the model's decision [18]. |

| CETSA (Cellular Thermal Shift Assay) | Target engagement validation in cells/tissues | Provides empirical, interpretable data on whether a drug candidate engages its intended target in a biologically relevant system [22]. |

| DALEX & lime R/Python Packages | Model-agnostic explanation software | Provides comprehensive suites for building, validating, and explaining ML models [16]. |

| Knowledge Graph Databases (e.g., Neo4j) | Network-based data storage and querying | Enables proximity analysis and relationship mining between clinical entities for hypothesis generation [21]. |

Visualizing Workflows for Interpretability

Workflow for Proximity-Based Clinical Interpretability Research

Diagram 1: Proximity-Based Clinical Insight Workflow

Multimodal Explanation for Medical VQA

Diagram 2: Multimodal Explainable VQA Framework

Application Note: Proximity Concepts in Clinical Research

Core Principles and Definitions

The concept of proximity—the physical closeness of molecules or computational elements—serves as a foundational regulatory mechanism across biological systems and computational networks. In clinical interpretability research, proximity-based analysis provides a unified framework for understanding complex systems, from protein interactions within cells to decision-making processes within neural networks. Chemically Induced Proximity (CIP) represents a deliberate intervention strategy using synthetic molecules to recruit neosubstrates that are not normally encountered or to enhance the affinity of naturally occurring interactions [23]. This approach has revolutionized both biological research and therapeutic development by enabling precise temporal control over cellular processes.

The fundamental hypothesis underlying proximity-based analysis is that effective interactions require physical closeness. In biological systems, reaction rates scale with concentration, which inversely correlates with the mean interparticle distance between molecules [24]. Similarly, in computational systems, mechanistic interpretability research investigates how neural networks develop shared computational mechanisms that generalize across problems, focusing on the functional "closeness" of processing elements that work together to solve specific tasks [25]. This parallel enables researchers to apply similar analytical frameworks to both domains, creating opportunities for cross-disciplinary methodological exchange.

Quantitative Foundations of Proximity Effects

The quantitative relationship between proximity and interaction efficacy follows well-established physical principles. The probability of an effective collision between two molecules is a third-order function of distance, allowing steep concentration gradients to produce qualitative changes in system behavior [24]. This mathematical foundation enables researchers to predict and model the effects of proximity perturbations in both biological and computational systems.
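One way to make the cubic dependence on distance concrete is to treat a recruited partner as confined to a sphere whose radius equals the induced separation, and to convert that volume into a molar concentration. This confinement model is an assumption made here for illustration rather than a derivation taken from [24], and the function name is hypothetical.

```python
import math

AVOGADRO = 6.02214076e23  # particles per mole


def effective_molarity(distance_nm):
    """Molar concentration of one molecule confined to a sphere of the given radius."""
    if distance_nm <= 0:
        raise ValueError("distance must be positive")
    radius_dm = distance_nm * 1e-8  # 1 nm = 1e-8 dm, and 1 dm^3 = 1 L
    volume_litres = (4.0 / 3.0) * math.pi * radius_dm ** 3
    return 1.0 / (AVOGADRO * volume_litres)


# Halving the separation raises the effective concentration eightfold
c_10nm = effective_molarity(10.0)
c_5nm = effective_molarity(5.0)
fold_change = c_5nm / c_10nm
```

Under this assumption a 10 nm separation corresponds to roughly 0.4 mM, and each halving of distance multiplies the effective concentration by eight, which is why modest changes in proximity can shift a system qualitatively.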

Table 1: Key Proximity Metrics Across Biological and Computational Domains

| Domain | Proximity Metric | Calculation Method | Interpretation |

|---|---|---|---|

| Biological Networks | Drug-Disease Proximity (z-score) | ( z = (d_c - μ)/σ ) where ( d_c ) = average shortest path between drug targets and disease proteins [26] [27] | z ≤ -2.0 indicates significant therapeutic potential [27] |

| Computational Networks | Component Proximity in Circuits | Analysis of attention patterns and activation pathways across model layers [25] | Identifies functionally related processing units |

| Experimental Biology | Chemically Induced Proximity Efficacy | Effective molarity and ternary complex stability measurements [28] [24] | Predicts functional consequences of induced interactions |

Experimental Protocols

Protocol 1: Network Proximity Analysis for Drug Repurposing

Purpose and Scope

Network Proximity Analysis (NPA) provides an unsupervised computational method to identify novel therapeutic applications for existing drugs by quantifying the network-based relationship between drug targets and disease proteins [26] [27]. This protocol details the steps for implementing NPA to identify candidate therapies for diseases with known genetic associations, enabling drug repurposing opportunities.

Materials and Reagents

- Computational Resources: Python environment with NetworkX or similar graph analysis libraries

- Data Sources:

- Human interactome data (protein-protein interaction network)

- Drug-target associations from DrugBank

- Disease-gene associations from GWAS catalog and OMIM

- Reference Implementation: Validated Python code from Guney et al. [27]

Procedure

Disease Gene Identification: Compile a list of genes significantly associated with the target disease through systematic literature review and database mining. Include only genes meeting genome-wide significance thresholds (p < 5 × 10⁻⁸) [27].

Interactome Preparation: Assemble a comprehensive human protein-protein interaction network, incorporating data from validated experimental sources. The interactome should include approximately 13,329 proteins and 141,150 interactions for sufficient coverage [26].

Drug Target Mapping: For each drug candidate, identify its known protein targets within the interactome. Drugs have approximately 3.5 targets on average, and targets typically have higher-than-average network connectivity (degree = 28.6 vs. the interactome average of 21.2) [26].

Proximity Calculation:

- Calculate ( d_c ), the average shortest path length between each drug target and its nearest disease protein in the interactome

- Compute the relative proximity z-score using ( z = (d_c - μ)/σ ), where μ and σ represent the mean and standard deviation of distances from randomly selected protein sets

- Apply a z-score threshold of ≤ -2.0 to identify statistically significant drug-disease pairs [27]

Validation and Prioritization: Cross-reference significant results with known drug indications to validate methodology, then prioritize novel candidates based on z-score magnitude and clinical feasibility.
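The proximity calculation can be sketched on a toy network as follows. This is not the reference implementation from [27]: the ring-shaped graph, node sets, and function names are hypothetical, and the null distribution draws random node sets uniformly, without the degree matching that a full analysis of a real interactome would likely need.

```python
import random
from collections import deque
from statistics import mean, pstdev


def bfs_distances(graph, source):
    """Shortest path length from source to every reachable node."""
    dist = {source: 0}
    queue = deque([source])
    while queue:
        node = queue.popleft()
        for neighbour in graph[node]:
            if neighbour not in dist:
                dist[neighbour] = dist[node] + 1
                queue.append(neighbour)
    return dist


def closest_distance(graph, targets, disease_proteins):
    """d_c: mean over drug targets of the distance to the nearest disease protein."""
    per_target = []
    for target in targets:
        dist = bfs_distances(graph, target)
        reachable = [dist[p] for p in disease_proteins if p in dist]
        if reachable:
            per_target.append(min(reachable))
    if not per_target:
        raise ValueError("no drug target can reach any disease protein")
    return mean(per_target)


def proximity_z(graph, targets, disease_proteins, n_random=200, seed=0):
    """z = (d_c - mu) / sigma against uniformly sampled random node sets."""
    rng = random.Random(seed)
    nodes = sorted(graph)
    d_c = closest_distance(graph, targets, disease_proteins)
    null = []
    for _ in range(n_random):
        rand_targets = rng.sample(nodes, len(targets))
        rand_disease = rng.sample(nodes, len(disease_proteins))
        null.append(closest_distance(graph, rand_targets, rand_disease))
    mu, sigma = mean(null), pstdev(null)
    if sigma == 0:
        raise ValueError("null distribution has zero variance")
    return d_c, (d_c - mu) / sigma


# Hypothetical interactome: a ring of 20 proteins
toy_graph = {i: {(i - 1) % 20, (i + 1) % 20} for i in range(20)}
d_c, z = proximity_z(toy_graph, targets=[0, 1], disease_proteins=[1, 2])
```

A negative z means the drug targets lie closer to the disease proteins than random sets of the same size; whether it clears the -2.0 threshold depends on the real network and on the randomization scheme.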

Expected Results and Interpretation

Application of this protocol to Primary Sclerosing Cholangitis (PSC) identified 42 medicinal products with z ≤ -2.0, including immune modulators such as basiliximab (z = -5.038) and abatacept (z = -3.787) as promising repurposing candidates [27]. The method also flagged metronidazole, the only previously researched agent for PSC to show significant proximity (z ≤ -2.0), which supports its validity [27].

Protocol 2: Development and Validation of Bio-Inspired Diagnostic Optimization

Purpose and Scope

This protocol describes the implementation of a hybrid diagnostic framework combining multilayer feedforward neural networks with nature-inspired optimization algorithms to enhance predictive accuracy in clinical diagnostics, specifically applied to male fertility assessment [29].

Materials and Reagents

- Clinical Dataset: 100 clinically profiled male fertility cases representing diverse lifestyle and environmental risk factors [29]

- Computational Framework:

- Multilayer feedforward neural network architecture

- Ant colony optimization (ACO) algorithm with adaptive parameter tuning

- Proximity search mechanism for feature selection

Procedure

Data Preparation and Feature Engineering:

- Collect comprehensive clinical profiles including sedentary habits, environmental exposures, and psychosocial stress factors

- Normalize continuous variables and encode categorical variables

- Partition data into training (70%), validation (15%), and test (15%) sets

Hybrid Model Implementation:

- Initialize feedforward neural network with one input layer, two hidden layers, and one output layer

- Integrate ant colony optimization for adaptive parameter tuning, mimicking ant foraging behavior to navigate parameter space

- Implement proximity search mechanism to identify optimal feature combinations

Model Training and Optimization:

- Train neural network using gradient descent with ACO-enhanced optimization

- Employ proximity-based feature importance analysis to identify key contributory factors

- Validate model generalizability using k-fold cross-validation

Performance Assessment:

- Evaluate classification accuracy, sensitivity, and specificity on unseen test samples

- Measure computational efficiency through inference time

- Assess clinical interpretability via feature importance rankings
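The data partition and performance assessment steps can be sketched as below. The sketch covers only the 70/15/15 split and the accuracy, sensitivity, and specificity calculations; it does not implement the neural network or the ant colony optimization, and the record list and label vectors are hypothetical.

```python
import random


def split_dataset(records, train=0.70, val=0.15, seed=0):
    """Shuffle and partition records into training, validation, and test sets."""
    rng = random.Random(seed)
    shuffled = records[:]
    rng.shuffle(shuffled)
    n_train = round(len(shuffled) * train)
    n_val = round(len(shuffled) * val)
    return (shuffled[:n_train],
            shuffled[n_train:n_train + n_val],
            shuffled[n_train + n_val:])


def classification_metrics(y_true, y_pred):
    """Accuracy, sensitivity (recall on positives), and specificity."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    return {
        "accuracy": (tp + tn) / len(y_true),
        "sensitivity": tp / (tp + fn) if (tp + fn) else float("nan"),
        "specificity": tn / (tn + fp) if (tn + fp) else float("nan"),
    }


# Hypothetical case identifiers for a 100-record dataset
train_set, val_set, test_set = split_dataset(list(range(100)))

# Hypothetical labels and predictions on a small held-out sample
metrics = classification_metrics([1, 1, 1, 0, 0, 0, 0, 0], [1, 1, 0, 0, 0, 0, 0, 1])
```

With only 15 test cases from a 100-record dataset, each misclassification moves these metrics by several percentage points, so reporting confidence intervals or cross-validated estimates alongside them is advisable.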

Expected Results and Interpretation

Implementation of this protocol for male fertility diagnostics achieved 99% classification accuracy, 100% sensitivity, and an ultra-low computational time of 0.00006 seconds, demonstrating both high performance and real-time applicability [29]. Feature importance analysis highlighted key risk factors including sedentary habits and environmental exposures, providing clinically actionable insights [29].

Protocol 3: Targeted Protein Degradation Using PROTAC Technology

Purpose and Scope

PROteolysis TArgeting Chimeras (PROTACs) represent a leading proximity-based therapeutic modality that induces targeted protein degradation by recruiting E3 ubiquitin ligases to target proteins [28] [23]. This protocol details the design, synthesis, and validation of PROTAC molecules for targeted protein degradation.

Materials and Reagents

- Ligand Components: Target protein-binding ligand, E3 ligase-recruiting ligand

- Linker Chemistry: Flexible chemical spacers of varying length and composition

- Cell Lines: Appropriate cellular models expressing target protein and E3 ligase machinery

- Analytical Tools: Western blot equipment, quantitative PCR, cellular viability assays

Procedure

PROTAC Design:

- Select target-binding ligand with demonstrated affinity for protein of interest

- Choose E3 ligase ligand based on tissue distribution and compatibility (common choices: VHL, CRBN, IAP ligands)

- Design linker composition and length to optimize ternary complex formation

Synthesis and Characterization:

- Synthesize PROTAC molecules using modular conjugation chemistry

- Confirm molecular identity and purity through LC-MS and NMR

- Assess membrane permeability and physicochemical properties

Cellular Efficacy Assessment:

- Treat cells with PROTAC molecules across a concentration gradient (typically 0.1 nM to 10 μM)

- Measure target protein degradation via western blot at multiple time points (2-24 hours)

- Assess downstream functional consequences through relevant phenotypic assays

Mechanistic Validation:

- Confirm ubiquitin-proteasome system dependence using proteasome inhibitors (e.g., MG132)

- Verify E3 ligase requirement through CRISPR knockout or dominant-negative approaches

- Demonstrate ternary complex formation using techniques such as co-immunoprecipitation or proximity assays

Expected Results and Interpretation

Successful PROTAC molecules typically demonstrate DC₅₀ values in the low nanomolar range and maximum degradation (Dmax) >80% within 4-8 hours of treatment [28]. The catalytic nature of PROTACs enables sub-stoichiometric activity, and the induced proximity mechanism can address both enzymatic and scaffolding functions of target proteins [28]. Currently, approximately 26 PROTAC degraders are advancing through clinical trials, validating this proximity-based approach as a transformative therapeutic strategy [28].
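As an illustration of how the two summary values might be read off a dose-response series, the sketch below estimates Dmax as the largest observed degradation and the half-maximal degradation concentration by log-linear interpolation. It assumes the fraction of target protein remaining has already been quantified (for example, from normalized western blot band intensities) and takes the half-maximal definition of DC₅₀; the data points are hypothetical.

```python
import math


def estimate_dmax_dc50(concs_nM, remaining_fraction):
    """Return (Dmax in percent, DC50 in nM) from a dose-response series.

    concs_nM must be sorted in ascending order; remaining_fraction is the
    target protein level relative to vehicle control (1.0 = no degradation).
    """
    degradation = [100.0 * (1.0 - r) for r in remaining_fraction]
    dmax = max(degradation)
    half = dmax / 2.0
    points = list(zip(concs_nM, degradation))
    for (c0, d0), (c1, d1) in zip(points, points[1:]):
        if d0 < half <= d1:
            # Interpolate on a log10 concentration axis between the two doses.
            frac = (half - d0) / (d1 - d0)
            log_c = math.log10(c0) + frac * (math.log10(c1) - math.log10(c0))
            return dmax, 10 ** log_c
    return dmax, None  # half-maximal level not bracketed by the tested doses


# Hypothetical measurements, for illustration only.
concs = [0.1, 1, 10, 100, 1000, 10000]
remaining = [0.95, 0.80, 0.45, 0.15, 0.10, 0.10]
dmax, dc50 = estimate_dmax_dc50(concs, remaining)
print(f"Dmax = {dmax:.0f}%, DC50 = {dc50:.1f} nM")
```

Interpolation is only a quick estimate; fitting a full dose-response model to replicate measurements would be the more rigorous route.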

Visualization of Proximity Mechanisms

[Figure: PROTAC Mechanism Diagram]

[Figure: Network Proximity Analysis Workflow]

[Figure: Hybrid Diagnostic Optimization Framework]

The Scientist's Toolkit: Research Reagent Solutions

Table 2: Essential Research Reagents for Proximity-Based Investigations

| Reagent/Technology | Category | Function and Application | Key Characteristics |

|---|---|---|---|

| PROTAC Molecules | Bifunctional Degraders | Induce target protein degradation via E3 ligase recruitment [28] [23] | Modular design; catalytic activity; sub-stoichiometric efficacy |

| Molecular Glues | Monomeric Degraders | Enhance naturally occurring or create novel E3 ligase-target interactions [28] | Lower molecular weight; drug-like properties; serendipitous discovery |

| Network Proximity Analysis Code | Computational Tool | Quantifies drug-disease proximity in protein interactome [26] [27] | Python implementation; z-score output; validated thresholds |

| Ant Colony Optimization | Bio-inspired Algorithm | Adaptive parameter tuning through simulated foraging behavior [29] | Nature-inspired; efficient navigation of complex parameter spaces |

| Chemical Inducers of Proximity (CIPs) | Synthetic Biology Tools | Enable precise temporal control of cellular processes [5] [24] | Rapamycin-based systems; rapid reversibility; precise temporal control |

The integration of proximity concepts across biological and computational domains provides a powerful unifying framework for clinical interpretability research. The experimental protocols and analytical methods detailed in this document enable researchers to leverage proximity-based approaches for therapeutic discovery, diagnostic optimization, and mechanistic investigation. As the field advances, emerging opportunities include the development of more sophisticated proximity-based modalities, enhanced computational methods for analyzing proximity networks, and novel clinical applications across diverse disease areas. The continued convergence of biological and computational proximity research promises to accelerate the development of interpretable, effective clinical interventions.

Building Transparent Clinical AI: Proximity-Based Methods and Applications

Theoretical Foundation & Mechanism

This protocol details the implementation of a proximity-based evidence retrieval mechanism designed to enhance the interpretability and reliability of uncertainty-aware decision-making in clinical research. The core innovation replaces a single, global decision cutoff with an instance-adaptive, evidence-conditioned criterion [30]. For each test instance (e.g., a new patient's clinical data), proximal exemplars are retrieved from an embedding space. The predictive distributions of these exemplars are fused using Dempster-Shafer theory, resulting in a fused belief that serves as a transparent, per-instance thresholding mechanism [30]. This approach materially reduces confidently incorrect outcomes and provides an auditable trail of supporting evidence, which is critical for clinical applications [30].
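For reference, the fusion step rests on Dempster's rule of combination. The block below gives the textbook form for two mass functions $m_1$ and $m_2$ over a frame of discernment, together with the belief and plausibility derived from a mass function $m$. How [30] builds the mass functions from exemplar predictions is not specified here, so the notation is generic rather than that of the cited work.

```latex
\begin{aligned}
K &= \sum_{B \cap C = \emptyset} m_1(B)\, m_2(C), \\
(m_1 \oplus m_2)(A) &= \frac{1}{1-K} \sum_{B \cap C = A} m_1(B)\, m_2(C), \qquad A \neq \emptyset, \\
\mathrm{Bel}(A) &= \sum_{B \subseteq A} m(B), \qquad
\mathrm{Pl}(A) = \sum_{B \cap A \neq \emptyset} m(B).
\end{aligned}
```

Here $K$ measures the conflict between the two sources, and the interval $[\mathrm{Bel}(A), \mathrm{Pl}(A)]$ bounds the support for outcome $A$; a wide interval signals that the retrieved evidence leaves the case unresolved.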

Application Protocol: Clinical Evidence Retrieval Workflow

Objective: To retrieve and fuse evidence from similar clinical cases to support a diagnostic or treatment decision for a new patient, providing a quantifiable measure of uncertainty.

Materials:

- Clinical Dataset: A structured database of historical patient records, including features (e.g., lab results, imaging features, demographics) and outcomes.

- Embedding Model: A pre-trained model (e.g., BiT, ViT, or a clinical NLP model) to project patient records into a numerical embedding space [30].

- Similarity Metric: A function (e.g., cosine similarity, Euclidean distance) to calculate proximity between patient records in the embedding space.

- Evidence Retrieval System: Computational framework to perform nearest-neighbor search.

Procedure:

- Query Instance Encoding: For a new patient (the "query instance"), encode their clinical data into a feature vector using the embedding model.

- Proximity Search: Execute a k-Nearest Neighbors (k-NN) search within the historical dataset to identify the k most proximal exemplars to the query instance. The distance metric defines the proximity constraint [31] [32].

- Evidence Extraction: Extract the known outcomes or predictive distributions associated with each of the k retrieved exemplars.

- Evidence Fusion: Fuse the predictive distributions of the retrieved exemplars using Dempster-Shafer theory to generate a combined belief and plausibility measure for each possible outcome [30].

- Uncertainty-Aware Decision:

- The fused belief is used as an adaptive confidence threshold.

- If the belief for a particular outcome exceeds a pre-defined operational minimum (e.g., 0.85), the decision is made with high confidence.

- If no outcome's belief meets the threshold, the case is flagged for manual review by a clinical expert, which keeps the review load sustainable [30].
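The workflow above can be sketched end to end in a few dozen lines. This is a minimal illustration and not the implementation from [30]. Several pieces are assumptions made for the example: cosine similarity as the proximity measure, a fixed reliability discount of 0.8 that reserves some mass for ignorance, two outcome labels, and the hypothetical exemplar records.

```python
import math

THETA = "THETA"  # the whole frame of discernment, i.e. "don't know"


def cosine_similarity(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def retrieve_k_nearest(query, exemplars, k):
    """Return the k exemplars most similar to the query embedding."""
    ranked = sorted(exemplars,
                    key=lambda e: cosine_similarity(query, e["embedding"]),
                    reverse=True)
    return ranked[:k]


def to_mass(probs, reliability=0.8):
    """Discount a predictive distribution, assigning the rest to ignorance."""
    mass = {label: reliability * p for label, p in probs.items()}
    mass[THETA] = 1.0 - reliability
    return mass


def dempster_combine(m1, m2):
    """Dempster's rule for masses on singleton outcomes plus THETA."""
    fused = {}
    for label in (a for a in m1 if a != THETA):
        fused[label] = (m1[label] * m2[label] + m1[label] * m2[THETA]
                        + m1[THETA] * m2[label])
    fused[THETA] = m1[THETA] * m2[THETA]
    total = sum(fused.values())  # equals 1 - K, the non-conflicting mass
    if total == 0:
        raise ValueError("sources are in total conflict")
    return {a: v / total for a, v in fused.items()}


def decide(query, exemplars, k=3, min_belief=0.85):
    """Fuse evidence from the k nearest exemplars and apply the threshold."""
    neighbours = retrieve_k_nearest(query, exemplars, k)
    fused = to_mass(neighbours[0]["probs"])
    for exemplar in neighbours[1:]:
        fused = dempster_combine(fused, to_mass(exemplar["probs"]))
    label, belief = max(((a, v) for a, v in fused.items() if a != THETA),
                        key=lambda item: item[1])
    decision = label if belief >= min_belief else "manual_review"
    return decision, belief, neighbours


# Hypothetical historical cases: 2-D embeddings with predictive distributions.
cases = [
    {"embedding": [1.0, 0.0], "probs": {"disease": 0.90, "healthy": 0.10}},
    {"embedding": [0.9, 0.1], "probs": {"disease": 0.80, "healthy": 0.20}},
    {"embedding": [0.8, 0.3], "probs": {"disease": 0.85, "healthy": 0.15}},
    {"embedding": [0.0, 1.0], "probs": {"disease": 0.10, "healthy": 0.90}},
    {"embedding": [0.1, 0.9], "probs": {"disease": 0.20, "healthy": 0.80}},
]

clear_case = decide([1.0, 0.05], cases)
mixed_case = decide([0.6, 0.8], cases)
print(clear_case[0], round(clear_case[1], 3))
print(mixed_case[0], round(mixed_case[1], 3))
```

The third element of each result is the list of retrieved exemplars, which is what makes the decision auditable: a reviewer can inspect exactly which historical cases supported or contradicted the outcome.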

Performance Data & Validation

Experimental validation on benchmark datasets demonstrates the efficacy of the proximity-based retrieval model compared to advanced baselines. The following tables summarize key quantitative findings.

Table 1: Model Performance Comparison on Clinical Retrieval Tasks

| Model | MAP (Mean Average Precision) | F1 Score | Key Feature |

|---|---|---|---|

| HRoc_AP (Proximity-Based) | 0.085 (improvement over PRoc2) | 0.0786 (improvement over PRoc2) | Adaptive term proximity feedback, self-adaptive window size [33] |

| PRoc2 | Baseline | Baseline | Traditional pseudo-relevance feedback [33] |

| TF-PRF | -0.1224 (vs. HRoc_AP MAP) | -0.0988 (vs. HRoc_AP F1) | Term frequency-based feedback [33] |
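For readers less familiar with the retrieval metrics in this table, the sketch below shows how mean average precision and F1 are commonly computed from ranked result lists. The document identifiers and relevance judgments are hypothetical, and the exact evaluation settings of [33] (for example, rank cutoffs) are not reproduced.

```python
def average_precision(ranked_ids, relevant_ids):
    """Average of precision values at each rank where a relevant item appears."""
    relevant = set(relevant_ids)
    hits, precision_sum = 0, 0.0
    for rank, doc_id in enumerate(ranked_ids, start=1):
        if doc_id in relevant:
            hits += 1
            precision_sum += hits / rank
    return precision_sum / len(relevant) if relevant else 0.0


def mean_average_precision(runs):
    """runs is a list of (ranked_ids, relevant_ids) pairs, one per query."""
    return sum(average_precision(r, rel) for r, rel in runs) / len(runs)


def f1_score(retrieved_ids, relevant_ids):
    """Harmonic mean of precision and recall for an unranked result set."""
    retrieved, relevant = set(retrieved_ids), set(relevant_ids)
    tp = len(retrieved & relevant)
    if tp == 0:
        return 0.0
    precision = tp / len(retrieved)
    recall = tp / len(relevant)
    return 2 * precision * recall / (precision + recall)


# Hypothetical queries, for illustration only.
runs = [
    (["d1", "d2", "d3", "d4"], ["d1", "d3"]),
    (["d5", "d6", "d7"], ["d6"]),
]
map_value = mean_average_precision(runs)
f1_value = f1_score(*runs[0])
print(round(map_value, 4), round(f1_value, 4))
```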

Table 2: Uncertainty-Aware Performance on CIFAR-10/100

| Model / Method | Confidently Incorrect Outcomes (%) | Review Load | Interpretability |

|---|---|---|---|

| Proximity-Based Evidence Retrieval | Materially Fewer | Sustainable | High (Explicit evidence) [30] |

| Threshold on Prediction Entropy | Higher | Less Controlled | Low (Black-box) [30] |

Workflow & System Visualization

The following diagram illustrates the logical workflow and data flow of the proximity-based evidence retrieval system.

[Figure: Proximity-Based Clinical Evidence Retrieval Workflow]

Research Reagent Solutions

Table 3: Essential Materials for Proximity-Based Clinical Retrieval Research

| Item | Function / Description | Example / Specification |

|---|---|---|

| TREC Clinical Datasets | Standardized corpora for benchmarking clinical information retrieval systems. | TREC 2016/2017 Clinical Support Track datasets [33]. |

| Pre-trained Embedding Models | Converts clinical text (e.g., EHR notes) or structured data into numerical vectors. | BiT (ResNet), ViT, or domain-specific clinical BERT models [30]. |

| Similarity Search Library | Software for efficient high-dimensional nearest-neighbor search. | FAISS (Facebook AI Similarity Search), Annoy, or Scikit-learn's NearestNeighbors. |

| Dempster-Shafer Theory Library | Implements the evidence fusion logic to combine predictive distributions. | Custom implementations, e.g., built on NumPy or on a probabilistic programming library such as PyMC3. |

| Proximity Operator (N/W) | Defines the proximity constraint for retrieving relevant evidence. | N/5 finds terms within 5 words, in any order; W/3 finds terms within 3 words, in exact order [2] [32]. |
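The two text proximity operators in the last row can be mimicked with a short function. This sketch assumes that "within n words" means the token positions differ by at most n; database vendors differ on the exact counting rule, so treat the boundary behavior as an assumption. The tokenizer and function names are hypothetical.

```python
import re


def tokenize(text):
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def near(text, term_a, term_b, max_distance, ordered=False):
    """Return True if term_a and term_b occur within max_distance tokens.

    ordered=False mimics N/n (any order); ordered=True mimics W/n
    (term_a must appear before term_b).
    """
    tokens = tokenize(text)
    positions_a = [i for i, t in enumerate(tokens) if t == term_a.lower()]
    positions_b = [i for i, t in enumerate(tokens) if t == term_b.lower()]
    for i in positions_a:
        for j in positions_b:
            gap = j - i
            if ordered and 0 < gap <= max_distance:
                return True
            if not ordered and 0 < abs(gap) <= max_distance:
                return True
    return False


note = "Ventricular tachycardia was detected early in the patient"
print(near(note, "patient", "tachycardia", 5))                  # N/5
print(near(note, "tachycardia", "detected", 3, ordered=True))   # W/3
print(near(note, "detected", "tachycardia", 3, ordered=True))   # W/3, reversed
```

The reversed W/3 query fails while the unordered N/3 query on the same pair would succeed, which is the practical difference between the two operators.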

The integration of artificial intelligence in clinical diagnostics faces a significant challenge: the trade-off between model performance and interpretability. This is particularly critical in cardiology, where ventricular tachycardia (VT)—a life-threatening arrhythmia that can degenerate into ventricular fibrillation and sudden cardiac death—demands both high diagnostic accuracy and clear, actionable insights for clinicians [34]. Proximity-informed models present a promising pathway to bridge this gap. These models leverage geometric relationships and neighborhood information within data to make predictions that are not only accurate but also inherently easier to interpret and justify clinically. This document details the application of these models for VT diagnosis, framing the methodology within the broader thesis that proximity search mechanisms are fundamental to advancing clinical interpretability research.

Quantitative Performance of Diagnostic Models for Ventricular Tachycardia

Recent research demonstrates the potential of advanced computational models to achieve high performance in detecting and classifying cardiac arrhythmias. The following table summarizes key quantitative findings from recent studies, which serve as benchmarks for proximity-informed model development.

Table 1: Performance Metrics of Recent Computational Models for Arrhythmia Detection

| Model / Approach | Application Focus | Key Performance Metrics | Reference |

|---|---|---|---|

| Topological Data Analysis (TDA) with k-NN | VF/VT Detection & Shock Advice | 99.51% Accuracy, 99.03% Sensitivity, 99.67% Specificity in discriminating shockable (VT/VF) vs. non-shockable rhythms. | [35] |

| TDA with k-NN (Four-way Classification) | Rhythm Discrimination (VF, VT, Normal, Other) | Average Accuracy: ~99% (98.68% VF, 99.05% VT, 98.76% normal sinus, 99.09% Other). Specificity >97.16% for all classes. | [35] |

| Bio-inspired Hybrid Framework (Ant Colony Optimization + Neural Network) | Male Fertility Diagnostics (Conceptual parallel for diagnostic precision) | 99% Classification Accuracy, 100% Sensitivity, Computational Time: 0.00006 seconds. | [29] |

| Genotype-specific Heart Digital Twin (Geno-DT) | Predicting VT Circuits in ARVC Patients | GE Group: 100% Sensitivity, 94% Specificity, 96% Accuracy; PKP2 Group: 86% Sensitivity, 90% Specificity, 89% Accuracy. | [36] |